Introduction

Within the European Higher Education Area (EHEA) framework, it is essential for students to develop competences that allow them to consciously construct knowledge, to learn in different contexts and modalities throughout their lives, to deal with future learning challenges and to adapt knowledge to changing situations (Cano, 2008). The acquisition of competences by students is the primary objective of education and contents are indispensable resources for the development of those competences (Bolívar, 2008).

In the project entitled Defining and Selecting Key Competencies put forth by the Organization for Economic Cooperation and Development (OECD), competence is defined as “the ability to respond to complex demands and carry out different tasks appropriately…, this entails a combination of practical skills, knowledge, motivation, ethical values, attitudes, emotions, and other social and behavioral components that are mobilized together to achieve effective action” (Ministry of Education, Culture and Sport of the Government of Spain, Ministerio de Educación, Cultura y Deporte, MECD, 2013, pp. 17-18).

The ordinance on official university education (Royal Decree 1393/2007) states that students should acquire both general and specific competences. Through cross-curricular competences one gains the ability to continue learning and keeping oneself up to date throughout one's life, develops the ability to communicate and work in multidisciplinary and multicultural groups and learns to make the best use of all available resources (Agencia Nacional de Evaluación de la Calidad y Acreditación, 2014). Specifically, the cross-curricular competence of learning to learn is a basic competence that has a great influence on all others, primarily because it is key for the permanent learning that takes place in different vital contexts of human development (Black et al., 2006). This competence includes a set of skills that require personal autonomy, critical thought and the capacity to reflect on the learning process itself (Black et al., 2006). Learning to learn entails developing knowledge about the mental processes involved in knowledge construction and applying new knowledge and abilities in other contexts (MECD, 2018). Furthermore, in order to acquire this competence it is necessary to develop attitudes and values such as motivation and confidence. This allows the student to plan realistic objectives and achieve goals, which in turn feeds back into his or her confidence and personal ability to achieve tasks and set more complex learning objectives (MECD, 2018).

In the present Spanish educational system, the primary responsibility for providing comprehensive training in competences falls to educators, which has meant that this group has had to face demanding training challenges and come to terms with a new teaching approach focused on the student (Zabalza, 2009). Regarding the development of the learning to learn competence within the university framework, the importance of carrying this out through facilitative teaching and learning contexts has been pointed out (Fazey & Fazey, 2001; Wingate, 2007). In this respect, many different variables have been associated with the development of this competence (Coll et al., 2012; Muñoz-San Roque et al., 2016; Villardón et al., 2013). On the one hand, the teaching-learning approaches of university educators should be noted (Monroy et al., 2015; Trigwell & Prosser, 2004). Two types of teaching approaches are identified: one characterized by a strategy that focuses on the educator, in which teaching is understood as transmission or communication, and an opposite approach that applies a strategy that focuses on the student. The former involves a traditional view of learning as knowledge acquisition, in which the amount of learning is emphasized, while in the latter, teaching is understood as the process of creating learning opportunities in which the educator tries to influence both the way in which the students think about their learning and the construction of knowledge. In order for teaching to facilitate the learning to learn competence and deeper learning, the educator's approach to teaching must focus on the student (Trigwell & Prosser, 2004).

On the other hand, a number of authors have associated metacognition with greater development of the learning to learn competence (Fazey & Fazey, 2001; Veenman et al., 2006). Metacognition refers to the ability to reflect on learning processes and understand them in depth (Veenman et al., 2006). This allows students to expand their knowledge of themselves as people in training and inspires autonomous learning, which, in turn, influences learning self-regulation (van der Stel & Veenman, 2010). This process requires the student to define objectives, plan processes to achieve those objectives, regulate learning development, and develop the ability to evaluate his or her own process (Villardón et al., 2013).

The learning to learn competence in the university setting consists of a complex process of personal development that for the student involves making changes in his or her perceptions, in his or her learning habits, and in his or her epistemological beliefs (Wingate, 2007). Therefore, in order for this competence to be successfully developed, students must receive personalized support (Zabalza, 2009). This means a positive educational environment, based on respect and mutual trust, in which the individual feels heard, accepted and empowered. To accomplish this, it is essential that the relationships between educator and students be positive and close, characterized by fluid two-way communication in which meanings are shared (Coll et al., 2012). The students must also understand that they are active and responsible individuals, capable of directing their own learning (Putwain et al., 2013). All of these are aspects that strengthen their academic self-concept (Coll et al., 2012).

Furthermore, the development of the learning to learn competence must take place in different contexts and through a diverse methodology (Wingate, 2007). Starting with the principle that a methodology must be based on meaningful learning, it must also be functional, inspiring and aimed at action, able to combine the individual sphere with the social (Novak, 2002). It is worth noting that some research has found that the use of diverse methodologies facilitates learning and makes it possible to respond to the heterogeneity of student demands (Wingate, 2007; Zabalza, 2009). The methodology for the development of the learning to learn competence involves posing learning situations typical of the profession, real or simulated, and a system for evaluation that is not separate from training (Monereo & Lemus, 2010). Likewise, the students must be considered active subjects in this process and their critical reflection on their practice must be strengthened, a necessary behavior so that they may incorporate improvements in their learning process, this being the primary objective of evaluation (Margalef, 2014).

On the other hand, effective teaching of the learning to learn competence also requires an instructional commitment (Wingate, 2007); that is, valuing and enjoying the profession, demonstrating affective and intellectual involvement in the work of teaching, internalizing an enterprising culture and expressing a positive attitude toward change (Zabalza, 2009). The quality of higher education depends on the availability of a teaching staff with excellent training in the development of competences. This in turn requires creating measurement instruments that evaluate the state of their implementation. With respect to the learning to learn competence, although there are valid measurement instruments to evaluate some of the variables mentioned above (e.g., the Spanish version of the Approaches to Teaching Inventory, S-ATI-20, Monroy et al., 2015; and the Self-Perception Scale of the Level of Development of the Learning to Learn Competence, la Escala de Autopercepción del Nivel de Desarrollo de la Competencia de Aprender a Aprender, EADCAA, Muñoz-San Roque et al., 2016), most are based on the student perspective (e.g., the Learning Competence Scale, LCS, Villardón et al., 2013), and there are currently no instruments in Spanish that allow specific, valid and reliable measurement of the development of this competence in university teaching staff. Thus, the purpose of the present study was to design and validate a questionnaire to measure facilitative learning contexts implemented by university teaching staff to develop the learning to learn competence.

Method

Participants

The instrument was administered to 415 educators (204 women and 211 men; M of age = 45.03; SD = 10.58) in higher education from various universities in Spain. Sample selection was random, but we tried to achieve balance with regard to gender, age group, ownership of the universities in question, subject taught and participants' teaching experience. Likewise, we ensured that all areas of knowledge were represented (Table 1). Participation was voluntary and all participants gave their informed consent before being included in the study. Educators not actively teaching in higher education at the time of the study were excluded (n = 15).

Instruments

Learning to Learn Questionnaire (LLQ). The LLQ is a questionnaire that measures facilitative teaching and learning contexts for the development of the learning to learn competence in university teaching staff. It consists of 39 items to which the participant responds using a 5-point Likert scale ranging from “Strongly disagree/Almost never” to “Strongly agree/Almost always.” The LLQ takes approximately 20-25 minutes to complete and includes 4 factors. The Commitment to Students and to Teaching factor (8 items) focuses on the educator's involvement in having an impact on the student's personal and professional development. It also refers to the educator's commitment to the profession and to the quality of teaching through the analysis of his or her own practice and continuous improvement (e.g., “I believe that I learn continually in my interaction with students and that this contributes to my personal and professional development”). The Classroom Environment factor (7 items) refers to teaching-learning environments based on acceptance, commitment and mutual respect, for which it is key that positive and close relationships be established both between instructors and students and among students (e.g., “I value the students' contributions and let them know it”). The Methodology factor (15 items) refers to active teaching-learning methodologies focusing on the students. These are methodologies that include an investigative approach and explore complex situations related to the sociocultural situation of the students. Teaching is understood to be a process of the creation of learning opportunities in which the educator tries to influence both the way in which the students think about their learning and the creation of knowledge (e.g., “I prioritize methodological strategies that involve inquiry and problem solving”). 
Finally, the Self-Regulation factor (9 items) describes the type of support provided by the educator so that the student makes an appropriate transition from external to internal regulation of the learning process. For this purpose, it favors metacognition and inspires autonomous learning, which in turn influences self-regulation in learning (e.g., “I self-evaluate my intervention in the classroom with the students in order to offer strategies to help the students develop this self-evaluation ability”).

Spanish version of the Approaches to Teaching Inventory (S-ATI-20, Monroy et al., 2015; original version by Trigwell and Prosser, 2004). The S-ATI-20 measures the teaching approaches of university educators. It consists of 20 items grouped into two 10-item scales, each of which represents one of the extremes on a continuum of teaching approaches: Professor-Centered Transmission of Information (PCTI) (e.g., “It is recommended that students base their studies on what I offer them”), and Student-Centered Conceptual Change (SCCC) (e.g., “In the class periods of this course I deliberately incite debate and discussion”). The PCTI refers to a type of approach that addresses teaching by focusing on the professor in terms of how he or she structures, presents, manages and transmits the subject matter, independent of what the students do. For the purposes of the present study, we used the SCCC, which is characterized as a teaching approach that focuses on the student. In our sample, the internal consistency index determined by Cronbach's alpha coefficient was .91.

Procedure

First, the construct under consideration was conceptualized and operationalized. Next, we defined who to evaluate and the use to which the obtained scores would be put, and discussed the reason for and viability of the creation of the instrument (Abad et al., 2011). This process gave rise to the creation of the items and to the proposal of the dimensions to which those items could theoretically be assigned. The first version of the questionnaire consisted of 86 items.

In order to determine to what extent the content of the questionnaire was consistent with and appropriate for its specific objectives, an evaluation was carried out by a group of experts. Specifically, a total of 18 expert judges (6 men and 12 women) from various Spanish universities participated, as did 2 expert judges (1 man and 1 woman) from one Chilean university. The following criteria were followed to select people for the panel of experts: experience with the construct under consideration; reputation in the scientific community; availability and motivation to participate; and impartiality.

The process was carried out in two phases. First, 5 experts were chosen to be part of a work group. After specifying to the participants both the objective of the test and the dimensions and indicators measured by each of the items, a consensus was reached through discussion about the validity of the dimensions and the items. With the agreed upon version as a point of departure, a panel of experts (n = 15) was created and the Delphi method was applied to evaluate the content validity of the test. To evaluate the relevance and representativity of the dimensions and the items, a tool was created with its respective indicators for both quantitative (a 10-point Likert scale; from “not at all relevant” to “totally relevant”) and qualitative assessment. Once the first assessment was completed, each of the experts was informed as to the answers and the reasons given by the rest of the participants and they were given the chance to change a response after evaluating other possible arguments. Two rounds of assessment were conducted until the number of items per dimension was established and a first version of the questionnaire was achieved. The entire process was carried out telematically. Dimensions and items with quantitative scores lower than 7 or qualitative scores with less than 70% agreement among experts were eliminated (Bulger & Housner, 2007).
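The elimination rule described above can be illustrated with a minimal Python sketch. It assumes that the "quantitative score" of an item is the mean of the experts' ratings on the 10-point scale and that qualitative agreement is expressed as a proportion; the text does not specify how scores were aggregated, and the function name, item labels and ratings below are hypothetical.

```python
from statistics import mean

def retain_item(ratings, agreement, min_score=7.0, min_agreement=0.70):
    """Return True if an item survives the elimination rule.

    ratings: expert ratings on the 10-point relevance scale.
    agreement: proportion of experts agreeing in the qualitative assessment.
    Assumption: the quantitative score is the mean rating across experts.
    """
    return mean(ratings) >= min_score and agreement >= min_agreement

# Hypothetical ratings from a 15-member panel (illustrative only).
items = {
    "item_a": ([9, 8, 10, 9, 8, 9, 10, 7, 8, 9, 9, 8, 10, 9, 8], 0.93),
    "item_b": ([6, 5, 7, 6, 8, 5, 6, 7, 6, 5, 6, 7, 6, 5, 6], 0.80),
    "item_c": ([8, 9, 8, 7, 9, 8, 8, 9, 7, 8, 9, 8, 8, 9, 8], 0.60),
}

# item_b fails the score criterion; item_c fails the agreement criterion.
retained = [name for name, (r, a) in items.items() if retain_item(r, a)]
print(retained)
```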

To obtain evidence of validity based on the response processes, the cognitive interview method was used (Miller et al., 2014). The questionnaire was given to 10 university educators. First, while they completed the questionnaire, participants were asked to verbalize their thoughts. Next, verbal information about the answers given during the questionnaire was compiled through a semi-structured interview. In this way, the quality of the responses was evaluated and it was determined whether the items elicited the expected information. Minor modifications were made. For example, the item “When I send appreciative messages, I keep in mind the characteristics of each student, the moment and the possible consequences” caused certain comprehension problems, so the words “appreciative messages” were changed to “opinions and assessments,” which was easier to understand while remaining faithful to the meaning of the original item.

Next, a pilot study was carried out in order to obtain empirical data on the application of the LLQ. A total of 50 university educators (64.4% women and 35.4% men) between 25 and 60 years of age participated. An analysis of response trends was carried out on the items; we also conducted an analysis of indices based on distribution and a study of the relationship between each of the items that composed the scale and the scale itself according to the discrimination index. Thus, we obtained a preliminary version of the LLQ that included 64 items.

Finally, in order to obtain evidence of the validity and reliability of LLQ scores, we got in touch telematically with 1500 instructors from various Spanish universities and invited them to participate in the study.

Data analysis

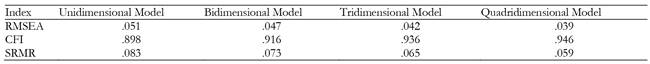

For our study of the evidence of the validity of the internal structure of the LLQ, the total sample (n = 415) was divided into two equivalent groups by random selection using the SPSS V. 24 program. An exploratory factorial analysis (EFA) was carried out on the first half (n = 207), and a confirmatory factorial analysis (CFA) on the second half (n = 208). In order to carry out the EFA of the preliminary version of the LLQ, the FACTOR 10.8.02 program (Lorenzo-Seva & Ferrando, 2013) and the Mplus v7.4 software were used. Responses to the items were treated as ordinal categorical variables. Therefore, a factorial method of analysis was chosen with the categorical variable methodology (GVM-AF, Muthén & Kaplan, 1985) based on polychoric inter-item correlations. First, using the polychoric matrix of correlations as a point of departure, the number of dimensions to retain was determined through the procedure of optimized parallel analysis with random extraction of 500 submatrices and based on minimum rank factor analysis (Timmerman & Lorenzo-Seva, 2011). Next, the EFA was carried out using unweighted least squares estimation and promin factor rotation (Lorenzo-Seva, 1999), since it was expected that the factors would correlate. The suitability of the data for factorial analysis was tested using Bartlett's test of sphericity and the Kaiser-Meyer-Olkin (KMO) index, and items were selected that showed standardized factor loadings greater than .30. The following indices were used to determine the goodness of fit of the model: the root mean square error of approximation (RMSEA), the goodness of fit index (GFI), and the standardized root mean square residual (SRMR). A multiple imputation procedure (Hot-Deck Multiple; Lorenzo-Seva & Van Ginkel, 2016) was used to manage missing data.
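The random division of the sample into an EFA half and a CFA half can be sketched as follows. This is only an illustration in Python of a seeded random split; the study itself used SPSS, and the function name, the participant identifiers and the seed are assumptions introduced here.

```python
import random

def split_sample(ids, seed=0):
    """Randomly split participant ids into two halves.

    With an odd number of ids, the second half receives the extra case.
    The seed is an arbitrary value used only to make the split reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical participant ids 1..415.
efa_ids, cfa_ids = split_sample(range(1, 416))
print(len(efa_ids), len(cfa_ids))
```

With 415 participants, this yields subsamples of 207 and 208 cases, the sizes reported for the EFA and CFA halves.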

In order to validate the factorial solution suggested by the EFA, a CFA was carried out with the second half of the sample (n = 208). Data were analyzed using the Mplus version 7.4 software. To analyze the fit of the proposed model in the CFA, we used the robust weighted least square estimator with a χ2 adjusted for mean and variance (WLSMV), a method that is preferable to others when the variables are ordinal in nature and do not follow a normal distribution, and there are indicators that show ceiling and floor effects (Finney & DiStefano, 2013). The following goodness of fit indicators were used: (1) χ2 divided by degrees of freedom (quotients of ≤ 2.0 indicate excellent fit; higher values indicate poorer fit; Bollen, 1989); (2) the comparative fit index (CFI); (3) the Tucker-Lewis index (TLI); and (4) the root mean square error of approximation (RMSEA) and weighted root mean square residual (WRMR). CFI and TLI values greater than .90 and .95 were taken to indicate an acceptable fit and an excellent fit, respectively (Hu & Bentler, 1999). For the RMSEA, values under .08 and .06 indicate acceptable fit and appropriate fit, respectively (Hu & Bentler, 1999), and finally, WRMR values under 1 indicate a good fit (DiStefano et al., 2018).
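The cutoffs listed above can be expressed as a small labeling function. The following Python sketch simply encodes the thresholds stated in the text; the function name, the label strings and the input values are hypothetical and chosen only to exercise each branch.

```python
def describe_fit(chi2, df, cfi, tli, rmsea, wrmr):
    """Label each fit index using the cutoffs stated in the text."""

    def incremental(value):
        # CFI and TLI: > .95 excellent, > .90 acceptable.
        if value > 0.95:
            return "excellent"
        if value > 0.90:
            return "acceptable"
        return "below cutoff"

    def rmsea_label(value):
        # RMSEA: < .06 appropriate, < .08 acceptable.
        if value < 0.06:
            return "appropriate"
        if value < 0.08:
            return "acceptable"
        return "above cutoff"

    return {
        "chi2/df": "excellent" if chi2 / df <= 2.0 else "above cutoff",
        "CFI": incremental(cfi),
        "TLI": incremental(tli),
        "RMSEA": rmsea_label(rmsea),
        "WRMR": "good" if wrmr < 1.0 else "above cutoff",
    }

# Hypothetical index values (not taken from the study).
labels = describe_fit(chi2=150.0, df=100, cfi=0.96, tli=0.93, rmsea=0.07, wrmr=0.95)
print(labels)
```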

Our estimation of the reliability of the scores was based on the triphasic model proposed by Viladrich, Angulo-Brunet and Doval (2017).

In order to analyze the convergent validity of the LLQ, predictable relationships between LLQ scores and S-ATI-20 scores (Monroy et al., 2015) were examined. For the present study, the SCCC factor was used. Given that it did not fulfill the assumption of normality, the correlation coefficient was estimated from Spearman's rho.
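For reference, Spearman's rho is the Pearson correlation computed on the ranks of the two variables, with tied values receiving their average rank. The Python sketch below illustrates this definition using only the standard library; the function names and the scores are hypothetical and do not come from the study data.

```python
def average_ranks(values):
    """Rank values from 1..n, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical scores for five educators on an LLQ dimension and the SCCC scale.
llq_scores = [1, 2, 3, 4, 5]
sccc_scores = [2, 1, 4, 3, 5]
rho = spearman_rho(llq_scores, sccc_scores)
print(round(rho, 2))
```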

Finally, differences in the expression of the LLQ dimensions were studied according to gender, age and teaching experience. First, the invariance of the structure of the model obtained in the CFA was tested. Then, to analyze differences, we used the Spearman's rho correlation coefficient, the Kruskal-Wallis test and the Mann-Whitney U test.

Results

Exploratory factorial analysis

The polychoric matrix of correlations showed appropriate indicators of fit for its factorization (Bartlett's statistic = 7248.9; df = 2016; p < .0001; KMO = .902; p < .0001). Next, based on that polychoric matrix of correlations, an optimized parallel analysis was carried out in which 4 factors exceeded the percentage of variance explained by those generated randomly. As seen in Table 2, the model composed of 4 factors showed a better fit.

The results of the EFA made it clear that 5 items (8, 32, 65, 67, 68) showed a factor loading lower than .30, and these items were eliminated progressively one by one. It was also found that 11 items (4, 25, 29, 33, 48, 53, 55, 57, 59, 60, 62) showed a similar factor loading on more than one dimension, and these too were eliminated. Finally, we discarded 5 items (2, 14, 51, 52, 61) whose placement in the proposed factor had no substantive meaning. In total, the final version that resulted from these analyses comprised 43 items grouped into 4 factors: Commitment to Students and to Teaching (9 items), Classroom Environment (7 items), Methodology (18 items), and Self-Regulation (9 items), together accounting for 50.40% of common variance.
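The item counts in this section can be cross-checked arithmetically. Bartlett's test of sphericity on a correlation matrix of p variables has p(p − 1)/2 degrees of freedom, so the reported 2016 degrees of freedom correspond to 64 analyzed items, which equals the 43 retained items plus the 21 eliminated ones. A short Python check (the variable and function names are illustrative):

```python
def bartlett_df(n_items):
    """Degrees of freedom of Bartlett's test: number of distinct correlations."""
    return n_items * (n_items - 1) // 2

# Items eliminated in the EFA, as listed in the text.
removed = {
    "low_loading": [8, 32, 65, 67, 68],
    "cross_loading": [4, 25, 29, 33, 48, 53, 55, 57, 59, 60, 62],
    "no_substantive_meaning": [2, 14, 51, 52, 61],
}
n_removed = sum(len(items) for items in removed.values())

# Items retained per factor after the EFA.
retained_per_factor = {
    "Commitment to Students and to Teaching": 9,
    "Classroom Environment": 7,
    "Methodology": 18,
    "Self-Regulation": 9,
}
n_retained = sum(retained_per_factor.values())

n_analyzed = n_retained + n_removed
print(n_removed, n_retained, n_analyzed, bartlett_df(n_analyzed))
```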

Confirmatory factorial analysis

First, in order to evaluate the unidimensional structure of the LLQ, a CFA was carried out with the 43 items extracted in the EFA. This model showed the following indices of fit: χ2(819, n = 208) = 1473.5, p = .0001; χ2/df = 1.80; CFI = .895; TLI = .890; RMSEA (90% CI) = .062 (.057, .067); WRMR = 1.306. Next, the four-factor model proposed in the EFA was put to the test, and showed better fit: χ2(854, n = 208) = 1408.4, p = .0001; χ2/df = 1.65; CFI = .913; TLI = .908; RMSEA (90% CI) = .056 (.051, .061); WRMR = 1.215. Nevertheless, inappropriate items were successively eliminated, based on the modification indices obtained from the Mplus v7.4 program, in order to choose the most parsimonious model that made conceptual sense and showed optimum goodness of fit. The final result consisted of 39 items grouped into 4 factors: Factor 1, Commitment to Students and to Teaching (8 items); Factor 2, Classroom Environment (7 items); Factor 3, Methodology (15 items); and Factor 4, Self-Regulation (9 items), χ2(696, n = 208) = 992.7, p = .0001; χ2/df = 1.43; CFI = .950; TLI = .946; RMSEA (90% CI) = .045 (.039, .052); WRMR = 1.024. All factor loadings were statistically significant (p < .0001) and greater than .40 (values between .45 and .80). It must be noted that this factorial structure was found to be equivalent both in the first half of the sample, χ2(696, n = 207) = 970.9, p = .0001; χ2/df = 1.40; CFI = .953; TLI = .949; RMSEA (90% CI) = .044 (.037, .050); WRMR = 1.004, and in the total sample, χ2(696, n = 415) = 1257.3, p = .0001; χ2/df = 1.81; CFI = .951; TLI = .947; RMSEA (90% CI) = .044 (.040, .048); WRMR = 1.143.
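The χ2/df quotients reported above can be recomputed from the χ2 values and degrees of freedom given in the text. The Python sketch below does so; because the published χ2 values are rounded to one decimal place, a recomputed quotient may differ from the reported one in the last digit. The dictionary keys are descriptive labels introduced here.

```python
# (chi-square, degrees of freedom, reported chi-square/df) as given in the text.
models = {
    "one factor, 43 items (n = 208)": (1473.5, 819, 1.80),
    "four factors, 43 items (n = 208)": (1408.4, 854, 1.65),
    "four factors, 39 items (n = 208)": (992.7, 696, 1.43),
    "four factors, 39 items (n = 207)": (970.9, 696, 1.40),
    "four factors, 39 items (n = 415)": (1257.3, 696, 1.81),
}

# Recompute each quotient from the rounded chi-square value.
ratios = {name: chi2 / df for name, (chi2, df, _) in models.items()}

for name, ratio in ratios.items():
    reported = models[name][2]
    print(f"{name}: recomputed {ratio:.3f}, reported {reported:.2f}")
```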

Table 3 shows the LLQ dimensions, the items included in each dimension, and the standardized factor loadings.

Table 4 shows the means, standard deviations and Spearman's rho correlations among the different factors that comprise the measurement instrument.

Internal consistency

In the first phase, univariate distributions and the relationships among items were studied based on the model proposed by Viladrich et al. (2017). Our exploration of the data revealed ceiling effects and high kurtosis values, although the asymmetry values did not particularly stand out; the data were therefore treated as ordinal, and polychoric correlation coefficients were obtained. In the second phase, tau-equivalent measurement models and a congeneric measurement model were first specified with the CFA. Next, the parameters of the model were estimated, the goodness of fit indices were calculated, and the measurement model that showed the best fit and was also parsimonious and interpretable was chosen. We applied the WLSMV estimator using the Mplus version 7.4 program to fit the measurement models to the data. Table 5 shows the principal results of the measurement models.

As can be seen in Table 5, the fit to the model of congeneric measurements was better than that of tau-equivalent measurements. Therefore, internal consistency was estimated based on the ordinal omega coefficient (Gadermann et al., 2012). The values were .826 (.814-.838) for Commitment to Students and to Teaching, .815 (.802-.828) for Classroom Environment, .887 (.880-.894) for Methodology, and .784 (.768-.800) for Self-Regulation.
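As an illustration of how an omega coefficient relates to the parameters of a congeneric model, the Python sketch below computes omega for a single factor from standardized loadings, assuming uncorrelated errors so that each unique variance equals one minus the squared loading. For the ordinal version, the loadings would be those estimated from the polychoric correlation matrix. The function name and the loadings are hypothetical and are not the values estimated in the study.

```python
def omega_from_loadings(loadings):
    """Omega for one factor with standardized loadings and uncorrelated errors.

    omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of unique variances),
    where each unique variance is 1 - loading^2.
    """
    common = sum(loadings) ** 2
    unique = sum(1 - loading ** 2 for loading in loadings)
    return common / (common + unique)

# Hypothetical standardized loadings for an 8-item factor.
hypothetical_loadings = [0.7] * 8
omega = omega_from_loadings(hypothetical_loadings)
print(round(omega, 3))
```

Unlike coefficients that assume tau-equivalence, this expression allows each item to have a different loading, which is why it suits the congeneric model retained here.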

Relationships between LLQ dimensions and the S-ATI-20 (Monroy et al., 2015)

Moderate positive correlations were obtained between the participants' scores on the LLQ dimensions and on the SCCC dimension of the S-ATI-20 (Monroy et al., 2015). More detailed information is given in Table 6.

Differences in the expression of LLQ dimensions based on gender, age and teaching experience

Table 7 shows the goodness of fit indices of the configural invariance models based on gender, age and teaching experience.

As seen in Table 7, the indices of fit make it possible to accept the equivalence of the factorial structure obtained in the CFA for the different groups based on gender, age and teaching experience.

In order to examine whether there were differences between female and male instructors in their scores on the LLQ dimensions, we used a Mann-Whitney U test, which yielded statistically significant differences between the mean ranks for all four factors: Commitment to Students and to Teaching, U = 17771, p = .002; Classroom Environment, U = 18758.5, p = .023; Methodology, U = 18216, p = .009; and Self-Regulation, U = 17098, p = .001. The effect sizes associated with the differences in rank between female and male instructors were small in magnitude in all cases (r = .15, r = .11, r = .13 and r = .18, respectively).
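The effect sizes above are consistent with the common conversion r = |Z|/√N, where Z is the normal approximation to the U statistic. The Python sketch below applies that conversion to the reported U values with the group sizes given in the Participants section (204 women, 211 men). It assumes no correction for ties, which the text does not describe, so it is an approximation rather than a reproduction of the original computation; the function name is illustrative.

```python
import math

def mann_whitney_r(u, n1, n2):
    """Effect size r = |Z| / sqrt(N) from a Mann-Whitney U statistic.

    Z uses the normal approximation without a tie correction (an assumption).
    """
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return abs(z) / math.sqrt(n1 + n2)

# U statistics reported in the text for the four LLQ dimensions.
reported_u = {
    "Commitment to Students and to Teaching": 17771,
    "Classroom Environment": 18758.5,
    "Methodology": 18216,
    "Self-Regulation": 17098,
}
effect_sizes = {
    name: round(mann_whitney_r(u, n1=204, n2=211), 2)
    for name, u in reported_u.items()
}
print(effect_sizes)
```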

In order to determine whether there were differences in the scores on the LLQ dimensions based on age, we used the Kruskal-Wallis test, in which age group was the predictor variable and the scores on the LLQ dimensions were the criterion variable. The results showed that there were no statistically significant differences in LLQ dimension scores based on age group.

Finally, in order to verify whether teaching experience groups (0-5 years, 6-15 years, 16-25 years, more than 25 years) differed in the LLQ dimensions, the Kruskal-Wallis test was applied. The results showed that there were no statistically significant differences in the LLQ dimension scores based on teaching experience.
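For readers unfamiliar with the Kruskal-Wallis test used for the age and teaching experience comparisons, the Python sketch below computes its H statistic from pooled ranks. It omits the correction for ties and the p value (H is referred to a χ2 distribution with k − 1 degrees of freedom for k groups); the function names and the scores are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..n, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic without tie correction (an assumption)."""
    pooled = [value for group in groups for value in group]
    ranks = average_ranks(pooled)
    n = len(pooled)
    total = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)

# Hypothetical dimension scores for three experience groups.
h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(h, 2))
```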

Discussion

The purpose of the present study was to design and validate a questionnaire, the LLQ, to measure facilitative learning contexts implemented by university teaching staff to develop the learning to learn competence. For this purpose, we examined content validity, validity based on response processes, validity based on internal structure, and convergent validity.

The obtained results make it clear that the LLQ fulfills the criteria for the creation of psychometric instruments with arguments of validation, for which reason we believe that it is an appropriate tool for measuring facilitative learning contexts implemented by university teaching staff to develop the learning to learn competence. First, evidence was obtained both about content validity and about validity based on response processes. Our results yielded an LLQ composed of 39 items grouped into 4 factors: 1. Commitment to Students and to Teaching (8 items); 2. Classroom Environment (7 items); 3. Methodology (15 items); and 4. Self-Regulation (9 items). All factors showed good internal consistency indices. The convergent validity of the questionnaire was examined by calculating the correlations between the LLQ dimensions and the SCCC dimension of the S-ATI-20 (Monroy et al., 2015). This analysis provided evidence in favor of convergent validity.

Regarding differences in LLQ dimension scores based on age and teaching experience, the present results showed no differences in participants' scores on these dimensions. Thus, we can conclude that the professional competence needed to facilitate learning spaces to develop the learning to learn competence does not depend on either years of teaching experience or age. In our view, a greater ability in this competence is probably associated with dynamic and flexible variables that have an impact in general on the development of professional competences in the university context (Feixas et al., 2013; Ion & Cano, 2012; Tejada Fernández & Ruiz Bueno, 2016). Thus, on the basis of the proposal by Feixas et al. (2013), these variables could be grouped into training factors (receiving specific teacher training in that competence), environmental factors (implementing it with the support of peers, receiving supervision and institutional recognition, teaching culture of the work group), and individual factors (personal work organization). In future studies, it would be interesting to determine what types of teacher training factors are associated with greater development of the learning to learn competence in university educators.

Furthermore, it should be noted that differences were found between female and male educators in their LLQ dimension scores. Specifically, female educators showed higher scores than male educators on all LLQ dimensions. It is risky to hazard an explanation for this result given that there is no precedent whatsoever in this type of study. Nevertheless, we believe that these results may be associated with a greater presence in female instructors than in males of certain variables, such as, for example, a greater awareness of the importance of student-based teaching, having received greater specific training in the development of the learning to learn competence, and having achieved a greater transfer of that training. These are mere conjectures given that these variables were not measured for the present study. It is essential to continue advancing in this field of study and to conduct studies with larger samples in order to obtain complementary information.

Conclusion

Adaptation to the EHEA has brought with it a profound change in university education, both in degrees and in content and teaching methodology. Teaching-learning methodologies aimed at the development of competences have to do more with what the student learns than with what the instructor teaches, and are associated with greater student comprehension, motivation and participation in the learning process (Guisasola & Garmendia, 2014).

The LLQ is the first instrument in the Spanish language for evaluating the development of facilitative learning contexts in the creation of the learning to learn competence in university teaching practice, and is a tool that can be applied quickly and that is both valid and reliable. Therefore, in the framework of the EHEA, the LLQ may be of great use for university teaching staff since educators can thereby have at their fingertips an exact measurement of how they are implementing the development of the learning to learn competence in their teaching practice and thus be able to determine appropriate actions for improvement.

Furthermore, it must be kept in mind that professional teaching competences are developed dynamically throughout the individual's entire working life. Being an educator in higher education means learning to be such an educator, and most university centers have therefore opted for continuous training for the teaching profile. In this respect, the LLQ can serve to detect professional training needs on the center level in the development of this competence.

To conclude, we must point out that the present study has a number of limitations. First, this work was carried out with a sample of university educators who participated voluntarily. Therefore, it would be preferable to carry out probabilistic sampling to avoid limitations associated with the generalization of results. Second, we believe that the size of the sample, though sufficient, is not large, and it would therefore have been desirable to have used a larger sample. Third, although the present sample was heterogeneous with respect to gender, age group, studies, teaching experience and knowledge area, it must be noted that educators in the knowledge areas of Sciences and Health Sciences were underrepresented. Therefore, it would have been more appropriate to use a sample that was more representative of the stated knowledge areas. Finally, for the purpose of accumulating greater evidence in favor of the validity of the interpretation of the scores, it would be interesting to conduct comparative studies of the academic performance of students who receive instruction from educators with different levels of skill in putting into practice the facilitative learning contexts of the learning to learn competence. Likewise, keeping in mind that the natural range of application is the university teaching framework, it would be appropriate to seek information on the consequences of the application of the LLQ.
