Introduction

The outcome of interest in a biomedical or epidemiological study is often an event that can occur more than once in a subject. Therefore, identifying a statistical method suitable for studying recurrent events is of great interest to the field.

From a statistical point of view, recurrent event analysis presents two major challenges. The first is individual heterogeneity, i.e. the unmeasured effects produced by between-subject variability, presumably due to unobserved exposures. For instance, imagine that a study measuring the number of respiratory crises is not asking for smoking status. It is likely that smokers will have a different pattern from non-smokers, resulting in heterogeneity across the subjects that can’t be attributed to any known factor as smoking status was not recorded. This issue is usually tackled using frailty models, which incorporate random effect terms to account for this “extra” variability. The second problem is within-subject correlations attributable to a single subject suffering multiple episodes of the event. These correlations are especially problematic in situations complicated by event dependence, in other words, when the risk of having a new episode depends on the number of previous episodes. This is the case of the number of sick leaves suffered by workers: A history of sick leaves increases the risk of a subsequent episode. Reis et al.1 quantified the extent of this increase. If we fail to account for event dependence, our resulting estimators will be inefficient and potentially biased. As discussed in Box-Steffensmeier et al.,2 common-baseline hazard models average the effects across all events not taking strata into account, being this averages biased in a predictable direction. In cohort studies, event dependence can be controlled by using survival models with specific-baseline hazards for each episode that the subject faces.3

Amorim and Cai4 provide an excellent review of approaches to recurrent event analysis. The article describes the applicable statistical methods for epidemiological studies of recurrent events, working off of the assumption that researchers have access to all of the information required by each model. In practice, however, much of this data is typically unavailable. Specific-baseline hazard models assume that the exact number of previous episodes suffered by each subject is known, but in reality it is typically impractical to obtain an exhaustive history for each patient. This leaves us without a method to directly address event dependence. The usual practice in such cases is to fit models with a common-baseline hazard.

The aim of the present study is to assess how well these common-baseline hazard models perform when they are used to estimate the effect of multiple exposures on the hazard of presenting an episode of a recurrent event when the previous history is not taken into account.

Methods

Simulations

Example

We illustrate this work by reproducing a study from the literature5 to analyze long-term sickness absence (SA) frequency in a cohort of Dutch workers. We will use the same baseline hazard as in the Dutch study, 0.0021 per worker-week. The between-episodes hazard ratios (HR) do not correspond exactly to those of any specific study, although Reis et al.1 provide values for a wide range of SA-related diagnoses. SA is a commonly-used outcome in occupational health studies because it is considered a major economic and public health issue,6-8 resulting in a growing interest in identifying the best method to quantitatively and efficiently analyze this phenomenon.5,9-13

Generation of populations

Six different populations of 250 000 workers, each with 20 years of history, were generated using the survsim14,15 package in R 2.15.3 (R Foundation for Statistical Computing, Vienna, Austria). For each subject i, the hazard of the next episode k was simulated through an exponential distribution:

Where  , i.e. the baseline hazard for subjects exposed to episode k. The maximum number of SA episodes that a worker may suffer was not fixed, although the baseline hazard was considered constant when k≥3. X1, X2, and X3 are the three covariates that represent the exposure, with Xii∼Bernoulli (0.5). β1, β2, and β3 are the parameters of the three covariates that represent the effect, set independently of the episode k to which the worker is exposed, as: β1 = 0.25, β2 = 0.5, and β3 = 0.75 in order to represent effects of different magnitudes. νi is a random effect.

, i.e. the baseline hazard for subjects exposed to episode k. The maximum number of SA episodes that a worker may suffer was not fixed, although the baseline hazard was considered constant when k≥3. X1, X2, and X3 are the three covariates that represent the exposure, with Xii∼Bernoulli (0.5). β1, β2, and β3 are the parameters of the three covariates that represent the effect, set independently of the episode k to which the worker is exposed, as: β1 = 0.25, β2 = 0.5, and β3 = 0.75 in order to represent effects of different magnitudes. νi is a random effect.

Event dependence

Event dependence was addressed by using various values of h0k (t), specifying different β0k. Table 1 presents the specifications for the generated populations in terms of the baseline hazards by SA episode and random effects used. Table 1 also presents the HR resulting from the comparison of the baseline hazard with that of the first episode, which gives us the event dependence for the phenomenon. Note that for populations 1 and 2, the HR = 1.20 and 1.44, respectively, for the second SA episode, as well as for the third and subsequent SA episodes with respect to the first. This means that between the second and third SA episodes, the baseline hazard was also increased by a factor of 1.20. The HR = 1.50 between episodes two and three for populations 3 and 4, and 2.50 for populations 5 and 6. We chose to simulate phenomena with increasing event dependence, given that Reis et al.(1) demonstrated that the hazard always increases in the presence of previous SA.

Individual heterogeneity

Individual heterogeneity was addressed by introducing νi, the random effect. This effect was held constant over the various episodes for a given subject but varied between subjects. Specifically, we established: a) absence of any random effect (νi = 1), which leads to a perfectly specified population once the subject covariates are set, and b) individual heterogeneity, where νi ∼Gamma with mean = 1 and variance = 0.1.

Table 1 shows the simulated populations.

Cohort design

Although the populations with 20 years of history were generated, a procedure was subsequently applied to limit the effective follow-up periods to 1, 3, and 5 years, with some subjects having suffered a prior episode before the follow-up period began.

This was achieved as follows.

We selected the subjects who were either present at 15 years of follow-up or incorporated after that date. Follow-up time was then re-scaled, setting t = 0 at 15 years for subjects already present in the population and t = 0 at the beginning of the follow-up period for those incorporated later. The purpose of this procedure was to obtain a cohort in which some subjects had a work history prior to the 15-year point that included previous episodes, which were treated as unknown. The figure of 15 years was chosen as a representative length of work history for typical corporate employee. Using this subpopulation, we then generated the three sub-bases corresponding to different study end-points: at 16 years (1 year of effective follow-up, from the 15th to 16th year), at 18 years (three years of follow-up), and at 20 years (five years of follow-up).

Models

All of the models considered are non-parametric and are extensions of the Cox proportional hazards model.(16,17) For all workers, we use the real previous episodes when fitting specific-baseline models, and we completely ignore them in the common-baseline models.

Models for non-individual heterogeneity context

-

Specific-baseline hazard approach: Prentice-Williams-Peterson (PWP)

For studies of recurrent phenomena involving event dependence but not individual heterogeneity, PWP is the survival model of reference.(18) PWP addresses event dependence by stratifying according to number of previous episodes, thereby assigning a specific-baseline hazard to each potential episode. When the i-th subject is at risk of the k-th episode, the hazard function is defined as:

where Xiβ represent the vectors of covariates and the regression coefficients.

-

Common-baseline hazard approach: Andersen-Gill (AG)

AG(19) is based on counting processes and assumes that the baseline hazard is common across all episodes, independent of the number of previous episodes. It has the following hazard function:

where

and is therefore the same for all episodes, k. AG treats different episodes within a given subject as though they were independent, subsequently obtaining a robust “sandwich” estimator of the variance.20

and is therefore the same for all episodes, k. AG treats different episodes within a given subject as though they were independent, subsequently obtaining a robust “sandwich” estimator of the variance.20

Models for individual heterogeneity context

-

Specific-baseline hazard approach: Conditional Frailty Model (CFM)

When individual heterogeneity comes into play, the reference model becomes CFM.21 This model addresses individual heterogeneity by assuming a latent multiplicative effect on the hazard function:

Ui is an individual random effect which is assumed to have unit mean and finite variance, which is estimated from the data.22 Since Ui is a multiplicative effect, we can think this frailty as a representation of the cumulative effect of one or more omitted covariates.22,23 The most commonly-adopted frailty terms24-26 are E[ Ui] = 1 and V[ Ui] = θ.

-

Common-baseline hazard approach: Shared Frailty Model (SFM)

Among other applications, SFM27-29 may be used in the context of recurrent events, where within-subject episodes share a frailty term that is independent of those for other individuals. Its hazard function is:

where the baseline hazard is independent of the episode k to which the subject is exposed. Ui is parameterized as in CFM.

Model assessment criteria

The criteria used to evaluate model performance were: 1) percentage bias:  , where β is the true value for estimate of interest,

, where β is the true value for estimate of interest,  , where B is the number of simulations performed; 2) percentage mean squared error (MSE): MSE =

, where B is the number of simulations performed; 2) percentage mean squared error (MSE): MSE =  , for j = 1,…,B, where

, for j = 1,…,B, where  is the estimate of interest within each of the j = 1,…,B simulations and V

is the estimate of interest within each of the j = 1,…,B simulations and V is the variance of the estimate of interest within each simulation; 3) coverage: percentage of times that the 95% confidence interval

is the variance of the estimate of interest within each simulation; 3) coverage: percentage of times that the 95% confidence interval  includes β, for j = 1,…,B, where SE

includes β, for j = 1,…,B, where SE is the standard error of the estimate of interest within each simulation; 4) confidence intervals average length; 5) proportional hazards: Percentage of times that the assumption of proportional hazards cannot be rejected, for j = 1,…,B, according to the test proposed by Grambsch and Therneau.30

is the standard error of the estimate of interest within each simulation; 4) confidence intervals average length; 5) proportional hazards: Percentage of times that the assumption of proportional hazards cannot be rejected, for j = 1,…,B, according to the test proposed by Grambsch and Therneau.30

All models were fitted using the coxph function from the survival31 package in R.

Results

The results presented here refer only to the 5-year follow-up cohorts. Results for the cohorts with 1 and 3 years of follow-up are available as supplementary data online, but are not detailed here, as the findings were quite similar.

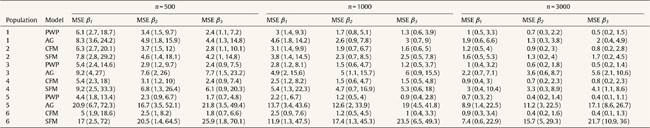

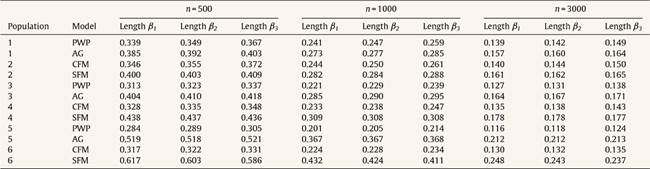

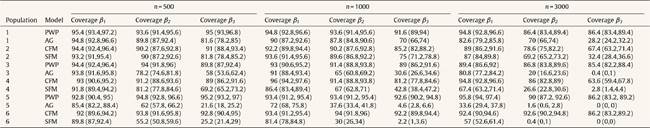

Regarding the situations with no-individual heterogeneity, we can see that the average bias in the common-baseline hazard models is 11 − 16% for population with low event dependence, rising to 42 − 51% for those with high event dependence (Table 2). In general, the bias does not change markedly in terms of the effect associated with β, sample size, or heterogeneity of the population. Higher sample size means lower MSE and, for common-baseline models, MSE increases with the exposure effect (Table 3). In terms of coverage, Table 4 shows that AG only achieves performances approaching 95% for populations with small or moderate event dependence (populations 1 and 3) and for β1 = 0.25. For the other scenarios, coverage falls notably, worsening with increasing event dependence, effect to estimate, and sample size. For example, in population 5, the 95%CI included the true parameter value for β3 in a mere 0-4.6% of samples when n = 1000 or n = 3000. As shown in Table 5, AG demonstrated overall low compliance with the assumption of proportional hazards, worsening with increasing event dependence, effect to estimate, and sample size. Compliance reached levels approaching 90% only in population 1, falling dramatically for population 5.

Table 4 Coverage: percentage of times that the true parameter value is included in the 95% confidence interval.

Table 5 Percentage of times that the assumption of proportional hazards is not rejected (95% confidence interval).

Results for heterogeneous populations present an almost identical pattern. Slight differences are observed regarding the 95%CI: SFM CI95% was generally broader (Table 6), translating into a slight rise in coverage level (Table 4).

The specific-baseline hazard approaches showed much better results than the common-baseline approaches, both in homogeneous and heterogeneous contexts. For populations free of heterogeneity, the percentage of bias remained below 10% and was generally negative, i.e. slightly underestimating the effect and coverage levels were around 85−95%. Overall, more than 90% of the simulated samples complied with the assumption of proportional hazards. In presence of individual heterogeneity, when there is low event dependence, the bias slightly falls with the increase of the effect to estimate and sample size.

Discussion

Statistical analysis of recurrent outcomes with event dependence is not trivial, as it requires methods that can account for this dependence to obtain efficient and unbiased estimates. Although including the number of previous episodes as a time-dependent covariate would address the problem,10 episode-specific hazard functions are more coherent with the nature of recurrent events. In any case, to deploy either alternative, it is necessary to know how many previous episodes each subject has had, which is often impossible. As a result, some epidemiologists often recur to a common-baseline hazard function that is independent of previous episodes. The present paper assesses how well these common-baseline hazard models perform, in comparison to some of the most common specific-baseline hazard models, when applied to situations complicated by event dependence and when the previous episodes are not taken into account.

It is worth noting that the results obtained here may be indicative of the behavior of phenomena with “positive” event dependence (risk of presenting a new episode increases in function of the number of previous episodes), not when event dependence is “negative” (which in our opinion is much less common in the study of public health phenomena). Similarly, the magnitude of the bias, coverage levels, etc., depends on other specific aspects of each study, as the intensity of the event dependence, sample size, etc.

It is important to highlight that there were almost no differences between the pattern of behavior of common-baseline approach versus specific-baseline approach, in heterogeneous or homogenous populations in terms of bias, coverage, or compliance with the proportional hazards assumption.

The performance of the common-baseline approaches worsened as event dependence increased, producing lower coverage and increasingly overestimating the effect. Subjects in the previously-exposed group had more event occurrences and therefore more recurrent episodes, and they suffered these episodes earlier than subjects in the non-exposed group. Thus, the exposed subjects arrived at a higher baseline hazard sooner and in greater numbers. This means that if specific-baseline hazards are not used, the increased baseline hazard would be largely attributable to the exposed group.

As the effect to be estimated increases, performance of models with common-baseline hazard worsens. The explanation is similar to the one above: the larger the effect, the greater the difference in risk between subjects in exposed and non-exposed groups; hence, the numbers and recurrence rates among exposed subjects become progressively greater compared to those of the unexposed subjects. Thus, as in the case of event dependence, the baseline hazard effect is disproportionally attributable to exposure.

For these models, coverage is affected by sample size, worsening as sample size increases. Clearly this is a spurious relationship; what really happens is that larger sample sizes provide greater precision, but since the estimates obtained are biased, greater precision means poorer coverage.32

As expected, PWP was clearly superior to AG in situations complicated by event dependence. Even so, coverage and compliance with the proportional hazards assumption remained unacceptably low in the face of significant event dependence and large effects to be estimated. Note, however, that our results show that PWP overall tends to slightly underestimate the value of β. This is probably because the upper strata, representing subjects with greater numbers of recurrences, concentrate members of the exposed group. Further studies to investigate the best strategy to use in the upper strata would be helpful. In order to keep all episodes in the analysis, we pooled all episodes beyond the second recurrence. It would be interesting to see whether “truncating” the number of episodes or, alternatively, not grouping them together at all, would improve performance. The first option has the disadvantage of eliminating some episodes, whereas the second produces strata with very few subjects and consequently unstable estimates.27 All the above comments are also valid for CFM. On the other hand, Torá-Rocamora et al.13 show that fitting the CFM when dealing with very large datasets may require high computing times. In this case, a suitable alternative could be the conditional frailty Poisson model which produces similar results but decreases the time substantially. We should also mention that the approaches presented in this paper are not the only ones that could be used for the analysis of recurrent events. Alternatives include multilevel mixed effects survival parametric models33, flexible parametric34 or multistate models.35

In summary, information about previous episodes is fundamental for sound analysis of recurrent events, but the required data is not always available. All the common-baseline hazard models that we evaluated performed almost equally poorly, making it impossible to recommend one over another. The one exception in which a common-baseline hazard model may be a reasonable option for event-dependent analysis is a situation in which the level of event dependence is very low and the effect to be estimated is small. Although this estimate would still be somewhat biased, coverage and compliance with the proportional hazards assumption might be within the realm of acceptability. In other situations, these models are clearly inappropriate, producing low coverage, low or extremely low compliance with the proportional hazards assumption, and blatant overestimation of the effect of exposure. In practice, the magnitude of this problem may even be greater. Reis et al.1 showed that event dependence for SA is often higher than the figures used in our simulations, meaning that the common-baseline hazards models would perform even more poorly. The authors showed, for example, that the HR for the second and third episodes of sick leave due to mental and behavioral disorders were 9.52 and 20.26, respectively, with respect to the first episode.

From this paper we may derive two main conclusions: first, availability of the history of previous episodes per subject is very important and therefore, an effort to this purpose should be made in the fieldwork; second, if we don’t have this information, it is important to find valid alternatives to tackle analyses of this type. One option that we consider worth investigating is imputing the number of previous episodes, which would allow for the use of models with specific-hazard functions.