Introduction

Situational Judgment Tests as Measures of 21st Century Skills: Evidence across Europe and Latin America

For several decades, various educational and (non)profit organizations around the globe have compiled lists of skills needed for the next generation to survive in an ever-changing, turbulent, and complex world. Although the final lists of these large-scale international efforts often differ in their name (“survival skills”, “21st century skills”) and content, they all share the characteristic that the skills identified go beyond technical and functional aptitude. The most common examples of such 21st century skills are, therefore, collaboration and teamwork, creativity and imagination, critical thinking, and problem solving (see, for overviews, Binkley et al., 2012; Geisinger, 2016).

Besides identifying the list of 21st century skills, an equally important issue deals with how these skills are best measured. Specifically, challenges deal with using a methodology that does not lead to biases and that enables comparing the results obtained across the various geographical regions. Along these lines, it is of pivotal importance that measurement effects do not cloud the standing of the regions on the 21st century skills (constructs). In the past, self-reports were typically used for determining people’s standing on each of the skills. However, the self-report methodology suffers from various pitfalls. One drawback is that self-reports assume people possess the necessary self-insight to rate themselves on each of the statements that operationalize the 21st century skills. Another drawback is that people tend to engage in response distortion in that they might overstate how they score on the statements (socially desirable responding). Other documented limitations relate to response style bias (extreme responding that differs across groups, such as different cultures; e.g., Hui & Triandis, 1989; Johnson, Kulesa, Cho, & Shavitt, 2005) or reference group bias (responding that is dependent on the chosen group of reference, such as one’s own cultural group; e.g., Heine, Lehman, Peng, & Greenholtz, 2002).

These limitations have resulted in the search for other methods for measuring 21st century skills (Kyllonen, 2012; see also Ainley, Fraillon, Schulz, & Gebhardt, 2016; Care, Scoular, & Griffin, 2016; Ercikan & Oliveri, 2016; Greiff & Kyllonen, 2016; Herde, Wüstenberg, & Greiff, 2016; Lucas, 2016). In PISA (OECD, 2014), three such approaches were suggested (for a summary, see Kyllonen, 2012). The first method dealt with the use of anchoring vignette items. Anchoring vignette items first ask respondents to evaluate several other targets on a specific target construct. Only afterwards does the respondent provide a self-rating on the target construct. The respondent’s self-rating is then rescaled based upon the evaluation standards that are extracted from the other ratings (e.g., Hopkins & King, 2010). As a second approach, forced-choice methods were proposed. Forced-choice items do not ask respondents to evaluate isolated statements about themselves on a Likert scale. Instead, they confront respondents with a choice between options that are intended to be of similar social desirability. Recent research attested to the broad applicability of forced-choice items (Brown & Maydeu-Olivares, 2011; Stark, Chernyshenko, & Drasgow, 2004). Third, situational judgment tests (SJTs) were proposed. SJTs confront respondents with multiple, domain-relevant situations and ask them to choose from a set of predefined responses (Motowidlo, Dunnette, & Carter, 1990).

Importantly, these approaches aim to alleviate the limitations inherent in the typical self-report inventories, while at the same time ensuring that the average ratings on the 21st century skills can be compared across geographical regions. Note that SJTs do not actually measure 21st century skills. Instead, SJTs assess people’s procedural knowledge (“knowing what to do and how to do it”) of engaging in behavior that operationalizes a given 21st century skill (Lievens, 2017; Lievens & Motowidlo, 2016; Motowidlo & Beier, 2010; Motowidlo, Hooper, & Jackson, 2006).

In this study, we focus on the use of SJTs as measures of 21st century skills. Our objectives are twofold. First, we outline how a combined emic-etic approach can be used for developing SJT items that can be used across geographical regions. Second, we investigate whether SJT scores derived from a SJT that was developed in line with a combined emic-etic approach can indeed be compared across regions. We do so by conducting analyses of measurement invariance across regions of Europe and Latin America. Analyses of measurement invariance reveal whether different (regional or cultural) groups interpret test items in the same way and attribute the same meaning to them. Therefore, analyses of measurement invariance are crucial to disentangle measurement effects from true score differences between (regional or cultural) groups (Cheung & Rensvold, 2002; Vandenberg & Lance, 2000).

Our study is situated in an educational context. We use the data from Laureate International Universities, which is a global network of universities that, at the time of the study, operated in 25 countries and had over one million students globally. Similar to the efforts described above, Laureate International Universities started in 2015 to identify, define, and measure foundational competencies and behavioral skills required by graduating students to be successful in entry-level professional jobs across industries and geographical regions. SJT items were also developed to assess those foundational competencies. On the basis of the SJT scores, students receive feedback regarding their strengths and weaknesses as well as actionable tips to help them improve. It is also important that regions can be compared on their average standing on the various competencies.

The structure of this paper is as follows: First, we briefly define SJTs and illustrate their most important characteristics. Second, we explain why these special characteristics of SJTs may pose problems for measurements across geographical regions. Third, we describe how a combined emic-etic approach of test development might serve to limit these problems. Fourth, we provide an empirical test of the combined emic-etic approach to develop SJTs to measure 21st century skills across geographical regions of Europe and Latin America. Fifth, we discuss our results and implications for further research and practice.

Study Background

SJTs: Definition, Characteristics, and Brief History

In SJTs, candidates are presented with short domain-relevant situational descriptions and various response options to deal with the situations. Upon reading the short situational descriptions, candidates are asked to pick one response option from a list, rank the response options (“What would you prefer doing most, least?”), or rate the effectiveness of these options (Motowidlo et al., 1990). Most SJTs still take the form of a written test because the scenarios are presented in a written format. In video-based or multimedia SJTs, a number of video scenarios describing a person handling a critical situation is developed (McHenry & Schmitt, 1994). Recently, organizations are also exploring 2D-animated, 3D-animated, and even avatar-based SJTs (see, for an overview, Weekley, Hawkes, Guenole, & Ployhart, 2015).

SJTs are not new inventions. Early SJT versions go back to before WWII. In 1990, Motowidlo and colleagues reinvigorated interest in SJTs. Since then, SJTs have become attractive selection instruments for practitioners who are looking for cost-effective instruments. As compared to other sample-based predictors, SJTs might be easily deployed via the internet in a global context due to their efficient administration (Ployhart, Weekley, Holtz, & Kemp, 2003). Moreover, in domestic employment contexts, SJTs have demonstrated adequate criterion-related and incremental validity and potential to reduce adverse impact (Christian, Edwards, & Bradley, 2010; McDaniel, Hartman, Whetzel, & Grubb III, 2007; McDaniel, Morgeson, Finnegan, Campion, & Braverman, 2001).

SJTs in an International Context: Potential Problems

Although SJTs have been advanced as an alternative method for assessing 21st century skills across geographical regions, such an outcome is far from assured. For example, Ployhart and Weekley (2006) mentioned the following key challenge: “it is incumbent on researchers to identify the cross-cultural generalizability – and limits – of SJTs... One might ask whether it is possible to create a SJT that generalizes across cultures. Given the highly contextual nature of SJTs, that poses a very interesting question.” (p. 349). Indeed, SJT items are directly developed or sampled from the criterion behaviors that the test is designed to predict (Chan & Schmitt, 2002). Therefore, SJT items are highly contextualized because the situations are embedded in a particular context or situation that is representative of future tasks.

Lievens (2006) reviewed prior research on SJTs in a cross-cultural context and also identified SJT item characteristics that might affect the cross-cultural use of SJTs (see also Lievens et al., 2015). The contextualized nature of SJT items makes them particularly prone to cultural differences because the culture wherein one lives acts like a lens, guiding the interpretation of events and defining appropriate behaviors (Heine & Buchtel, 2009; Lytle, Brett, Barsness, Tinsley, & Janssens, 1995). This contextualized nature of SJTs might create boundary conditions for the use across geographical regions in at least four ways (Lievens, 2006). First, the contextualization in SJTs is shown in the kind of problem situations (i.e., the item stems) that are presented to candidates. When SJTs are used in an international context, the issue then becomes whether there are differences in terms of the situations (critical incidents) generated across regions. Some situations will simply not be relevant in one region, whereas they might be very relevant in another region (e.g., differences in organizing meetings across countries). Second, similar differences might occur on the level of how to react to the problem situation. That is, some response options might be relevant in one region, whereas they might not occur in another region. The meeting example can again be used here, with openly not agreeing with the boss being an unrealistic response option in some regions. Third, the effectiveness (scoring) of response options might vary across regions. Along these lines, Nishii, Ployhart, Sacco, Wiechmann, and Rogg (2001) stated: “if a scoring key for a SJT is developed in one country and is based on certain cultural assumptions of appropriate or desirable behavior, then people from countries with different cultural assumptions may score lower on these tests. Yet these lower scores would not be indicative of what is considered appropriate or desirable response behavior in those countries”. Fourth, the item-construct linkages might differ across regions. That is, a specific response option might be an indicator of a given construct in one region but an indicator of another construct in another region. For example, to decline a task assignment from a supervisor because of time constraints during a department meeting might indicate assertiveness or self-regulation in a culture low in power distance but might indicate impoliteness or rudeness in a culture high in power distance.

In short, these potential differences in the situations, response options, response option effectiveness, and item-construct linkages across geographical regions highlight that care should be taken to develop SJTs for measuring 21st century skills across regions. That is, strategies should be deployed for designing SJTs that alleviate these potential problems.

Strategies for SJT Design in a Cross-cultural Context: Emic, Etic, and Combined Emic-Etic Approach

In the search for strategies to deal with potential threats to the cross-cultural transportability of SJTs, it is possible to borrow valuable insights from the large body of research in cross-cultural psychology. Generally, three possible approaches can be adopted for developing global (selection) instruments, namely an emic, an imposed etic, and a combined emic-etic approach (Berry, 1969, 1990; Headland, Pike, & Harris, 1990; Leong, Leung, & Cheung, 2010; Morris, Leung, Ames, & Lickel, 1999; Pike, 1967; Yang, 2000).

An indigenous or emic approach posits that tests should be developed and validated with one’s own culture as the point of reference. In the context of SJTs, an example is the study of Chan and Schmitt (2002). They developed an SJT for civil service positions in Singapore. This implied that the job analysis, the collection of situations, the derivation of response alternatives, the development of the scoring key, and the validation took place in Singapore. Chan and Schmitt (2002) found that in Singapore the SJT was a valid predictor for overall performance and had incremental validity over cognitive ability, personality, and job experience. This corresponds to the meta-analytic validity research base in the United States (Christian et al., 2010; McDaniel et al., 2007; McDaniel et al., 2001).

In this example, the development of the SJT ensured that the job-relevant scenarios were derived from input of local subject matter experts. However, there are also drawbacks in the emic approach. As an indigenous approach implies the use of different instruments for different countries, it is a costly and time-consuming strategy. In addition, a challenge for the country-specific emic approach is to contribute to the cumulative knowledge in a specific domain that typically centers around generalizable concepts (Leong et al., 2010; Morris et al., 1999).

Contrary to the emic approach, the imposed etic approach assumes that the same instrument can be applied universally across different cultures (Berry, 1969; Church & Lonner, 1998). So, according to the imposed etic approach, a selection procedure developed in a given country can be exported for use in other countries when guidelines for test translation and adaptation are taken into consideration (International Test Commission, 2001). Hence, the imposed etic approach represents an efficient strategy for cross-cultural assessment. However, the imposed etic approach is also not without limitations. Even when tests are appropriately translated and adapted, the test content of the transported instruments might reflect predominantly the culture from which the instrument is derived, thereby potentially omitting important emic aspects of the local culture (Cheung et al., 1996; Leong et al., 2010).

In light of these drawbacks, the effectiveness of the imposed etic approach for constructing international SJTs seems doubtful given the highly contextualized nature of SJT items. One study confirmed the problems inherent in using an imposed etic approach in contextualized instruments such as SJTs. Such and Schmidt (2004) validated an SJT in three countries. Results in a cross-validation sample showed that the SJT was valid in two of the three countries, namely the United Kingdom and Australia. Conversely, it was not predictive in Mexico. These results suggest that effective behavior on the SJT was mainly determined in terms of what is considered effective behavior in two countries with a similar heritage (the United Kingdom and Australia).

Another study on the cross-cultural transportability of SJTs showed that an integrity SJT developed in the US was generally applicable to a Spanish population as well (Lievens, Corstjens et al., 2015). Most of the scenarios from the American SJT were rated as realistic in the Spanish population, patterns of endorsement of the various response options were largely similar across cultures, and the American scoring scheme correlated highly with Spanish scoring schemes. Item-construct linkages also appeared to be comparable, because correlations between self-reports and SJT scores were found to be similar across cultures. In sum, evidence for the imposed etic approach for constructing international SJTs is mixed.

Yet, the emic-etic distinction should not be seen as a dichotomy. Rather, it constitutes a continuum (Church, 2001; Morris et al., 1999; Sahoo, 1993). Therefore, it is possible to combine these cultural-general and cultural-specific approaches of international test development (Leong et al., 2010; Schmit, Kihm, & Robie, 2000), resulting in the combined emic-etic approach. In such a combined emic and etic approach, the instrument is developed with cross-cultural input. In the personality domain, we are aware of two prior projects that successfully applied the combined emic-etic approach. First, in the development of the Chinese Personality Assessment Inventory (CPAI; Cheung, Cheung et al., 2008; Cheung, Fan, Cheung, & Leung, 2008; Cheung et al., 1996) descriptions of personality were extracted from multiple sources (e.g., proverbs, everyday life, etc.) to identify personality constructs relevant to the Chinese culture. These local expressions were then compared to translations of imported measures of similar constructs. Large-scale tests of the inventory in China showed that there was substantial overlap between the CPAI and the Big Five, although there were also unique features (i.e., the interpersonal relatedness factor). As a second illustration, Schmit et al. (2000) developed a global personality inventory. Hereby the behavioral indicators (items) of personality constructs that were written by worldwide panels of local experts varied, while the broader underlying constructs were similar across countries. Construct-related validity studies provided support for the same underlying structure of the global personality inventory across countries.

So, as a result of a combined emic-etic approach, both universal and indigenous constructs are incorporated: the inclusion of culture-specific concepts produces within-culture relevance, while the measurement of universal concepts allows cross-cultural comparisons (Cheung et al., 1996). The combined emic-etic approach also makes it possible to expand the interpretation of indigenous constructs in a broader cultural context.

In sum, prior studies have developed and used SJTs in various geographical regions. However, many applications were within-country examinations that attest to an indigenous (culture-specific/emic) approach. One study (Such & Schmidt, 2004) applied an imposed etic approach with the SJT not being valid in some countries. To avoid these problems, the combined emic-etic approach might serve as a potentially viable strategy for constructing sample-based selection procedures such as SJTs for use in cross-cultural applications. So far, no empirical studies have used or tested this combined emic-etic approach in sample-based selection procedures such as SJTs. This study starts to fill this key research and practice gap by using a combined emic-etic approach for constructing an SJT for assessing 21st century skills across geographical regions.

Method

Development and Validation of a Global Competency Framework

Laureate International Universities developed and validated a comprehensive framework of competencies that are required by graduating students to be successful in the workplace across geographical regions, industries, and jobs. In line with the combined emic-etic approach, cross-regional input was gained across all developmental steps to ensure that the competency framework was relevant across regions and cultures.

The development of the competency framework was based on various sources of information. These included best practices in competency modeling (Campion et al., 2011; Kurz & Bartram, 2002), content of competency frameworks from academic institutions and professional companies (e.g., Getha-Taylor, Hummert, Nalbandian, & Silvia, 2013; Lee, 2009; Lunev, Petrova, & Zaripova, 2013), internal research conducted by several institutions in the Laureate network, and data from various research partners. A draft competency framework was developed by integrating information from these sources and utilizing competency names and definitions from the SHL Universal Competency Framework (Bartram, 2012).

To ensure that the draft competency framework comprehensively covered competencies that were applicable and important across geographical regions, industries, and jobs, it was reviewed, refined, and approved by various groups. These groups included a global advisory council, consisting of eighteen members from regions represented in Laureate, two subject matter experts on competency modeling, and eighteen global focus groups that represented all regions, stakeholders (students/alumni, faculty/staff, academic leaders, and employers), and experts across disciplines. In total, the global focus groups comprised 86 participants.

Finally, two survey studies were conducted among Laureate’s stakeholders across the network to evaluate and refine the competency framework. In Survey 1, 25,202 representatives across different stakeholders, roles, disciplines, and regions confirmed the importance of the competencies for entry-level professionals. In Survey 2, 10,420 of these representatives further reviewed and confirmed the individual behaviors defined within each competency. The final competency framework includes 20 competencies. Further details about the competency framework, its development, and the global validation study are reported elsewhere (Strong, Burkholder, Solberg, Stellmack, & Presson, manuscript submitted for publication).

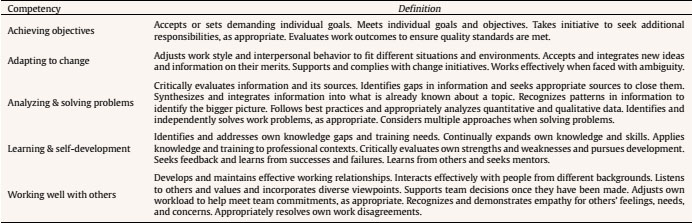

In this study, we focus on five core competencies that were identified in the global validation study as most important and critical for successful job performance of new professionals across geographical regions, industries, and jobs. These core competencies are achieving objectives, adapting to change, analyzing and solving problems, learning and self-development, and working well with others. The definitions of these competencies are provided in the Appendix.

SJT Item Design and Scoring

Analogous to the development of the competency framework, a combined emic-etic approach was applied to develop written SJT items with close-ended response format for the competencies. The development of the SJTs followed recommendations from Weekley, Ployhart, and Holtz (2006). We started with using the critical incident technique (Flanagan, 1954) to gain input for item development from subject matter experts. Given that the SJTs should assess competencies required of graduating students to be successful in the workplace, students, faculty/staff, administrators, alumni, and advisory committee members of Laureate institutions as well as employers served as subject matter experts. Representatives from these groups were invited to fill in an online survey to describe specific situations for a chosen competency, in which one student performed exceptionally well and another student performed exceptionally poorly. In total, 1,749 critical incidents were gathered from 564 respondents.

Three experienced test construction consultants drafted initial items. They compiled, reviewed and synthesized the critical incidents. Per competency, critical incidents and related examples for excellent and poor performance were converted into item stems and response options. Per item stem, five response options were generated that aimed to measure different levels of proficiency for the same competency.

Item stems and response options were written in a way to be applicable across different regions, industries, and jobs. To verify this, two global focus group panels reviewed all items and determined the scoring key. The panels consisted of 21 and 22 participants, respectively. Both panels represented similar numbers of representatives from all geographical regions, functional roles (Laureate faculty/staff and employers), and employers from different industries. Panelists reviewed items with special focus on realism and face validity of depicted situations and response options within their geographical region and field of work. Potential issues were discussed and items were adapted, if necessary.

To set the scoring key per SJT, these panelists rated the effectiveness of each response option per item stem on a five-point scale (1 = very ineffective, 5 = very effective). In line with the consensus weighting method (see Chan & Schmitt, 1997), the average ratings were used to assign each response option a score of 1 through 5 points.

The items and related response options and scoring keys were further reviewed by assessment experts and employers. In total, twelve assessment experts (two per geographical region) with advanced degrees in Industrial/Organizational Psychology or a closely related discipline reviewed all items. Assessment experts provided feedback regarding item clarity or content from their own cultural perspective. Based upon this feedback, some items were slightly modified. Assessment experts also indicated whether each item appeared to tap into the respective competency. If at least half of the assessment experts indicated that an item did not appear to capture the targeted competency, the respective item was dropped. A final panel of fourteen employers reviewed all items. Again, this panel was formed by representatives from all global regions as well as from different industries and jobs.

After final minor item modifications, each of the competency specific SJTs constructed consisted of 21 items on average. Items had a behavioral tendency response instruction (“What would you do?”). For each item stem/scenario, participants were instructed to choose a response option they would most likely do and another response option they would least likely do. Participants could receive between 1 and 5 points for each choice. Therefore, scores could vary between 2 and 10 points per scenario.
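The scoring logic described above can be illustrated with a minimal sketch. Two details are assumptions made only for this illustration and are not stated in the text: that mean panel ratings are rounded to the nearest whole point to obtain the 1 to 5 key, and that the “least likely” choice is reverse-keyed (6 minus the option’s key value) so that scenario scores range from 2 to 10. All function names and ratings are hypothetical.

```python
def build_scoring_key(panel_ratings):
    """Turn panel effectiveness ratings (1-5) into a key of 1-5 points per option.

    Assumption: the mean rating is rounded half up and clamped to 1..5.
    """
    key = {}
    for option, ratings in panel_ratings.items():
        mean_rating = sum(ratings) / len(ratings)
        points = int(mean_rating + 0.5)  # round half up (illustrative choice)
        key[option] = min(5, max(1, points))
    return key


def score_scenario(key, most_likely, least_likely):
    """Sum the points for the 'most likely' and 'least likely' choices.

    Assumption: the 'least likely' choice is reverse-keyed, so rejecting
    an ineffective option earns many points.
    """
    if most_likely == least_likely:
        raise ValueError("The two choices must be different response options.")
    return key[most_likely] + (6 - key[least_likely])


# Hypothetical ratings from three panelists for the five options of one item stem.
panel_ratings = {
    "A": [5, 4, 5],
    "B": [1, 2, 1],
    "C": [3, 3, 4],
    "D": [2, 2, 3],
    "E": [4, 4, 4],
}
key = build_scoring_key(panel_ratings)
best_case = score_scenario(key, most_likely="A", least_likely="B")
worst_case = score_scenario(key, most_likely="B", least_likely="A")
```

Under these assumptions, the best and worst possible answer patterns reproduce the 2 to 10 range per scenario reported above.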

All SJT items were translated from English into six additional languages. These additional languages were Latin American Spanish, European Spanish, Brazilian Portuguese, European Portuguese, French, and German. The rigorous translation process followed guidelines for translating tests (e.g., Van de Vijver, 2003), including repeated front and back translations by different translators.

Procedure and Sample

Laureate institutions invited their students to take part in this study to receive developmental feedback about their competency levels. The different SJTs were distributed across four different bundles that contained different competency specific SJTs. Students were invited to complete one bundle but could complete additional bundles to receive developmental feedback about further competencies. Within each bundle, students completed a random set of eight scenarios per competency specific SJT. Finally, students responded to demographic questions.

To ensure that only valid data were analyzed, we removed data for several reasons. In a limited number of 24 cases, students started the same bundle twice. To exclude biases due to retest effects regarding the same competencies or scenarios, we excluded responses from the second bundle completion. For the same reason, we removed responses of eight students from the second access to any SJT of the same competency. Given that we were interested in cross-regional comparisons, we took care that participants understood the test items well. Hence, we removed data for 87 students who indicated that they were “not comfortable” with the language in which they completed the SJTs. Further, we removed students’ responses per scenario if they were made in less than twelve seconds (internal test runs had shown it was impossible to choose both a best and worst response per scenario in less than twelve seconds). Remaining sample sizes for our five core competencies did not justify analyses for the geographical regions of Africa, Asia, Oceania, or the US. Therefore, we focused our analyses on students from Europe and Latin America.
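The exclusion rules above can be expressed as a simple filter. The sketch below is illustrative only: the record layout and the field names (`attempt`, `comfort`, `seconds`) are hypothetical and do not reflect the actual data files.

```python
MIN_SECONDS = 12  # responses faster than this are treated as invalid


def clean_responses(rows):
    """Keep only scenario responses that pass the three exclusion rules.

    Each row is a dict with hypothetical keys:
      attempt - 1 for the first access to a competency SJT, 2+ for repeats
      comfort - self-reported comfort with the test language
      seconds - response time for the scenario
    """
    kept = []
    for row in rows:
        if row["attempt"] > 1:
            continue  # retest: only the first completion is analyzed
        if row["comfort"] == "not comfortable":
            continue  # language comprehension cannot be assumed
        if row["seconds"] < MIN_SECONDS:
            continue  # too fast to have chosen both responses
        kept.append(row)
    return kept


# Hypothetical records for illustration.
rows = [
    {"student": 1, "attempt": 1, "comfort": "very comfortable", "seconds": 40},
    {"student": 1, "attempt": 2, "comfort": "very comfortable", "seconds": 35},
    {"student": 2, "attempt": 1, "comfort": "not comfortable", "seconds": 50},
    {"student": 3, "attempt": 1, "comfort": "comfortable", "seconds": 8},
    {"student": 3, "attempt": 1, "comfort": "comfortable", "seconds": 12},
]
cleaned = clean_responses(rows)
```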

After data cleaning, a total of 5,790 students (53% female) from twenty different institutions provided valid responses to the competency specific SJTs (mean age = 22.63, SD = 5.09); 64% of the students resided in Europe, 36% in Latin America. In total, students came from eighteen different countries. The majority of European students resided in Turkey (30%), Portugal (20%), or Spain (17%). The majority of Latin American students lived in Mexico (34%), Chile (22%), or Brazil (18%). Each student chose to complete the SJTs in one of seven available languages. The majority of students completed the SJTs in English (32%), Latin American Spanish (29%), or European Portuguese (13%); 74% of all students completed the SJTs in their dominant language; 72% of all students reported to be “very comfortable” with the language in which they completed the SJTs. Students completed the SJTs either during their first (52%) or last year of study (48%) at the institution; 45% completed the SJTs in a proctored setting; 58% of students reported to have already gained some professional experience; 41% already completed an internship; 16% of all participants were graduate students. Students studied across thirteen different majors (31% Business & Management, 15% Engineering and Information Technology, 14% Health Sciences).

Results

Internal Consistency Reliabilities

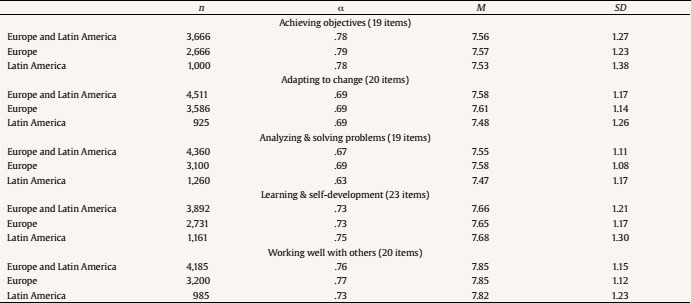

We based our analyses on SJT scenario scores as sum scores for the best and worst choice per scenario. To calculate internal consistencies for each of the five SJTs, we used the full information maximum likelihood procedure and the ML estimator in Mplus Version 7.4 (Muthén & Muthén, 1998-2015) to estimate scenario scores from missing values. Then, we used intercorrelations between scenario scores to calculate Cronbach’s alpha for our total sample. Internal consistencies of the five SJTs were moderate to acceptable for the total sample (.67-.78, see Table 1). Internal consistencies calculated separately for each region produced similar results (see Table 1).
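As a brief illustration of computing alpha from intercorrelations, the sketch below uses the correlation-based (standardized) form of Cronbach’s alpha, alpha = k * r_mean / (1 + (k - 1) * r_mean). Whether this exact form was used in the analyses is an assumption, and the correlation matrix is hypothetical rather than taken from the data.

```python
def standardized_alpha(corr):
    """Standardized Cronbach's alpha from a k x k correlation matrix.

    Uses the mean off-diagonal correlation r_mean:
        alpha = k * r_mean / (1 + (k - 1) * r_mean)
    """
    k = len(corr)
    off_diagonal = [corr[i][j] for i in range(k) for j in range(k) if i != j]
    r_mean = sum(off_diagonal) / len(off_diagonal)
    return k * r_mean / (1 + (k - 1) * r_mean)


# Hypothetical matrix: eight scenario scores, all intercorrelating at .25.
k = 8
corr = [[1.0 if i == j else 0.25 for j in range(k)] for i in range(k)]
alpha = standardized_alpha(corr)
```

With modest intercorrelations among scenario scores, as is typical for SJTs, alpha values in the range reported above are plausible even for short scales.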

Measurement Invariance across Regions

To examine measurement invariance across regions for each of the five SJTs, we first sought to establish a baseline model for the total sample, then investigated model fit for the baseline model within each region, and afterwards ran increasingly restrictive multigroup confirmatory factor analyses (e.g., Byrne & Stewart, 2006; Byrne & Van de Vijver, 2010). We conducted these analyses in Mplus via the full information maximum likelihood procedure and the ML estimator.

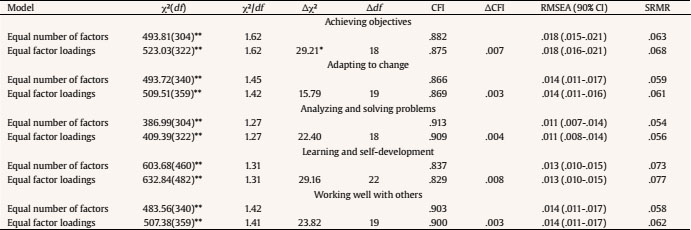

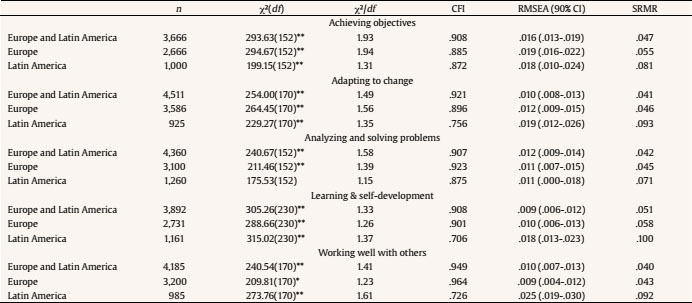

To guide the examination of a baseline model for the total sample, we hypothesized that a one-factor model would explain scenario scores for each SJT. This hypothesis was based upon the fact that all scenarios and response options for a specific SJT were developed to tap into one respective competency. For all five SJTs, a one-factor model showed good model fit (see Table 2). Thus, a one-factor model was chosen as baseline model in all of the following steps.

Table 2 Goodness-of-fit Indices for Factor Structure Models (Overall Sample and Within Regions)

*p < .05, **p < .01.

We then investigated model fit for this baseline model per region. For the SJTs of “achieving objectives” as well as “analyzing and solving problems”, model fit for the baseline model within each region was at least acceptable. For the three remaining SJTs, the CFI value for the model fit within Latin America fell below the limit of acceptable model fit. Previous studies that investigated the factor structure of SJTs frequently found similar patterns and usually failed to find good model fit (with acceptable CFI values). To analyze measurement invariance, these studies then used the best fitting model as baseline model for the multigroup confirmatory factor analyses (e.g., Krumm et al., 2015; Lievens, Sackett, Dahlke, Oostrom, & De Soete, in press). In line with this approach, we kept the one-factor model as baseline model for our measurement invariance analyses.

To investigate measurement invariance, we sought evidence for configural and metric invariance of the baseline model across regions (see the summary by Byrne & van de Vijver, 2010). First, to investigate configural measurement invariance, we restricted the number of latent factors and the number of factor loadings to be equal across both regional groups. Configural measurement invariance therefore indicates that the same factorial structure explains the observed scores across regional groups. Second, to investigate metric measurement invariance, we additionally restricted the size of the factor loadings to be equal across both regional groups. Metric measurement invariance thus suggests that observed scores are equally related to the assumed latent factor(s). In other words, metric measurement invariance indicates that the observed scores measure the latent factor(s) equally across (regional) groups (see, for example, Byrne & Stewart, 2006; Byrne & van de Vijver, 2010).
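As a sketch of the two constraint levels described above, the one-factor multigroup model can be written as follows. The notation is an assumption introduced here for illustration (it does not appear in the original analyses): $x_{jg}$ is the score on scenario $j$ in regional group $g$, $\tau_{jg}$ an intercept, $\lambda_{jg}$ a factor loading, $\xi_{g}$ the latent competency, and $\varepsilon_{jg}$ a residual.

```latex
% Hypothetical notation: j indexes scenarios, g indexes regional groups.
x_{jg} = \tau_{jg} + \lambda_{jg}\,\xi_{g} + \varepsilon_{jg},
\qquad g \in \{\text{Europe}, \text{Latin America}\}

% Configural invariance: the same one-factor pattern holds in both groups,
% while the loadings \lambda_{jg} remain free to differ across groups.

% Metric invariance additionally imposes equal loadings:
\lambda_{j,\text{Europe}} = \lambda_{j,\text{Latin America}}
\quad \text{for all scenarios } j
```

Under this reading, the metric model is nested within the configural model, which is what allows the two to be compared with a difference test.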

To examine configural and metric measurement invariance, we inspected model fit and conducted nested model comparisons by using the chi-square difference test as well as the criterion proposed by Cheung and Rensvold (2002). These authors stated that measurement equivalence can be defended in practical terms if increasingly restrictive confirmatory factor analyses are associated with only marginal drops in CFI values (∆CFI < .01; see also Byrne & Stewart, 2006). With the exception of the SJT for “achieving objectives”, chi-square difference tests were not significant for the remaining four SJTs, which provides evidence for metric measurement invariance. In addition, drops in CFI values were marginal for all five SJTs (∆CFI ≤ .008). Thus, we concluded that metric measurement equivalence could be established for all five SJTs (see Table 3). Importantly, this means that, at a practical level, differences in manifest mean scenario scores across regions can be compared.
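The two decision rules described above (the chi-square difference test and the ∆CFI criterion) can be sketched in a few lines of Python. This is a minimal illustration, not the procedure run in Mplus: the function names `chi2_sf` and `compare_nested` are hypothetical, the fit values in the usage example are made-up numbers rather than results from this study, and the plain chi-square difference is assumed to be appropriate because the ML estimator was used.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df.

    Uses the closed-form finite sums for integer degrees of freedom,
    so only the standard library is needed.
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: erfc(sqrt(x/2)) + 2*phi(chi) * sum_{r=1}^{(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1))
    chi = math.sqrt(x)
    phi = math.exp(-x / 2) / math.sqrt(2 * math.pi)  # standard normal density at chi
    term, total = 0.0, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        term = chi if r == 1 else term * x / (2 * r - 1)
        total += term
    return math.erfc(math.sqrt(x / 2)) + 2 * phi * total


def compare_nested(chisq_free, df_free, cfi_free,
                   chisq_restricted, df_restricted, cfi_restricted,
                   alpha=0.05, cfi_cutoff=0.01):
    """Compare a less restricted model (e.g., configural) with a nested,
    more restricted model (e.g., metric)."""
    delta_chisq = chisq_restricted - chisq_free
    delta_df = df_restricted - df_free
    if delta_df < 1:
        raise ValueError("the restricted model must have more degrees of freedom")
    p_value = chi2_sf(delta_chisq, delta_df)
    delta_cfi = cfi_free - cfi_restricted  # positive value = drop in CFI
    return {
        "delta_chisq": delta_chisq,
        "delta_df": delta_df,
        "p_value": p_value,
        "delta_cfi": delta_cfi,
        # A non-significant difference test supports the added constraints.
        "chisq_test_supports_invariance": p_value >= alpha,
        # Practical criterion: drop in CFI smaller than the cutoff.
        "delta_cfi_supports_invariance": delta_cfi < cfi_cutoff,
    }


if __name__ == "__main__":
    # Illustrative (invented) fit values, NOT taken from Table 3.
    result = compare_nested(chisq_free=40.0, df_free=28, cfi_free=0.960,
                            chisq_restricted=48.0, df_restricted=35, cfi_restricted=0.955)
    for key, value in result.items():
        print(key, value)
```

Returning both flags separately mirrors the situation reported above, in which the two criteria can disagree for a given SJT and the ∆CFI criterion is then used to defend invariance in practical terms.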

Discussion

Many educational and (non)profit organizations have investigated which skills or competencies are needed to face the challenges of the 21st century (Binkley et al., 2012; Geisinger, 2016). Subsequently, researchers have started to investigate how such 21st century skills can be best measured (Kyllonen, 2012). One key challenge is assessing 21st century skills without biases that may interfere with comparing results obtained across various geographical regions and cultures. This study advances our knowledge about appropriate assessment approaches for 21st century skills by outlining how the combined emic-etic approach enables developing SJTs that tap into 21st century skills across regional groups. To this end, we investigated measurement invariance across Europe and Latin America for five different SJTs, each of which assessed a core competency for graduating students to be successful in entry-level jobs.

Our results showed that configural and metric measurement invariance could be established across Europe and Latin America for all of the five SJTs. Thus, the same factorial structure explained SJT scenario scores across these regional groups and SJT scenario scores measured the latent factor(s) equally across those regional groups (see, for example, Byrne & Stewart, 2006; Byrne & van de Vijver, 2010). In other words, participants from Europe and Latin America interpreted the SJT scenarios and response options in the same way and attributed the same meaning to them. This is a fundamental precondition to rule out measurement effects and to investigate mean differences across (regional) groups (Cheung & Rensvold, 2002; Vandenberg & Lance, 2000).

Our results advance knowledge about the use of SJTs across geographical regions and cultures. Given SJTs’ highly contextualized nature, comparing SJT scores across regions and cultures is viewed as a crucial challenge (e.g., Lievens, 2006; Ployhart & Weekley, 2006). Previous cross-cultural investigations of SJTs also showed mixed results when the SJT development followed an imposed etic approach and did not include cross-regional/cultural input across all steps of SJT development (Lievens, Corstjens, et al., 2015; Such & Schmidt, 2004). However, as we demonstrated, integrating subject matter experts from different regions and cultures during the definition of the construct of measurement, the sampling of critical incidents, scenario writing, generation of response options, and setting the scoring key provides the foundation for SJTs to work well and be transportable across regions/cultures.

Although a combined emic-etic approach is time- and resource-intensive, it seems to pay off in terms of the cross-cultural application of assessment methods. Our work therefore attests to the success of relying on a combined emic-etic approach and extends similarly positive findings from research on the cross-cultural transportability of personality inventories (Cheung, Cheung et al., 2008; Cheung, Fan et al., 2008; Cheung et al., 1996; Schmit et al., 2000). To the best of our knowledge, this study is the first to apply a combined emic-etic approach to SJT development and to investigate its effects on measurement invariance across geographical regions. Our general recommendation is that the combined emic-etic approach serves as a viable strategy to develop SJTs for assessing 21st century skills across geographical regions.

Some caveats are in order, though. First, traditional, written SJTs with close-ended response formats do not measure behavior related to 21st century skills. Instead, they capture people’s procedural knowledge about engaging in behavior related to these skills (Lievens, 2017; Lievens & Motowidlo, 2016; Motowidlo & Beier, 2010; Motowidlo et al., 2006). Recent research has explored SJTs with other stimulus and response formats, such as constructed response multimedia tests. These tests present short video clips of situations to participants, who then have to display their behavioral response in front of a webcam. Evaluations of these constructed responses have been shown to be valid indicators of job and training performance (Cucina et al., 2015; De Soete, Lievens, Oostrom, & Westerveld, 2013; Herde & Lievens, 2018; Lievens, De Corte, & Westerveld, 2015; Lievens & Sackett, 2017; Lievens et al., in press; Oostrom, Born, Serlie, & van der Molen, 2010, 2011). Although constructed response multimedia tests add costs to SJT development (i.e., design of video clips and evaluation of participants’ behavioral responses), they might complement current approaches to the assessment of 21st century skills. Given their dynamic audiovisual stimulus format and their audiovisual constructed response format, constructed response multimedia tests are even more contextualized than written, close-ended SJTs. Future research should therefore investigate whether constructed response multimedia tests developed according to a combined emic-etic approach also produce scores of 21st century skills that can be compared across regions and cultures.

As another limitation, we had data for only two geographical regions (Europe and Latin America). That said, this sample incorporated participants from eighteen different countries and thus reflects considerable cultural diversity. Nonetheless, further empirical research is necessary to replicate our results and examine the comparability of scores derived from SJTs across other geographical regions and cultures.

Conclusion

In sum, this paper is the first to investigate the combined emic-etic approach for developing SJTs whose scores can be compared across geographical regions and cultures. Our results established metric measurement invariance across five SJTs for participants from Europe and Latin America. Hence, this study attests to the potential of the combined emic-etic approach. We therefore encourage researchers and practitioners to adopt this approach in cross-cultural research and practice for assessing 21st century skills.