
Escritos de Psicología (Internet)

Online version ISSN 1989-3809; print version ISSN 1138-2635

Escritos de Psicología vol.10 no.2 Málaga May/Aug. 2017

https://dx.doi.org/10.5231/psy.writ.2017.14032

The Student Satisfaction with Educational Podcasts Questionnaire

Cuestionario de satisfacción con podcasts educativos

Rafael Alarcón, Rebecca Bendayan & María J. Blanca

Department of Psychobiology and Methodology of Behavioural Sciences. University of Málaga. Spain

The research reported in this publication was supported by a Teaching Innovation Project "Design of podcasts in Research Methods and Statistics" (PIE10-002), funded by the University of Malaga.

ABSTRACT

Student satisfaction with podcasts is frequently used as an indicator of the effectiveness of educational podcasting. This aspect has usually been assessed through surveys or interviews in descriptive studies, but no standard questionnaire exists that can be used to compare results. The main aim of this study was to present the Student Satisfaction with Educational Podcasts Questionnaire (SSEPQ). The SSEPQ consists of 10 items scored on a Likert-type scale, which address students' opinions on the content adequacy, ease of use and usefulness of podcasts and on their benefits for learning. Students assessed 3-5 minute podcasts used as a supplementary tool in the research methods and statistics course of their undergraduate psychology degree. Confirmatory factor analysis with cross-validation showed a one-factor structure, supporting the use of the total score as a global index of student satisfaction with podcasts. The results suggest a high level of satisfaction with podcasts as a tool to improve learning. The questionnaire is a brief and simple tool that can provide lecturers with direct feedback from their students and may prove useful in improving the teaching-learning process.

Key words: Podcast, M-learning, Questionnaire, Higher education.

RESUMEN (Spanish-language abstract)

Student satisfaction with podcasts is an indicator of the effectiveness of educational podcasts. This aspect has generally been assessed through surveys or interviews in descriptive studies, but there is still no standard questionnaire that can be used in comparative studies. The aim of this work is to present the Student Satisfaction with Educational Podcasts Questionnaire (SSEPQ), which consists of 10 items that assess students' perceptions of the content adequacy, ease of use, usefulness and learning benefits of podcasts. Students assessed podcasts designed as supplementary material for the Research Methods and Statistics course of the Degree in Psychology. Confirmatory factor analysis revealed a one-factor structure, whose total score serves as a global index of satisfaction with the podcasts. The results provide evidence of a high level of satisfaction with podcasts as a resource for improving learning. The SSEPQ is a brief and simple questionnaire that can be useful to teaching staff, providing information on students' perceptions of the use of these resources in higher education in order to improve the teaching-learning process.

Key words (Spanish version): Podcast, M-learning, Questionnaire, Higher education.

Introduction

Over the last few years, podcasts have come into wide use in higher education. A podcast is an audio and/or video media file that can be downloaded automatically from the web to devices such as smartphones, PCs or mp3 players (O'Bannon, Lubke, Beard, & Britt, 2011; Román & Solano, 2010), and it can therefore be used to communicate a wide variety of messages (Heilesen, 2010). Because podcasts can be used without restrictions of time or place, and are quickly and freely available on most portable devices, they are a useful tool for enhancing cooperative and self-directed learning (Evans, 2008; Heilesen, 2010; Hill & Nelson, 2011; Reychav & Wu, 2015).

In higher education, podcasts have been used in a variety of ways (Carvalho, Aguiar, & Maciel, 2009; McGarr, 2009; Popova & Edirisingha, 2010; Van Zanten, Somogyi, & Curro, 2012; Vogele & Gard, 2006). McGarr (2009) outlined three broad categories along a continuum from the direct use of educational podcasts for teaching and learning to the provision of learning content: substitutional use, in place of traditional lectures; creative use, whereby students have to construct the podcast themselves; and supplementary use to provide any additional material that assists learning. In the latter category, the short 3-5 minute podcast is becoming very popular as a way of summarizing a lecture or presenting basic concepts (Abdous, Facer, & Yen, 2012; Lee & Chan, 2007; Van Zanten et al., 2012).

Experiences with different uses of podcasting in higher education have been reported from across the world and in numerous scientific fields. Examples of applications can be found in law (Wieling & Hofman, 2010), music (Bolden & Nahachewsky, 2015), chemistry (Pegrum, Bartle, & Longnecker, 2015), mathematics (Kay & Kletskin, 2012), biology (Montealegre, Carvajal, Holguín, Pedraza, & Jaramillo, 2010), physiology (Abt & Barry, 2007), medicine (Narula, Ahmed, & Rudkowski, 2012), psychology (Alonso-Arbiol, 2009), nursing (Burke & Cody, 2014), marketing (Van Zanten et al., 2012), business and management (Evans, 2008), economics (Vajoczki, Watt, Marquis, & Holshausen, 2010) and foreign language learning (Abdous et al., 2012). One question that often arises from these experiences concerns the benefits to students of using podcasts and the effectiveness of podcasts as a learning tool. Vajoczki et al. (2010) established four main indicators for assessing the effectiveness of podcasts: student satisfaction, educational outcomes, instructor satisfaction and financial feasibility. The present paper is concerned with the first indicator.

Student satisfaction refers to the "favorability of a student's subjective evaluation of the various outcomes and experiences associated with education" (Elliott & Shin, 2002, p. 198). With respect to podcasts, Vajoczki et al. (2010) point out that student satisfaction has been explored by asking students about the ease of use, usefulness and learning benefits of podcasts. The empirical evidence indicates that students like podcasts (Taylor & Clark, 2010), are satisfied with this tool (Lakhal, Khechine, & Pascot, 2007; Traphagan, Kusera, & Kishi, 2010), and find podcasts easy to follow (Kay & Kletskin, 2012; Vajoczki et al., 2010), intellectually stimulating (Fernández, Simo, & Sallan, 2009), motivating for their studies (Bolliger, Supanakorn, & Boggs, 2010; Hill & Nelson, 2011) and useful for increasing their understanding or reinforcing concepts (Clark et al., 2007; Evans, 2008; Kay & Kletskin, 2012; Montealegre et al., 2010; Popova, Kirschner, & Joiner, 2014; Vajoczki et al., 2010; Van Zanten et al., 2012). Students also report that podcasts help them improve their study habits by promoting greater independence and self-reflection (Leijen, Lam, Wildschut, Simons, & Admiraal, 2009). These findings derive from survey questions or interviews conducted as part of descriptive studies using a variety of instruments (Heilesen, 2010), but there is as yet no standard questionnaire that can be used to compare results.

In this context, the main purpose of this paper is to present a brief, simple and standard questionnaire for assessing student satisfaction with educational podcasts in higher education: the Student Satisfaction with Educational Podcasts Questionnaire (SSEPQ). The podcasts used in the study were 3-5 minutes long and served to review basic concepts and provide supplementary material for the Research Methods and Statistics course of the Degree in Psychology. Confirmatory factor analysis with cross-validation was used to assess the factor structure of the questionnaire. The corrected item-total correlations and the internal consistency were also computed.

Method

Participants

Participants were 376 students (292 women and 84 men) with an average age of 19.88 years (SD = 3.81) who were enrolled in the Research Methods and Statistics course that forms part of the first year of the Degree in Psychology offered by the University of Malaga (Spain). All of them used the podcasts at least once during the academic year: 93.4% accessed them on a computer, 2.9% on a phone and the remaining 3.7% on an iPad or tablet; in terms of location, 83% watched the podcasts at home, 9.8% at university and the remaining 7.2% elsewhere.

Instruments

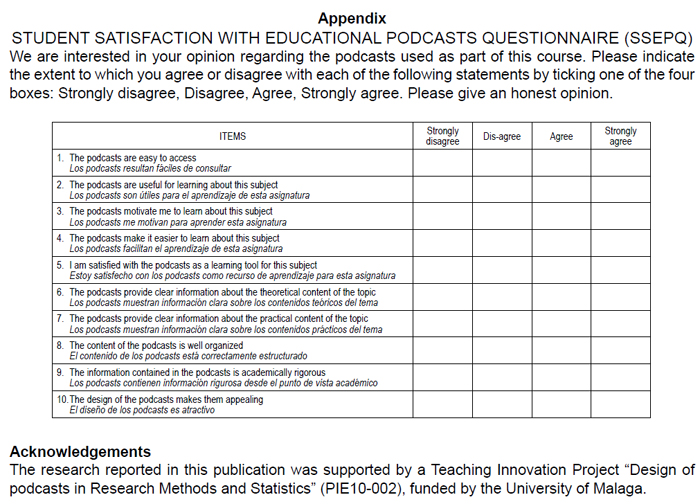

The Student Satisfaction with Educational Podcasts Questionnaire (SSEPQ). The questionnaire was developed to assess student satisfaction with educational podcasts in higher education. The SSEPQ consists of 10 Likert-type items with four response options (see Appendix). The items assess satisfaction in terms of the perceived content adequacy of the podcast (e.g., whether the content is well organized and whether the podcast provides clear information about the topic), its ease of use, its usefulness and its benefits to learning (e.g., whether using the podcast makes the learning process easier or increases students' motivation). The items were drafted and discussed with a panel of experts who were involved in teaching innovation projects and had experience of teaching, or supporting teaching, in undergraduate research methods courses. We expected the SSEPQ to show a one-factor structure, such that the total score on the questionnaire would provide a general index of students' satisfaction with podcasts.
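The expected one-factor structure can be written in standard common-factor notation. The symbols below are ours, not the article's, and the sketch assumes the usual parameterization for analyses of polychoric correlations: each item's ordinal response reflects a standardized latent response variable $x_j^*$, the factor variance is fixed at 1, and the error terms are uncorrelated with the factor and with one another.

```latex
\begin{aligned}
x_j^{*} &= \lambda_j\,\eta + \varepsilon_j, \qquad j = 1, \dots, 10, \qquad \operatorname{Var}(\eta) = 1,\\
\operatorname{Var}(\varepsilon_j) &= 1 - \lambda_j^{2}, \qquad \rho_{jk} = \lambda_j \lambda_k \quad (j \neq k),\\
\mathit{df} &= \frac{p(p-1)}{2} - p = \frac{p(p-3)}{2} = \frac{10 \cdot 7}{2} = 35 \qquad (p = 10).
\end{aligned}
```

Under this model every one of the 45 inter-item correlations is reproduced by the product of two loadings, so the 10 loadings are the only free parameters; the degrees of freedom are the 45 distinct correlations minus those 10 loadings.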

Podcasts. The podcasts assessed in this study were 11 educational podcasts designed by the authors for the Research Methods and Statistics course of the Degree in Psychology to provide additional material to assist learning; they therefore fall into the category of supplementary-use podcasts. Each podcast lasted between 3 and 5 minutes and was produced in an audio-visual format using Microsoft PowerPoint, Audacity® and Camtasia® software. Each podcast presented a key course topic related to the theoretical and practical aspects of statistical data analysis (e.g., introduction to statistical hypothesis testing, Type I and Type II errors, Student's t-test for independent samples, the Mann-Whitney test, correlation). The podcasts began with an introduction to the topic, followed by a short summary of the concept and a step-by-step guide to performing and interpreting the statistical analysis of data using the IBM SPSS software package. Technical aspects were tested to ensure the quality of the video and audio. The podcasts were made available via the virtual campus platform, and students could access them freely throughout the academic year.

Procedure

The SSEPQ was administered to students on the last day of the Research Methods and Statistics course. Participants were asked to indicate their ID number, age and sex, but were told that their data would be analysed anonymously by researchers who were not involved in teaching the course.

Data Analysis

In order to assess the expected one-factor structure of the SSEPQ, a confirmatory factor analysis (CFA) was carried out via structural equation modelling using the EQS 6.3 software package (Bentler, 2006). A cross-validation strategy was employed in which the sample was split randomly into two groups: a calibration sample (N = 177) and a validation sample (N = 199). The one-factor structure was fitted in both samples to provide further evidence of construct validity and to verify the structural model underlying the SSEPQ. Finally, the structure was also fitted in the total sample.
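The random split into calibration and validation samples can be sketched in Python as below. The article does not say how the random assignment was generated, so the function name `split_calibration_validation`, the use of Python's `random.Random` and the seed value 2017 are illustrative assumptions; only the sample sizes (376 = 177 + 199) come from the text.

```python
import random


def split_calibration_validation(ids, n_calibration, seed=None):
    """Randomly split respondent IDs into calibration and validation samples.

    Hypothetical helper: the article does not describe how its split was drawn.
    """
    if not 0 < n_calibration < len(ids):
        raise ValueError("n_calibration must leave both samples non-empty")
    rng = random.Random(seed)   # local generator, so a fixed seed reproduces the split
    shuffled = list(ids)        # copy, so the caller's list is left unchanged
    rng.shuffle(shuffled)
    return shuffled[:n_calibration], shuffled[n_calibration:]


# 376 respondents split with the article's sizes: 177 calibration, 199 validation.
respondents = list(range(1, 377))
calibration, validation = split_calibration_validation(respondents, 177, seed=2017)
print(len(calibration), len(validation))  # 177 199
```

Fixing the seed makes the split reproducible, which helps when the calibration and validation analyses have to be re-run or checked by someone else.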

Analyses were performed on the polychoric correlation matrix of the items using the maximum likelihood method with robust estimation. The Satorra-Bentler scaled chi-square (χ²S-B) was computed along with the following goodness-of-fit indices (Bentler, 2006): the comparative fit index (CFI; Bentler, 1990), the non-normed fit index (NNFI; Bentler & Bonett, 1980) and the root mean square error of approximation (RMSEA; Browne & Cudeck, 1993; Steiger, 2000). The CFI and NNFI measure the proportional improvement in fit of the hypothesized model relative to a baseline null model. Values above .90 are generally considered to indicate an acceptable fit (Bentler, 1992; Bentler & Bonett, 1980; Sharma, Mukherjee, Kumar, & Dillon, 2005; Sun, 2005; Weston & Gore, 2006), while values close to .95 indicate a good fit (Hu & Bentler, 1999). The RMSEA is an absolute misfit index: the closer its value to zero, the better the fit. Values below .08 indicate a reasonable fit (Browne & Cudeck, 1993) and those below .06 a good fit (Hu & Bentler, 1999).
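The three fit indices can be computed from the chi-square statistics of the hypothesized and null models with their textbook formulas, sketched here in Python. This is an illustration under stated assumptions, not a reconstruction of the EQS output: EQS reports robust versions based on the Satorra-Bentler scaled chi-square, and the RMSEA is shown in one common form that uses N - 1. The chi-square values in the usage example are hypothetical, not those reported in Table 1; df = 35 is the degrees of freedom of a one-factor model for 10 items, and 45 is the df of the null (independence) model. The hypothetical helper `describe_fit` applies the cutoffs cited above and, as an assumption, treats an RMSEA of exactly .08 as still reasonable.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA in one common form: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2, df, chi2_null, df_null):
    """CFI: 1 - model noncentrality / max(null noncentrality, model noncentrality)."""
    d_model = max(chi2 - df, 0.0)
    d_null = max(chi2_null - df_null, d_model)
    return 1.0 if d_null == 0 else 1.0 - d_model / d_null


def nnfi(chi2, df, chi2_null, df_null):
    """NNFI (Tucker-Lewis index); unlike the CFI it can fall outside 0-1."""
    null_ratio = chi2_null / df_null
    return (null_ratio - chi2 / df) / (null_ratio - 1.0)


def describe_fit(cfi_value, nnfi_value, rmsea_value):
    """Label fit with the cited cutoffs (assumption: .90, .95 and .08 are inclusive)."""
    worst = min(cfi_value, nnfi_value)
    incremental = "good" if worst >= 0.95 else "acceptable" if worst >= 0.90 else "poor"
    absolute = ("good" if rmsea_value < 0.06
                else "reasonable" if rmsea_value <= 0.08 else "poor")
    return incremental, absolute


# Hypothetical chi-square values (NOT the article's Table 1): one-factor model
# for 10 items (df = 35) against the independence model (df = 45), N = 376.
chi2_m, df_m, chi2_0, df_0, n = 50.0, 35, 1000.0, 45, 376
fit = (cfi(chi2_m, df_m, chi2_0, df_0),
       nnfi(chi2_m, df_m, chi2_0, df_0),
       rmsea(chi2_m, df_m, n))
print([round(v, 3) for v in fit])
print(describe_fit(*fit))
```

Separating the index formulas from the cutoff labels keeps the numeric computation independent of whichever convention (.90 versus .95, .06 versus .08) a reader prefers to apply.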

An item analysis was also performed by computing the corrected item-total correlations. Internal consistency was assessed with Cronbach's alpha coefficient.
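Cronbach's alpha and the corrected item-total correlations can be computed with Python's standard library, as sketched below. For k items, alpha is k/(k - 1) × (1 - sum of item variances / variance of the total score); the corrected item-total correlation correlates each item with the sum of the remaining items, so that an item does not inflate its own correlation. The function names and the four-respondent data set are hypothetical, not the study's data, and the sketch assumes complete responses with no missing values.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix given as a list of rows."""
    k = len(rows[0])
    items = list(zip(*rows))                 # transpose: one tuple per item
    totals = [sum(row) for row in rows]      # each respondent's total score
    sum_item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def corrected_item_total(rows):
    """Correlate each item with the total of the *other* items."""
    totals = [sum(row) for row in rows]
    correlations = []
    for item in zip(*rows):
        rest = [t - x for t, x in zip(totals, item)]  # total minus this item
        correlations.append(pearson(item, rest))
    return correlations


# Hypothetical responses: 4 respondents x 3 items on a 1-4 scale.
rows = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 3, 4]]
print(round(cronbach_alpha(rows), 3))                     # 0.875
print([round(r, 2) for r in corrected_item_total(rows)])
print(all(r > 0.40 for r in corrected_item_total(rows)))  # True
```

The last line applies the .40 threshold that the Results use to judge the corrected item-total correlations as satisfactory.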

Having tested the one-factor structure of the SSEPQ, we then conducted a descriptive analysis of the total score and of each item to determine students' satisfaction with podcasts.

Results

Factor structure and internal consistency

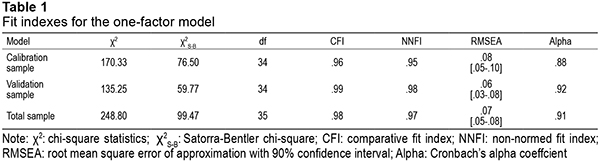

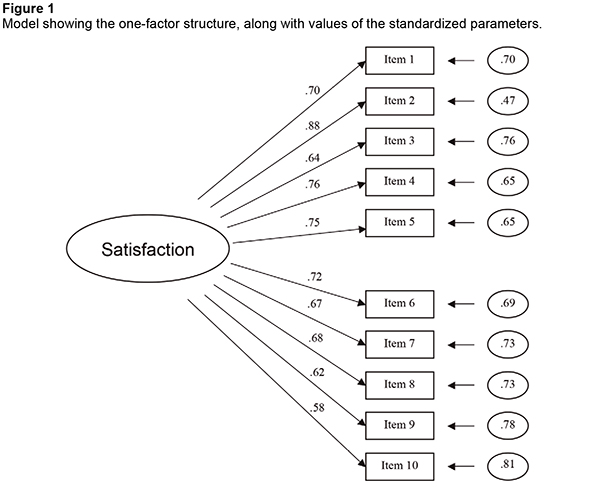

A CFA was performed to test the one-factor structure of the SSEPQ in the calibration sample. The CFI and NNFI values indicated a good fit of the model, as both were equal to or higher than .95. The RMSEA value (.08) was at the upper limit of what is considered a reasonable fit. The one-factor model was then tested in the validation sample, yielding adequate goodness-of-fit indices with values similar to those found in the calibration sample. Finally, the goodness-of-fit indices for the total sample were calculated; these also showed a good fit. Together, these results indicate a stable structure across groups. Table 1 shows the fit indices for the cross-validation strategy. Standardized parameter estimates for the model fitted to the total sample are shown in Figure 1; all are statistically significant.

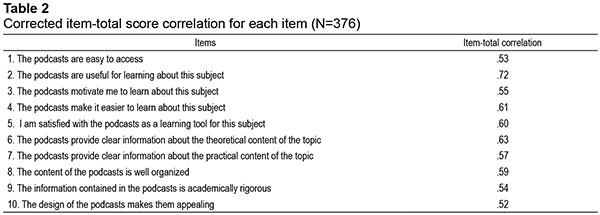

Table 2 shows the corrected item-total correlation for each item of the SSEPQ. All values were above .40, which is satisfactory. The values of Cronbach's alpha presented in Table 1 (.88 or higher) indicate that the questionnaire has adequate internal consistency.

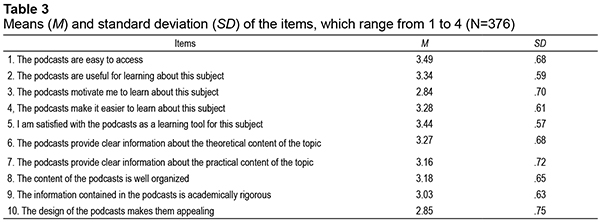

Students' satisfaction with podcasts

Table 3 presents descriptive statistics for the items. All item means were higher than 3 except for item 10 (mean of 2.85). The total score on the SSEPQ is calculated by summing the 10 items, each rated from 1 to 4, so it can range from 10 to 40; higher scores indicate greater overall satisfaction with the podcasts. The mean total score in this sample was 31.93 (out of a possible maximum of 40). Taken together, these results show that the students were satisfied with the use of podcasts as educational tools.
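The scoring rule described above can be expressed as a small Python function. The function names and the example responses are hypothetical, and rejecting incomplete or out-of-range answers is our own choice, since the article does not say how missing responses were handled. The same arithmetic shows that the sample mean of 31.93 corresponds to an average rating of about 3.19 per item on the 1-4 scale.

```python
def ssepq_total(responses):
    """Return the SSEPQ total score: the sum of 10 item ratings, each from 1 to 4."""
    if len(responses) != 10:
        raise ValueError("the SSEPQ has exactly 10 items")
    if any(r not in (1, 2, 3, 4) for r in responses):
        raise ValueError("each item must be rated 1, 2, 3 or 4")
    return sum(responses)  # possible range: 10 (all 1s) to 40 (all 4s)


def mean_item_rating(total):
    """Convert a total score to the average rating per item on the 1-4 scale."""
    return total / 10


# Hypothetical respondent who rates most items 3 and a few items 4.
example = [4, 3, 3, 4, 3, 3, 4, 3, 3, 3]
print(ssepq_total(example))                # 33
print(round(mean_item_rating(31.93), 2))   # 3.19 (article's sample mean total)
```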

Discussion

The main purpose of this paper was to present a brief, simple and standard questionnaire for assessing students' satisfaction with educational podcasts in higher education: The Student Satisfaction with Educational Podcasts Questionnaire (SSEPQ). The SSEPQ consists of 10 Likert-type items that gather students' views regarding content adequacy, ease of use, usefulness and benefits to learning from the podcasts they have used during a course.

Results from the CFA showed that the questionnaire has a one-factor structure, which supports the use of the total score as a global index of students' satisfaction with podcasts. The one-factor model was replicated in the validation sample, an independent random half of the participants, as well as in the total sample. All the items showed a satisfactory corrected item-total correlation, and the reliability analyses indicated that the SSEPQ has adequate internal consistency.

Overall, the SSEPQ total score obtained in this sample indicates that students were satisfied with short 3-5 minute podcasts as a tool to complement traditional educational resources. Specifically, students considered that the podcasts provided clear and rigorous information about the topic, that their content was well organized, and that they were easy to access, useful and motivating for learning about the subject. These results are consistent with previous research based on survey questions or interviews, which found that students were satisfied with the use of podcasts (Lakhal et al., 2007; Traphagan et al., 2010) and perceived their benefits as a learning tool (Bolliger et al., 2010; Clark et al., 2007; Evans, 2008; Hill & Nelson, 2011; Kay & Kletskin, 2012; Montealegre et al., 2010; Popova et al., 2014; Vajoczki et al., 2010; Van Zanten et al., 2012). Our descriptive analysis showed mean values above 3 on all items except the one referring to the design of the podcasts (i.e., whether the design made them appealing), which had a mean of 2.85. This result suggests that the design of the podcasts might need to be revised to make them more attractive to students.

The main advantages of the SSEPQ are its brevity and its capacity to provide teachers with direct feedback from their students, thus allowing them to make continuous improvements to the teaching/learning process. Because teachers are not necessarily expert users of new technologies, advances in these technologies also highlight the need for tools that can provide data on students' satisfaction with them. As Biggs (2001) pointed out, keeping track of students' views regarding the different aspects of their learning process should be regarded as a cornerstone of quality assurance in higher education. Indeed, although much educational research has highlighted the importance of providing adequate and useful feedback to students, ensuring that teachers receive adequate and useful feedback from students is also fundamental. Assessing students' satisfaction with the SSEPQ can help identify which aspects of podcasts should be improved to ensure that they are effective learning tools.

This study also has some limitations. First, the questionnaire was developed to evaluate educational podcasts designed for a research methods course in psychology. Further research is therefore required to provide evidence of its applicability to other courses and knowledge domains. If the questionnaire proves applicable more broadly, it could then be used in comparative studies of student satisfaction with podcasts across different scientific fields of higher education. Second, we focused solely on students' satisfaction as an indicator of the effectiveness of podcasts. Other indicators (e.g., educational outcomes) should also be considered to assess whether podcasts are effective and appropriate tools for improving or facilitating students' learning experience.

To sum up, the SSEPQ is a brief and standard questionnaire that assesses students' satisfaction with educational podcasts. Lecturers can find the SSEPQ a helpful tool when developing and using such podcasts to improve their teaching. Our results indicate a high level of satisfaction with the use of short 3-5 minute podcasts as a supplementary learning tool.

References

1. Abdous, M., Facer, B. C., & Yen, C. (2012). Academic effectiveness of podcasting: A comparative study of integrated versus supplemental use of podcasting in second language classes. Computers and Education, 58, 43-52. https://doi.org/10.1016/j.compedu.2011.08.021

2. Abt, G., & Barry, T. (2007). The quantitative effect of students using podcasts in a first-year undergraduate exercise physiology module. Bioscience Education, 10, 1-9. https://doi.org/10.3108/beej.10.8

3. Alonso-Arbiol, I. (2009). Use of audio files improves students' performance in higher education. In A. Lazinica & C. Calafate (Eds.), Technology education and development (pp. 447-456). InTech. https://doi.org/10.5772/7271

4. Bentler, P. M. (1990). Comparative fit indexes in structural models. Psychological Bulletin, 107, 238-246. https://doi.org/10.1037/0033-2909.107.2.238

5. Bentler, P. M. (1992). On the fit of models to covariances and methodology to the Bulletin. Psychological Bulletin, 112, 400-404. https://doi.org/10.1037/0033-2909.112.3.400

6. Bentler, P. M. (2006). EQS 6 structural equations program manual. Encino, CA: Multivariate Software, Inc.

7. Bentler, P. M., & Bonett, D. G. (1980). Significance tests and goodness of fit in the analysis of covariance structures. Psychological Bulletin, 88, 588-606. https://doi.org/10.1037/0033-2909.88.3.588

8. Biggs, J. (2001). The reflective institution: Assuring and enhancing the quality of teaching and learning. Higher Education, 41, 221-238. https://doi.org/10.1023/A:1004181331049

9. Bolden, B., & Nahachewsky, J. (2015). Podcast creation as transformative music engagement. Music Education Research, 17, 17-33. https://doi.org/10.1080/14613808.2014.969219

10. Bolliger, D. U., Supanakorn, S., & Boggs, C. (2010). Impact of podcasting on student motivation in the online learning environment. Computers and Education, 55, 714-722. https://doi.org/10.1016/j.compedu.2010.03.004

11. Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. Bollen & J. Long (Eds.), Testing structural equation models (pp. 136-162). Newbury Park, CA: Sage.

12. Burke, S., & Cody, W. (2014). Podcasting in undergraduate nursing programs. Nurse Educator, 39(5), 256-259. https://doi.org/10.1097/NNE.0000000000000059

13. Carvalho, A., Aguiar, C., & Maciel, R. (2009). A taxonomy of podcast and its application to higher education. In ALT-C 2009 "In dreams begins responsibility"-choice, evidence and change, 8-10 September 2009, Manchester. Retrieved from http://repository.alt.ac.uk/638/1/ALT-C_09_proceedings_090806_web_0161.pdf

14. Elliott, K. M., & Shin, D. (2002). Student satisfaction: An alternative approach to assessing this important concept. Journal of Higher Education Policy and Management, 24, 197-209. https://doi.org/10.1080/1360080022000013518

15. Evans, C. (2008). The effectiveness of m-learning in the form of podcast revision lectures in higher education. Computers and Education, 50, 491-498. https://doi.org/10.1016/j.compedu.2007.09.016

16. Fernández, V., Simo, P., & Sallan, J. M. (2009). Podcasting: A new technological tool to facilitate good practice in higher education. Computers and Education, 53, 385-392. https://doi.org/10.1016/j.compedu.2009.02.014

17. Heilesen, S. B. (2010). What is the academic efficacy of podcasting? Computers and Education, 55, 1063-1068. https://doi.org/10.1016/j.compedu.2010.05.002

18. Hill, J. L., & Nelson, A. (2011). New technology, new pedagogy? Employing video podcasts in learning and teaching about exotic ecosystems. Environmental Education Research, 17, 393-408. https://doi.org/10.1080/13504622.2010.545873

19. Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1-55. https://doi.org/10.1080/10705519909540118

20. Kay, R., & Kletskin, I. (2012). Evaluating the use of problem-based video podcasts to teach mathematics in higher education. Computers and Education, 59, 619-627. https://doi.org/10.1016/j.compedu.2012.03.007

21. Lakhal, S., Khechine, H., & Pascot, D. (2007). Evaluation of the effectiveness of podcasting in teaching and learning. In G. Richards (Ed.), Proceedings of World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2007 (pp. 6181-6188). Chesapeake, VA: AACE.

22. Lee, M. J. K., & Chan, A. (2007). Reducing the effects of isolation and promoting inclusivity for distance learners through podcasting. Turkish Online Journal of Distance Education-TOJDE, 8, 85-104.

23. Leijen, A., Lam, I., Wildschut, L., Simons, P. R. J., & Admiraal, W. (2009). Streaming video to enhance students' reflection in dance education. Computers and Education, 52, 169-176. https://doi.org/10.1016/j.compedu.2008.07.010

24. McGarr, O. (2009). A review of podcasting in higher education: Its influence on the traditional lecture. Australasian Journal of Educational Technology, 25(3), 309-321. https://doi.org/10.14742/ajet.1136

25. Montealegre, M. C., Carvajal, D., Holguín, A., Pedraza, R., & Jaramillo, C. A. (2010). Implementation of podcast and clickers in two biology courses at Los Andes University and impact evaluation in the teaching-learning process. Procedia - Social and Behavioral Sciences, 2, 1767-1770. https://doi.org/10.1016/j.sbspro.2010.03.981

26. Narula, N., Ahmed, L., & Rudkowski, J. (2012). An evaluation of the '5 Minute Medicine' video podcast series compared to conventional medical resources for the internal medicine clerkship. Medical Teacher, 34, E751-E755. https://doi.org/10.3109/0142159X.2012.689446

27. O'Bannon, B. W., Lubke, J. K., Beard, J. L., & Britt, V. G. (2011). Using podcasts to replace lecture: Effects on student achievement. Computers and Education, 57, 1885-1892. https://doi.org/10.1016/j.compedu.2011.04.001

28. Pegrum, M., Bartle, E., & Longnecker, N. (2015). Can creative podcasting promote deep learning? The use of podcasting for learning content in an undergraduate science unit. British Journal of Educational Technology, 46, 142-152. https://doi.org/10.1111/bjet.12133

29. Popova, A., & Edirisingha, P. (2010). How can podcasts support engaging students in learning activities? Procedia - Social and Behavioral Sciences, 2, 5034-5038. https://doi.org/10.1016/j.sbspro.2010.03.816

30. Popova, A., Kirschner, P. A., & Joiner, R. (2014). Effects of primer podcasts on stimulating learning from lectures: How do students engage? British Journal of Educational Technology, 45, 330-339. https://doi.org/10.1111/bjet.12023

31. Reychav, I., & Wu, W. (2015). Mobile collaborative learning: The role of individual learning in groups through text and video content delivery in tablets. Computers in Human Behavior, 50, 520-534. https://doi.org/10.1016/j.chb.2015.04.019

32. Román, P., & Solano, I. M. (2010). Sistemas de audio y vídeo por Internet. In I. M. Solano (Ed.), Podcast educativo. Aplicaciones y orientaciones del m-learning para la enseñanza (pp. 55-74). Sevilla: Editorial MAD.

33. Sharma, S., Mukherjee, S., Kumar, A., & Dillon, W. R. (2005). A simulation study to investigate the use of cutoff values for assessing model fit in covariance structure models. Journal of Business Research, 58, 935-943. https://doi.org/10.1016/j.jbusres.2003.10.007

34. Steiger, J. H. (2000). Point estimation, hypothesis testing, and interval estimation using the RMSEA: Some comments and a reply to Hayduk and Glaser. Structural Equation Modeling, 7, 149-162. https://doi.org/10.1207/S15328007SEM0702_1

35. Sun, J. (2005). Assessing goodness of fit in confirmatory factor analysis. Measurement & Evaluation in Counseling & Development, 37, 240-256.

36. Taylor, L., & Clark, S. (2010). Educational design of short, audio-only podcasts: The teacher and student experience. Australasian Journal of Educational Technology, 26, 386-399. https://doi.org/10.14742/ajet.1082

37. Traphagan, T., Kusera, J. V., & Kishi, K. (2010). Impact of class lecture webcasting on attendance and learning. Educational Technology Research and Development, 58, 19-37. https://doi.org/10.1007/s11423-009-9128-7

38. Vajoczki, S., Watt, S., Marquis, N., & Holshausen, K. (2010). Podcasts: Are they an effective tool to enhance student learning? A case study from McMaster University, Hamilton Canada. Journal of Educational Multimedia and Hypermedia, 19, 349-362.

39. Van Zanten, R., Somogyi, S., & Curro, G. (2012). Purpose and preference in educational podcasting. British Journal of Educational Technology, 43, 130-138. https://doi.org/10.1111/j.1467-8535.2010.01153.x

40. Vogele, C., & Gard, E. T. (2006). Podcasting for corporations and universities: Look before you leap. Journal of Internet Law, 10, 3-13.

41. Weston, R., & Gore, P. A. (2006). A brief guide to structural equation modeling. The Counseling Psychologist, 34, 719-751. https://doi.org/10.1177/0011000006286345

42. Wieling, M., & Hofman, W. (2010). The impact of online video lecture recordings and automated feedback on student performance. Computers and Education, 54, 992-998. https://doi.org/10.1016/j.compedu.2009.10.002


Correspondence:

Department of Psychobiology and Methodology of Behavioural Sciences.

Faculty of Psychology. Blvr. Louis Pasteur, 25.

29071 Málaga (Spain). E-mail: ralarcon@uma.es.

E-mail of co-author Rebecca Bendayan: bendayan@uma.es.

E-mail of co-author María José Blanca: blamen@uma.es

Received: 23 December 2016

Revised: 20 February 2017

Accepted: 14 March 2017