Introduction

In today's information-rich world, a set of abilities is essential to succeed: understanding complex, ambiguous, and contradictory information; sorting, from the massive amount of data available, which information is legitimate, grounded, and reusable; making good decisions (i.e., decisions that are adapted to the problem at hand and that enable an individual to solve it efficiently, avoiding negative outcomes); solving problems efficiently; participating democratically and actively in one's community; entering and staying in an ever more competitive and unusual job market; interacting and working with people who have a dissimilar background, culture, language, political party, and/or religion; updating skills and remaining a lifelong learner; and more. Here, critical thinking (CT) is claimed to be fundamental (Franco, Butler, & Halpern, 2015; Halpern, 2014; Kay, 2015; Vieira, Tenreiro-Vieira, & Martins, 2011).

Because CT is a hot topic, there are many definitions and theoretical models of it. From a review of the literature (Finn, 2011; Franco & Almeida, 2015; Franco et al., 2015; Krupat et al., 2011), CT may be defined as having the ability to use a particular set of higher-order cognitive skills, and the disposition to use those skills in everyday life, to tilt the odds of success in one's favor. Hence, thinking becomes custom-made, since it is tailored to the task, circumstances, and context.

As relevant as it is to define CT and to identify its skills and dispositions, and as imperative as it is to understand the impact that CT has in personal, academic, and professional life spheres, it is also indispensable to consider valid and reliable ways to assess CT (Saiz & Rivas, 2008). There is a set of tests designed to do exactly that, each focused on one or more particular dimensions and using a specific format. A few well-known CT assessment measures serve as examples. The Watson-Glaser Critical Thinking Appraisal (Watson & Glaser, 1980, 2005), aimed at grade nine through adulthood, assesses inference, recognition of assumptions, deduction, interpretation, and evaluation of arguments, using multiple-choice items. The Ennis-Weir Critical Thinking Essay Test (Ennis & Weir, 1985) is open-ended and aimed at grades seven through college, assessing general CT ability in argumentation. The California Critical Thinking Skills Test: College Level (Facione, 1990) is aimed at college students, and assesses analysis, inference, evaluation, deductive reasoning, and inductive reasoning using multiple-choice items. The Cornell Critical Thinking Tests have a multiple-choice item format and two levels of assessment: Level X (Ennis & Millman, 2005a), aimed at grades 4-14, which assesses induction, deduction, credibility, and identification of assumptions; and Level Z (Ennis & Millman, 2005b), aimed specifically at college students and adults, which assesses induction, deduction, credibility, identification of assumptions, fallacies, definition, prediction, and experimental planning. Finally, there is the Halpern Critical Thinking Assessment (HCTA; Halpern, 2012), which is presented below in further detail.

Such a variety of instruments creates a wide range of possibilities for cognitive assessment. Yet, tests have limitations. Some limitations stem from format: although easier to grade, multiple-choice items may fail to capture anything beyond the basic cognitive processes of memorization and recall, or recognition and selection, since this format asks whether the solution presented is right or wrong, or requests the selection of one answer from various alternatives. Here, the thinking process may not be (fully) assessed, since respondents are not asked to elaborate on their thinking by answering questions or providing constructed answers. Other limitations stem from content: some tests present items that seem too artificial to respondents, failing to activate the relevant thinking processes that are applied to solve everyday problems, or even the motivation to invest intellectual effort in responding (Franco, Almeida, & Saiz, 2014; Saiz & Rivas, 2008).

The HCTA test is considered a turning point in CT assessment, mainly because of its format, its presentation of real-life scenarios, and the fact that it comprises the two components of CT - skills and dispositions. The HCTA test assesses five dimensions that correspond to the five skills that the theoretical model presented by Halpern postulates as core to CT: verbal reasoning; argument analysis; thinking as hypothesis testing; likelihood and uncertainty; decision making and problem solving. This test combines multiple-choice questions (which demand recognition) and open-ended questions (which demand free recall and elaboration), thus enabling a more comprehensive evaluation of CT: while keeping test-taking time manageable, it allows the test administrator to analyze the thinking process of respondents. The HCTA test is also attractive for its real-life situations, to which respondents can easily relate. This feature is particularly relevant considering that CT is thinking that is contextually applied, very much reliant on the circumstances faced by individuals. Also, it comprises the behavioral and attitudinal components of CT, making it possible to assess both the skills and the disposition to be a critical thinker (Ku, 2009). Overall, validation studies of the HCTA test consider academic performance and the impact of CT in daily life. Indeed, the relevance of CT becomes clearer when considering the links connecting CT to the quality of decisions made on a daily basis. This real-world validity is one of the main assets of the HCTA test, since it makes it possible to predict whether CT results in decisions and action, and to analyze whether such outcomes are positive or negative for the performer (Butler et al., 2012; Halpern, 2012).

In this paper, our aim was to analyze the level of CT of the average college student. We describe the process of translation and adaptation of the HCTA test to Portuguese, and present its validation study. Considerations are made about the psychometric properties of the Portuguese version, its (dis)similarity with the original version, and the impact of culture in helping to explain our data, as well as the concept of CT itself. We also examined whether there are differences in the quality of CT according to disciplinary area and academic level, seeing that both variables are associated with CT in the literature (e.g., Badcock, Pattison, & Harris, 2010; Brint, Cantwell, & Saxena, 2012; Cisneros, 2009; Drennan, 2010; Mathews & Lowe, 2011).

Methods

Participants

A total of 333 students enrolled in a public university located in the North of Portugal participated in this study. The inclusion criteria were: (i) to be at least 18 years old or about to turn 18 (in this study, two participants, having late birthdays, entered university at age 17); (ii) to attend the first year of a Graduate Degree or a Master's Degree; (iii) to participate voluntarily. Participants ranged in age from 17 to 51 (M = 22.0, SD = 5.65), and the majority were female (n = 252, 75.7%). This convenience sample was composed of students in the first year of a Graduate Degree (n = 179, 53.8%) or a Master's Degree (n = 154, 46.2%) in a variety of majors (e.g., Biological Sciences; Biomedical Sciences; Communication; Computer Science; Economics; Education; Engineering; Foreign Languages, Literatures and Linguistics; Management; Medicine; Physics; Psychology) in the disciplinary areas of Social Sciences and Humanities (SSH) (n = 159, 47.7%) or Science and Technology (ST) (n = 174, 52.3%). All academic majors assessed were equally distributed according to academic level (Graduate Degree and Master's Degree).

Instrument

Halpern Critical Thinking Assessment. The HCTA test was originally developed by Halpern in the USA, and has been tested in several other countries, such as Belgium (Verburgh, François, Elen, & Janssen, 2013), Ireland (Dwyer, Hogan, & Stewart, 2012), Spain (Nieto & Saiz, 2008), and China (Hau et al., 2006; Ku & Ho, 2010). Its reliability and validity have been ascertained in different countries, with participants of diverse academic levels and using varied methodologies. The HCTA test is composed of two correlated parts, which together form a general CT factor: one comprising constructed-response items and one comprising forced-choice items. This structure was confirmed using confirmatory factor analysis in the original study (.51), as well as in other studies in different countries, such as Belgium (.42). As for reliability, the HCTA test showed adequate internal consistency in the original study (α = .88) and, again, in other studies in different countries, such as Spain (α = .78) (for further detail, see Halpern, 2012). This standardized test assesses five dimensions: Verbal Reasoning (VR) - to recognize how thought and language influence each other, and to detect techniques that are present in daily language to avoid being manipulated by them; Argument Analysis (AA) - to analyze the validity of the arguments that are used daily in support of a certain decision or action; Thinking as Hypothesis Testing (THT) - to keep an empirical attitude when processing information to explain life events and test hypotheses; Likelihood and Uncertainty (LU) - to inform decisions with estimates of the probability of success/failure when carrying out that decision; Decision Making and Problem Solving (DMPS) - to analyze a problem from different angles, to generate alternative courses of action, and to select the one with the highest chance of success.
Twenty-five everyday life scenarios from diverse areas (e.g., education, politics, health, finances) are presented; for each, the respondent must first respond to open-ended questions, and then to multiple-choice questions. The HCTA test combines items that demand free recall and elaboration (open-ended questions) and recognition (multiple-choice questions). In addition, it taps both the behavioral and motivational components of CT by assessing CT skills and the disposition to be a critical thinker (Halpern, 2012; Ku, 2009). Both components are strongly connected, and for this reason they are not separately evaluated in the factor analysis of the HCTA test's items. Construct validity evidence suggests two first-order factors associated with the format of the items (open-ended and multiple-choice items), from which a general second-order factor emerges, identified as CT. This computer-based test is aimed at subjects aged 15 years and older, and can be used in Educational Psychology or in the job market for personnel selection. Its completion time varies between 60 and 80 minutes, and both its administration and grading are computerized. The higher the score in the test - ranging from 0 to 194 points - the higher the level of CT.

Procedures

The author was contacted, and permission (from author and editor) was granted to use the HCTA test for our research. The test was translated into Portuguese by a native speaker; the back-translation into English and the comparison between the two versions were undertaken with the assistance of two university teachers and a professional translator. The goal was to identify inaccuracies, and to accomplish an accurate translation and a culturally appropriate adaptation. This process required close collaboration with the author, given the specificity of a few idiomatic expressions and cultural particularities in the original form of the test, which made it necessary to find a proper equivalent in Portuguese. Minor adaptations were made to convey authenticity and familiarity in all items, securing linguistic equivalence while respecting cultural singularities.

A qualitative analysis was undertaken with 14 undergraduates in the third year of a psychology major using the think-aloud method (Ericsson & Simon, 1993); this analysis with this incidental group aimed to ensure the clarity, comprehensibility, and relevance of all items. Since the HCTA test is computerized, it was necessary to collaborate with the Portuguese company affiliated with the company that markets the HCTA test, in order to program it and make it operational for administration.

We contacted faculty members from several departments to ask them for a few minutes of class time to approach participants. In class, we presented the concept and relevance of CT to students, informed them about our study goals, and asked for their voluntary participation. In return, we offered to share with each participant her/his results, so they could find out more about their CT. The students who were interested in participating provided their email address so that they could be contacted later to arrange a convenient day and time for their participation. Participants could also decide whether to take the test individually or at the same time as their peers, seeing that the administration of the HCTA test occurred in a classroom specifically designated for this study, equipped with seven computers. Each participant spent between one and two hours taking the test.

All tests were rated by the same grader: open-ended items were graded by analyzing each answer and rating it according to a grading chart presented by the computer program; the multiple-choice items were automatically graded by the computer program. After grading the protocols of the complete sample, data were exported to an SPSS database, and for each participant we printed a results sheet produced automatically by the computer program. Following the administration of the HCTA test and its grading, each participant was contacted again to set a convenient day and time to receive their results.

Statistical analysis

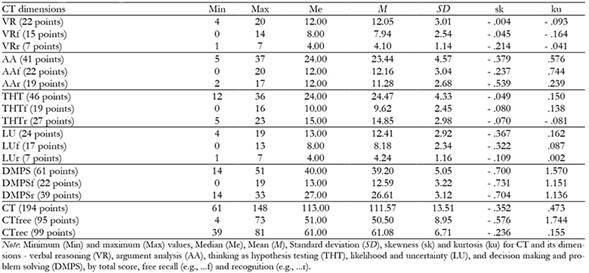

For the sensitivity estimation, descriptive statistics (Min, Max, Me, M, SD, skewness and kurtosis) were analyzed by CT dimension and CT total score. Absolute values higher than three (for skewness) and 10 (for kurtosis) were considered to challenge the normality of the distribution (Kline, 2000). For the validity estimation, the five-factor model presented for this test was tested through a Confirmatory Factor Analysis (CFA). The model's goodness-of-fit was assessed using a set of indices: Chi Square (χ2/df), Comparative Fit Index (CFI), Parsimony Comparative Fit Index (PCFI), Goodness-of-Fit Index (GFI), Parsimony Goodness-of-Fit Index (PGFI), Root Mean Square Error of Approximation (RMSEA), and Standardized Root Mean Square Residual (SRMR). The model was considered to have acceptable or good fit, respectively, if: χ2/df was less than 5 or 2; CFI and GFI were higher than 0.8 or 0.9; PCFI and PGFI were higher than 0.6 or 0.8; and RMSEA was lower than 0.10 or 0.08 (Arbuckle, 2008; Wheaton, 1987). For the reliability estimation, we computed omega reliability coefficients for each format dimension and for the total score, given the ordinal response format of items. Values equal to/higher than .70 were considered acceptable (Gadermann, Guhn, & Zumbo, 2012). The significance cutoff point considered for all statistical analyses was p < .05. Statistical analyses were conducted using the software IBM SPSS Statistics for Windows (version 22.0), AMOS statistical package (version 21.0), and R (version 1.5.8).
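The acceptable/good cutoffs listed above can be read as a simple decision rule. The following Python sketch only illustrates that rule and is not the procedure that was run in AMOS. The function name `classify_fit` and the dictionary keys are hypothetical, the index values in the usage lines are made up for illustration rather than taken from this study, and SRMR is left out because the text states no cutoff for it.

```python
# Cutoffs as stated in the text: (acceptable, good, direction).
# "lower" means smaller values indicate better fit; "higher" means larger values do.
THRESHOLDS = {
    "chi2_df": (5.0, 2.0, "lower"),
    "CFI": (0.8, 0.9, "higher"),
    "GFI": (0.8, 0.9, "higher"),
    "PCFI": (0.6, 0.8, "higher"),
    "PGFI": (0.6, 0.8, "higher"),
    "RMSEA": (0.10, 0.08, "lower"),
}


def classify_fit(index_name: str, value: float) -> str:
    """Return 'good', 'acceptable', or 'poor' for one fit index (hypothetical helper)."""
    acceptable, good, direction = THRESHOLDS[index_name]
    if direction == "lower":
        if value < good:
            return "good"
        if value < acceptable:
            return "acceptable"
    else:
        if value > good:
            return "good"
        if value > acceptable:
            return "acceptable"
    return "poor"


# Illustrative values only (not results from this study).
example_indices = {"chi2_df": 3.1, "CFI": 0.95, "PGFI": 0.7, "RMSEA": 0.09}
for name, value in example_indices.items():
    print(f"{name} = {value}: {classify_fit(name, value)}")
```

The comparisons are strict, mirroring the "less than" and "higher than" wording of the criteria; a value that sits exactly on a cutoff is therefore assigned to the weaker category.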

Results

The descriptive statistics are presented in Table 1. Because items were graded within the computer program (the open-ended items manually by the same grader, and the multiple-choice items automatically) and automatically aggregated into global scores by the software, items were not analyzed individually (e.g., to test their difficulty); for this reason, descriptive statistics are presented only for each dimension and for the total score. Additionally, scores are shown according to the items' format - elaboration and recognition - seeing that this double format accounts for distinct, yet correlated, skills. Indeed, in the manual of the HCTA test (Halpern, 2012), the author adopts this same procedure in order to enable the assessment of the distinct cognitive processes of recall and recognition in participants' performance, hence creating the possibility of a more comprehensive assessment of critical thinking and an easier format for test-taking and test-grading. For each dimension, all items assessed in an open-ended format created a total score concerning elaboration (e.g., VRf) and all items assessed in a multiple-choice format created a total score concerning recognition (e.g., VRr). The maximum possible score is specified for each dimension and for the total score. Considering the total score of each dimension, skewness (negatively skewed, ranging between -.700 and -.004) and kurtosis (leptokurtic, ranging between -.093 and 1.570) values are acceptable (Kline, 2005). The average CT total score was 111.57 (SD = 13.51).

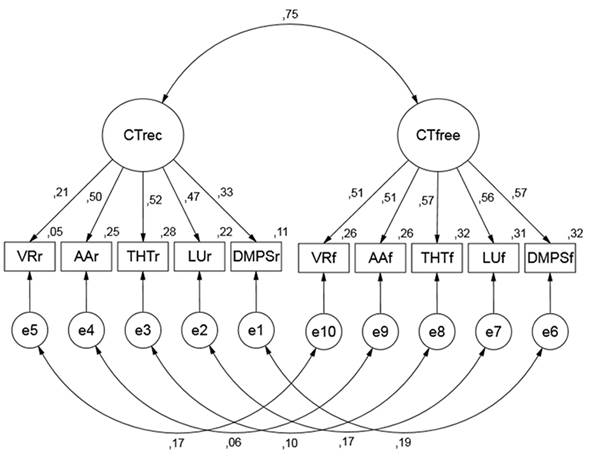

Concerning the validity of the Portuguese version, we tested the five-factor model presented in the manual of the HCTA test through a CFA to analyze its factorial structure (see Figure 1). Similarly to the original study (Halpern, 2012), we tested a measurement model (M1) and analyzed its goodness-of-fit. In M1, scores for the free-recall items in all five dimensions were hypothesized to load on a latent factor designated "CT free-recall" (CTfree), while scores for the multiple-choice items in all five dimensions were hypothesized to load on a latent factor designated "CT recognition" (CTrec). Both latent factors - CTfree and CTrec - were correlated to test the hypothesis that the two response formats, free-recall and recognition, were differentiated yet associated aspects of CT.

The indices considered to decide on the model's goodness-of-fit supported the five-factor structure presented for the original HCTA test, with acceptable (PCFI and PGFI) to good (χ2/df, CFI, GFI, and RMSEA) fit. The goodness-of-fit was slightly lower in our sample than in the original study, yet adequate: χ2(29, n = 334) = 58.6, p = .001; χ2/df = 2.02; CFI = .929; PCFI = .599; GFI = .966; PGFI = .509; RMSEA (90% CI) = .055 (.035, .076); p for the test of close fit (RMSEA < .05) = .308; SRMR = .0438. Overall, M1 explains 56.3% of shared variance between CTfree and CTrec, compared to the 75.7% obtained in the original study.

Concerning the reliability estimation for total CT, CTrec, and CTfree, we found reliability coefficients to be acceptable for CT (ω = .75) and CTfree (ω = .70), yet not as satisfactory for CTrec (ω = .58).
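For readers less familiar with omega, the sketch below shows the generic McDonald's omega formula for a one-factor model with standardized loadings and uncorrelated residuals, together with the .70 cutoff used in this study. It is an illustration under stated assumptions, not the ordinal omega computation performed in R for this study (which, following Gadermann et al., 2012, relies on polychoric correlations). The function names are hypothetical and the loadings in the usage lines are invented for illustration.

```python
from typing import Sequence


def omega_total(loadings: Sequence[float]) -> float:
    """McDonald's omega from standardized loadings of a one-factor model.

    Assumes uncorrelated residuals, so each unique variance is 1 - loading**2.
    """
    if len(loadings) == 0:
        raise ValueError("at least one loading is required")
    common = sum(loadings) ** 2                        # variance due to the factor
    unique = sum(1.0 - lam ** 2 for lam in loadings)   # summed residual variances
    return common / (common + unique)


def is_acceptable(omega: float, cutoff: float = 0.70) -> bool:
    """Apply the 'equal to or higher than .70' criterion stated in the text."""
    return omega >= cutoff


# Hypothetical loadings, for illustration only.
hypothetical_loadings = [0.5, 0.5, 0.5, 0.5]
omega = omega_total(hypothetical_loadings)
print(f"omega = {omega:.3f}, acceptable: {is_acceptable(omega)}")
```

Under this formula, omega rises with both the size and the number of loadings, which is one reason a score built from fewer or weaker indicators can show lower reliability.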

We performed a one-way MANOVA for disciplinary area and for academic level to test whether there were differences in the HCTA scores concerning CTfree and CTrec. Statistically significant differences were found for disciplinary area, F(2, 330) = 26.00, p < .0005; Wilks' Λ = 0.864, partial η2 = .14. Disciplinary area had a statistically significant effect on both CTrec (F(1, 331) = 20.50, p < .0005, partial η2 = .06) and CTfree (F(1, 331) = 49.44, p < .0005, partial η2 = .13), with ST students scoring higher in CTrec (M = 62.62, SD = 6.36) and CTfree (M = 53.57, SD = 8.19) when compared to SSH students in each CT score (M = 59.38, SD = 6.68, and M = 47.13, SD = 8.54, respectively). Also, we found a statistically significant difference in CT scores according to academic level, F(2, 330) = 2.92, p = .05; Wilks' Λ = 0.983, partial η2 = .02. Academic level had a statistically significant effect on CTfree (F(1, 331) = 5.62, p < .05, partial η2 = .02), with Master's students scoring higher (M = 51.74, SD = 8.53) than Graduate students (M = 49.42, SD = 9.18), yet not on CTrec (F(1, 331) = 2.41, p = .12, partial η2 = .01).
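As a consistency check, the partial η2 values reported above can be recovered from the F statistics and their degrees of freedom, and, for the multivariate tests, from Wilks' Λ. The sketch below uses the standard conversion formulas; the function names are hypothetical, and the Wilks-based formula assumes s = 1, which holds here because each factor compares two groups (one effect degree of freedom).

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def partial_eta_squared_from_wilks(wilks_lambda: float, s: int = 1) -> float:
    """Multivariate partial eta squared: 1 - Lambda**(1/s); s = 1 is assumed here."""
    return 1.0 - wilks_lambda ** (1.0 / s)


# Univariate effects reported in the text: (label, F, df_effect, df_error).
reported = [
    ("Disciplinary area, CTrec", 20.50, 1, 331),
    ("Disciplinary area, CTfree", 49.44, 1, 331),
    ("Academic level, CTfree", 5.62, 1, 331),
    ("Academic level, CTrec", 2.41, 1, 331),
]
for label, f_value, df1, df2 in reported:
    print(f"{label}: partial eta2 = {partial_eta_squared(f_value, df1, df2):.2f}")

# Multivariate effects, from the reported Wilks' Lambda values.
print(f"Disciplinary area (MANOVA): {partial_eta_squared_from_wilks(0.864):.2f}")
print(f"Academic level (MANOVA): {partial_eta_squared_from_wilks(0.983):.2f}")
```

Rounded to two decimals, these computations reproduce the effect sizes given in the text (.06, .13, .02, and .01 for the univariate tests; .14 and .02 for the multivariate tests).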

Discussion

This study adds to the HCTA test validation literature and supports the test's value for assessing CT. Skewness and kurtosis values for the descriptive statistics were acceptable, pointing to the normality of the distribution. Concerning validity, our model's goodness-of-fit was slightly lower when compared to the original study, yet adequate, with a model explaining 56.3% of shared variance between CTfree and CTrec. As for precision, it can be considered acceptable. Perhaps CT itself has a different meaning across cultures, and hence its manifestation in dispositions/behavior is also singular to each culture. This hypothesis gains strength considering data about the HCTA test validity across cultures: for instance, in the Spanish version, with a sample of 355 adults, the correlation between free recall and recognition formats was .49; in the Dutch version, with a sample of 173 college students in Belgium, the correlation between the two formats was .42 (Halpern, 2012). Indeed, the cross-cultural validity of the HCTA test, examined by comparing its different translated versions, is a pertinent development and requires further research.

The one-way MANOVA for disciplinary area and academic level revealed statistically significant differences. Disciplinary area had a statistically significant effect, with ST students scoring higher in CTrec and CTfree when compared to SSH students. As for academic level, it showed a statistically significant effect on CTfree alone, with Master's students scoring higher than Graduate students. In light of our data, CT scores vary according to disciplinary area; yet, it may be necessary to go beyond such a label and identify the variables that truly explain why students (who happen to be) from different disciplinary areas differ in regard to CT. On the one hand, disciplinary differences could be due to a particular emphasis placed on skills/dispositions related to CT in the form of learning contexts, classroom characteristics, course content, or pedagogical practices (Badcock et al., 2010; Brint et al., 2012; Mathews & Lowe, 2011). For instance, Heijltjes, Van Gog, and Paas (2014) found that learning CT skills is enhanced by the combination of explicit CT instruction and practice. On the other hand, disciplinary differences could be due to individual characteristics that come into play when the time comes to enroll in a particular course from a certain disciplinary area (Badcock et al., 2010), seeing that neurocognitive systems, in interaction with life experiences, are responsible for individual differences in CT (Bolger, Mackey, Wang, & Grigorenko, 2014). Also, it is necessary to account for the impact of educational background, as suggested by Evens, Verburgh, and Elen (2013), who found that Secondary Education impacts the choice of disciplinary area, which relates to students' CT performance.
Overall, CT differences may be mostly due to pedagogical practices, so "educators will need to study the practices of exemplary instructors rather than exemplary disciplines, because no disciplines appear to stand out in this domain" (Brint et al., 2012, p. 22).

As for academic level, it appears to differentiate students when CT is assessed in a free-recall format, with Master's students scoring higher than Graduate students. In regard to this academic variable, such differences may be contextualized in light of the cumulative impact of learning, which may also affect the quality of CT. Concurrently, students in more advanced academic years would have a wider understanding of the college experience concerning academic learning, but also a deeper personal growth that accompanies the process of attending college (Cisneros, 2009; Drennan, 2010). Altogether, this could have an impact on the quality of thinking, with higher education exerting a positive influence on CT, requiring students to become more active and, at the same time, making them more prone to think things through, to make sound decisions, and to solve problems. Also, CT takes time and practice to develop, so a higher level of CT could be expected as students move through college (Halpern, 2014). Nevertheless, it is risky to elaborate on such considerations, seeing that it was not the same sample of students that was assessed in the first year of a Graduate Degree and, three years later, in the first year of a Master's Degree.

Conclusions

"Today it is less important to know, and more important to know what is known", states John Kay (2015), referring to the advantages of a liberal Education, in his column on economics and business in the Financial Times. So it seems that although important to possess the "facts and figures" type of knowledge, it is very important to possess knowledge about which facts and figures are relevant and reliable, and then, about where to have access to such facts and figures, and how to (re)use them ethically and efficiently. This creates implications for higher education institutions, by implying that higher education should: (1) focus on thinking, not as overwhelmingly on "knowing"; (2) aim that students become more "generalized" concerning knowledge, not particularly more specialized; (3) develop transversal skills such as CT, not merely job-specific skills; (4) value the Social Sciences, such as Philosophy, Anthropology, or Sociology, and the skills and dispositions that are rooted in this field of knowledge, instead of predominantly supporting Science, Technology, Engineering and Mathematics. "The objective should be to equip students to enjoy rewarding employment and fulfilling lives in a future environment whose demands we can neither anticipate nor predict ... the capacities to think critically, judge numbers, compose prose and observe carefully ... will be as useful then as they are today" (Kay, 2015). Indeed, CT matters and higher education moulds (Franco, 2016). And one criteria to assess any institution of Education is its focus on the preparation of students, not only for employment, but for life. And here, "the cultivated capacities for critical thinking and reflection are crucial in keeping democracies alive and wide awake" (Nussbaum, 2010, p. 10).