Introduction

In the 1990s, the movement towards practices based on scientific evidence, which has its roots in the clinical and health fields, started to gain recognition in the educational area with the idea of “data-driven decision making” (McCardle & Miller, 2009). In the educational context, this meant that decisions on what teaching methodology to use to support students’ learning should be based on empirical data generated by well-designed scientific studies. The American Psychological Association (APA, 2005) defines evidence-based practices as teaching practices that integrate knowledge generated both by high-quality research and by the experiences of educational practitioners. These practices should be prioritized by educators wanting to optimize their students’ educational outcomes. Apart from the potentially greater learning benefits for the students, evidence-based practices could also have a positive financial impact, as resources would be invested in interventions that have a higher probability of achieving the expected goals (Duff & Clarke, 2011; Forman, Fagley, Steiner, & Schneider, 2009; Snowling & Hulme, 2011). Nevertheless, the gap between recommendations based on empirical evidence and school practice remains (Spiel, Schober, & Strohmeier, 2016; Vanderlinde & Braak, 2010), and this also seems to be the case in Spanish-speaking countries (e.g., Perines, 2017; Perines, 2018; Ripoll, 2014).

According to Wilson, Petticrew, Calnan, & Nazareth (2010), the dissemination of research is a planned action that involves communication and transfer of knowledge to target audiences and stakeholders (e.g., parents, teachers, school principals, government officials, clinicians, teacher trainers, policy-makers, etc.), taking into account the settings in which findings are to be implemented (e.g., schools, associations, colleges/universities, etc.) and the available resources. The main goal of a dissemination plan is to facilitate the comprehension of research outcomes and their integration into decision-making processes and practices and, as a consequence, to bridge the divide between research and practice.

There are many factors at the political, academic, and practical levels that might be hindering the synchronization of research and practice in the educational area. This work aims to promote reflection on and discussion of aspects concerning the dissemination and usage of evidence-based educational materials in Spanish-speaking countries. We start with a discussion about the availability and importance of generating evidence for educational materials. Next, we try to describe the process of disseminating research evidence and the potential role that educational researchers and practitioners could have in the process. Lastly, we try to identify possible barriers in the dissemination process that could be partly responsible for the evidence-practice gap. This essay could therefore be of special interest to educational researchers and practitioners who are interested in promoting a better integration of knowledge generated in research and practice. Although the research-practice gap appears to be a problem in various areas of education, we will illustrate our points using examples taken from the area of reading research.

Official summary reports about “best practices” and “what works” for teaching reading to English-speaking populations have been increasingly released, and this seemingly signals a growing interest on the part of the research community to try to transfer knowledge generated in scientific studies to educators (e.g., Commonwealth of Australia, 2005; National Institute of Child Health Development, NICHD, 2000; Rose, 2006; Snow, 2002; Snow, Burns, & Griffin, 1998).

In Spanish-speaking countries, the situation is similar in terms of increased efforts in trying to ‘translate’ scientific evidence to support the work of practitioners, in particular for educators working with populations with special educational needs (e.g., Angulo-Domínguez et al., 2011; Echeita-Sarrionandía & Verdugo-Alonso, 2004; Fuentes-Biggi et al., 2006; Gobierno Vasco, 2006; Ministerio de Educación y Ciencia de España, 1994; Save the Children, 2013). In this context, the term ‘translating’ scientific evidence seems to take on its literal meaning too strongly. That is, although educational researchers in Spanish-speaking countries do recognize the need to make scientific evidence available and accessible to teachers working in the schools, there is a lack of evidence-based programs based on research carried out with Spanish-speaking children (Ripoll, 2014). As a consequence, some of the above-mentioned Spanish reports are “literal translations” of the recommendations based on evidence derived from English-speaking samples. This can be problematic in some areas. A good example is the area of teaching children to read. English and Spanish differ in many aspects, including the transparency of the orthography (Seymour, Aro, & Erskine, 2003), prosodic features (Calet, Gutiérrez-Palma, Simpson, González-Trujillo, & Defior, 2015; Dauer, 1983), and speech production (Carreiras & Perea, 2004). Additionally, the rate of learning to decode from print to speech differs greatly, with Spanish-speaking children achieving a reading accuracy of 95% of words after the first year of learning to read, compared with 35% in more opaque orthographies such as English (Caravolas, Lervåg, Defior, Seidlová-Málková, & Hulme, 2013; Caravolas et al., 2012; Seymour et al., 2003).
Consequently, it is plausible that teaching reading in English and Spanish may require different methodologies, or perhaps the same methodologies but with a different emphasis at different phases of reading development, or at different ages and grades. Apart from linguistic divergences, environmental issues and differences in culture, political situation, and educational systems may hinder the application of specific teaching programs and influence their effectiveness if they are merely transplanted from an English-based system to Spanish-speaking countries. Thus, there is a need to gather additional evidence about the effectiveness of educational intervention programs when applied to Spanish-speaking populations.

In sum, there is an increasing awareness about the importance of generating evidence for educational materials in Spanish-speaking countries. However, gathering evidence is dependent upon establishing successful proactive relationships with educational centers and on raising the social validity of evidence-based educational materials. Yet, in contrast to the health area (see systematic review of dissemination conceptual frameworks by Wilson et al., 2010), there is a lack of systematic reporting on what educational researchers do or need to do to achieve this goal in Spanish-speaking countries. Consequently, the aim of the present article is to explore how we can better connect educational research and practice in order to best serve the populations of interest. In the following sections, we will reflect on the importance of generating high-quality scientific evidence, especially in Spanish-speaking countries, to improve psychoeducational practice. Moreover, we will try to trace possible pathways in the dissemination process, including reaching target audiences, with special interest in the relationship between educational researchers and practitioners. Along this pathway, we will try to identify barriers but also common grounds where these professionals can meet, interact, exchange, and equally profit from a longer lasting relationship, while not losing sight of the ultimate goal, which is generating the highest possible benefits for school children.

The importance of generating evidence

As seen in the introduction, although the importance of using evidence-based materials and intervention programs in education has been increasing in recent years (Spiel et al., 2016), there is still a lack of evidence-based materials in Spanish (e.g., Ripoll, 2014). Thus, teachers are left with few choices or are overwhelmed by the number of non-evidence-based offerings, which might not be particularly effective (Cook, Tankersley, Cook, & Landrum, 2008). In this sense, Ripoll and Aguado (2014) confirmed in their meta-analysis that only a few of the reading comprehension programs published in Spanish present evaluation data about their efficacy. One reason for this could be the amount of resources necessary to produce high-quality evidence using large-scale, randomized controlled, and longitudinal designs. In contrast to countries like the US, where private financing or donations are common, Latin-American and Spanish universities and research grant agencies are mainly supported by public money. Due to the economic crisis and a certain political instability in the last ten years in many Spanish-speaking countries, public financing of universities, research grants, and scholarships has been repeatedly reduced (Fundación Alternativas, 2017). Thus, the educational research community in many Spanish-speaking countries is struggling with the lack of resources to generate and disseminate evidence that has practical impact.

A further reason for the lack of evidence-based materials in Spanish could be the fact that educational policies, and thus state recommendations regarding teaching practices, are not traditionally guided by evidence in Spanish-speaking countries (Perines, 2018; Ripoll, 2016). In Spain, the national Ministry of Education is responsible for the development of the school curriculum, which contains general objectives and minimum contents to be taught at the elementary and secondary school levels. Nevertheless, additional content and the methodology with which these objectives are to be achieved are dependent on agreements made by the Educational Councils (Consejerías de Educación) in each autonomous community. The process by which these agreements are established is not transparent, and thus it is difficult to determine how much the experts on these councils use evidence to guide their decisions. In contrast, in the US, with the “Reading Excellence” Act of 1998, the “No Child Left Behind” Act of 2001, and the more recent “Every Student Succeeds” Act of 2015, the government has put forward important laws at the national level that explicitly support and promote the use of evidence-based practices in schools. Even though these actions are not free of criticism - for example, sometimes leading to a heavy focus on preparing students for a standardized test (known as ‘teaching to the test’), or leading to an increased focus on cost-effective strategies arising from these acts - the adoption of these practices might potentially create opportunities for discussion and, consequently, motivate teachers to learn more about evidence-based methods for fostering students’ learning.

Perhaps partially due to the decentralization of recommendations, teaching practices at the elementary school level in Spain vary from region to region and are not always in line with the recommended evidence-based practices. One concrete example of this can be seen in the area of literacy instruction. Scientific research has demonstrated that the teaching of reading should consider five basic skills that constitute reading competency: fluency, vocabulary, phonological awareness, alphabetic knowledge, and comprehension (NICHD, 2000). In an observational study, Suárez, Sánchez, Jiménez, & Anguera (2018) concluded that fewer than 50% of the teaching-to-read practices used by a small sample of pre-school and elementary school teachers were based on evidence. In the specific case of reading fluency, research has shown that prosody is an essential marker of progress in general and additionally contributes to improving reading comprehension in Spanish-speaking children (Ardoin, Morena, Binder, & Foster, 2013; Calet, Gutiérrez-Palma, & Defior, 2017; Kuhn, Schwanenflugel, & Meisinger, 2010; Valencia et al., 2010). However, although a growing number of studies show that teachers need to use activities focused on practicing reading with expression, prosody has largely been neglected in primary schools, given that teachers usually focus just on reading with speed and accuracy (e.g., Suárez et al., 2018). Another essential reading skill that has been neglected in the elementary school years is vocabulary knowledge. A large body of research in the area of vocabulary recommends that vocabulary should be taught by providing rich and varied language experiences, by explicitly teaching individual words and word-learning strategies, and by fostering word awareness (Beck, McKeown, & Kucan, 2002; Butler et al., 2010; Gomes-Koban, Simpson, Valle, & Defior, 2017; NICHD, 2000; Snow, 2002; Wendling & Mather, 2009).
This is particularly important for at-risk students, such as students with learning difficulties or from low socio-economic status (SES) backgrounds (Biemiller & Boote, 2006; Hart & Risley, 2003; Perfetti, Landi, & Oakhill, 2007). Interestingly, based on relatively recent observations in some Spanish schools, one common procedure consists of solely giving teachers (outdated) lists of words (e.g., Ferrándiz-Mingot, 1978) that children should learn in each primary grade. As a consequence, vocabulary knowledge, although recognized by the teachers as an important skill, is sometimes treated as a component of reading comprehension that does not need specific instruction, and the teaching of vocabulary is confined to writing definitions of words after reading a text passage. Furthermore, a review of the literature surrounding vocabulary training in Spanish-speaking children found only four published studies (Gomes-Koban et al., 2017; Larraín, Strasser, & Lissi, 2012; Morales, 2013; Pérez, 1995), with all but the most recent of these containing serious flaws in their design or inappropriate reporting of results. Thus, even if teachers were aware of the importance of using programs based on evidence, there would not be a diverse pool from which to choose.

Besides the lack of evidence-based programs, the disconnect between educational research and practice in Spanish-speaking countries may also be due to the types of dissemination strategies and relations established among educational research groups and between educational researchers and teachers, who, although striving for the same goal, mostly work in parallel rather than jointly. The lack of collaboration between educational research groups might be in part driven by the grant process, as competition for scarce research funds means research groups have an incentive to work alone rather than share information. Further, this competitive behavior might be counterproductive at the political level, as a strong unified educational research community, such as the American Educational Research Association, would potentially have more power to advocate for the importance of evidence-based practices compared to smaller, separate, and uncoordinated voices.

Because of the lack of collaboration and well-documented procedures, the research evidence dissemination process in the educational area in Spanish-speaking countries remains unclear. To make it more transparent, a description of the primary targeted audiences and stakeholders and the most common avenues or channels used to disseminate research findings is needed.

The process of research evidence dissemination

The process of research dissemination is complex, as it entails spreading research production at different levels: researcher to researcher; researcher to practitioners; researcher to general public; researcher to law-makers, etc. The usual dissemination by publishing in scientific journals, be it in the form of single studies or research summaries (e.g., meta-analyses and systematic reviews), or, more recently, data-sharing (e.g., “Open Science Framework”, “Wordbank”) works at the first level, but some authors argue that this strategy is not particularly efficient at reaching wider audiences, and is especially inefficient at reaching teachers (Burkhardt & Schoenfeld, 2003).

Thus, the question of how best to convey the gathered evidence to practitioners and how to strengthen their capacity to make informed decisions remains open for discussion. Although it is agreed that dissemination strategies should be planned a priori, the usually observed practice (including our own experiences within Spanish schools) is to inform teachers about promising research findings by summarizing theoretical information and making it accessible through books and presentations in schools and at practitioner conferences. In English, there are also specialized platforms (e.g., “Reading Rockets”; http://www.readingrockets.org/) dedicated to disseminating results of evidence-based research among teachers. In Spain, it is worth noting single initiatives (e.g., the website and blog “Comprensión lectora basada en evidencias” (evidence-based reading comprehension) by Ripoll) and organizations (e.g., “Synthesis: Language and Training”), both of which are working towards dissemination to a wider audience.

Even though such initiatives are commendable, the current gap between research and practice signals the necessity of undertaking further steps to increase the chance of making real changes in teaching practices. Apart from adapting messages to suit particular audiences, dissemination strategies should include ‘two-way avenues’ for continuous experience exchange (World Health Organisation, WHO, 2014). In the case of the educational area, this means that educational researchers and practitioners in Spanish-speaking countries need to create a system that allows for interactive and continuous feedback about teaching practices and methodologies, along with a system to evaluate relevant indicators of the quality and effectiveness of educational materials. In this sense, it is necessary to set evaluation standards in Spanish-speaking countries in order to facilitate the verification and selection of educational programs by different educational professionals.

In English-speaking countries, there is a range of initiatives to assist in evaluating the validity of effectiveness claims for a variety of programs, including the agency “What Works Clearinghouse” (https://ies.ed.gov/ncee/wwc/), the online resources “Best Evidence Encyclopedia” (Johns Hopkins University; http://www.bestevidence.org/) and “Promising Practices Network” (http://www.promisingpractices.net/), or the guideline “Blueprints for Violence Prevention” (Mihalic, Fagan, Irwin, Ballard, & Elliott, 2004), among others. In an attempt to unify the standards followed by the different agencies, Flay and colleagues (2005) have described a number of criteria to assist practitioners and policy-makers in determining which interventions are effective and worth being broadly disseminated. In terms of methodology, these authors highlight proper sampling and psychometrics, rigorous data analysis, together with consistent positive and practically relevant effects with at least one long-term follow-up measure and trials in real-world settings. In addition, programs need to provide detailed manuals, training, and support for the people who will implement the intervention. Lastly, these authors recommend that programs include cost information and evaluation and monitoring tools to allow adopting agencies to monitor the intervention’s efficacy. In German-speaking countries, we can cite the “German Society for Evaluation Standards” (https://www.degeval.org/degeval-standards/standards-fuer-evaluation/). Their established “Standards for Evaluation” are a reference for researchers and policy-makers when verifying the quality of evaluation studies in various areas. Currently, we are not aware of any large-scale initiatives of this type in Spanish-speaking countries that could be applied to the educational area.

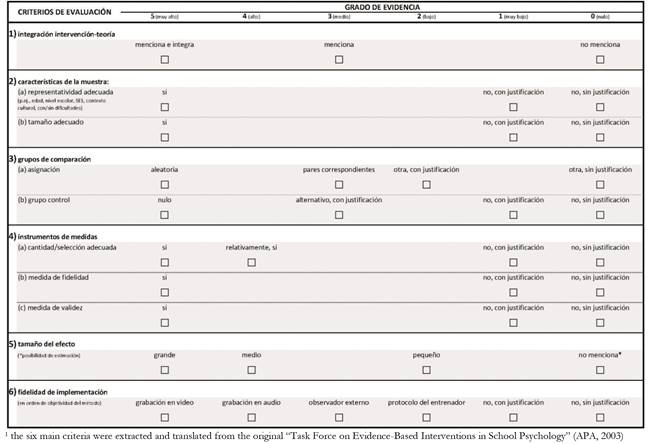

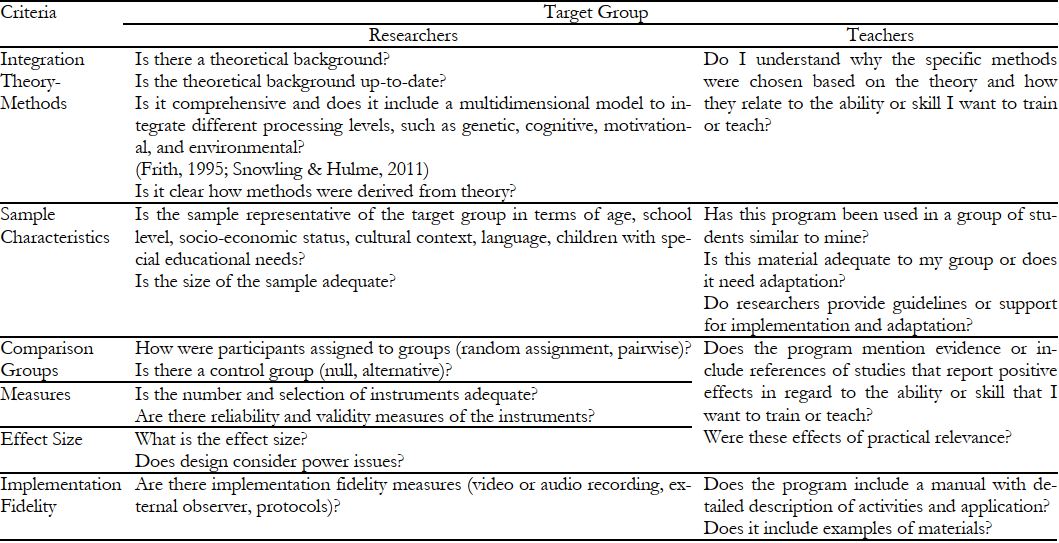

In the US, the APA and the American Society for the Study of School Psychology have attempted to bridge research and practice by publishing a manual to help evaluate the level and quality of evidence of intervention programs undertaken in the educational area (APA, 2003). The main purpose of the manual was to ensure that educational intervention results are interpretable and reliable, and that the achieved effects are positive and of practical relevance. Returning to our goal of finding common grounds of cooperation and interaction between researchers and practitioners to facilitate more effective dissemination, one idea could be the development of an interactive database of evidence-based educational programs. The criteria mentioned in the APA manual (2003) could be a suitable starting point to create a continuous feedback system in the form of a specialized platform.

The first step would be to tailor important research terminology for elementary school teachers. In Table 1, we show an example using six relevant criteria contained in the manual. A list of this sort could help teachers when searching and selecting an educational program for their classes.

Table 1. Example of how to tailor important evaluation criteria for educational materials for elementary school teachers.¹

¹ The six criteria used for this example were extracted from the original “Task Force on Evidence-Based Interventions in School Psychology: Procedural and Coding Manual” (APA, 2003).

Further, in this specialized platform each program listed would be accompanied by an evaluation system, which would include a standard framework, such as the one suggested in Appendix 1. The more points an intervention study obtains, the higher the probability that the results are based on sound research methodology and, thus, the higher the probability of obtaining results similar to the ones reported when actually using the materials in a similar setting. It is important to point out that a checklist in the educational area needs to take into account the particularities and difficulties of implementing interventions in each specific school setting. This means that in certain circumstances, the use of a more rigorous methodology might not be possible (e.g., the inclusion of a null control group). In these cases, it is indispensable that researchers clearly state the reasons for their choices. Research summaries, systematic reviews, and results of meta-analyses could also be added to the evaluation. Most importantly, the checklist could be accompanied by testimonies of educators who have used the material in their classes. This would facilitate access, location, and organization of information about educational interventions for a whole range of professionals when trying to select a program that better meets their goals and the students’ needs. In addition, such a repository would be an inexpensive and useful way for researchers to profit from the knowledge and expertise gathered from the work of educational practitioners, in the sense of the applicability and acceptability of intervention programs in real and daily school settings. This information could be used when releasing new or adapted versions of the materials.
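
To make the idea of a points-based evaluation more concrete, a minimal sketch in Python is given here. It is not the framework proposed in Appendix 1: the criteria names are illustrative only, paraphrased from the methodological points attributed to Flay and colleagues (2005) earlier in this section, and `ProgramEntry`, `score`, `unexplained_gaps`, and the example program are hypothetical names introduced purely for illustration. The sketch assumes one point per satisfied criterion and records the reasons researchers give for any criterion that could not be met.

```python
from dataclasses import dataclass, field

# Illustrative criteria only (assumption): paraphrased from the summary of
# Flay et al. (2005) in the text, NOT the checklist in Appendix 1.
CRITERIA = (
    "proper_sampling",
    "sound_psychometrics",
    "rigorous_data_analysis",
    "consistent_positive_effects",
    "long_term_follow_up",
    "real_world_trial",
    "manual_and_training",
    "cost_information",
)


@dataclass
class ProgramEntry:
    """One hypothetical entry in the proposed platform."""
    name: str
    met: set = field(default_factory=set)               # criteria the study satisfies
    justifications: dict = field(default_factory=dict)  # unmet criterion -> stated reason
    testimonies: list = field(default_factory=list)     # free-text feedback from educators

    def score(self) -> int:
        # One point per satisfied criterion (assumed scoring rule).
        return sum(1 for c in CRITERIA if c in self.met)

    def unexplained_gaps(self) -> list:
        # Unmet criteria for which the researchers gave no reason.
        return [c for c in CRITERIA
                if c not in self.met and c not in self.justifications]


# Hypothetical example entry; the program and its details are invented.
entry = ProgramEntry(
    name="Hypothetical Vocabulary Program",
    met={"proper_sampling", "rigorous_data_analysis", "manual_and_training"},
    justifications={"long_term_follow_up": "school year ended before follow-up"},
    testimonies=["Easy to fit into a 30-minute lesson."],
)

print(f"{entry.name}: {entry.score()} of {len(CRITERIA)} points")
print("Unmet without stated reason:", entry.unexplained_gaps())
```

Keeping the justifications and testimonies next to the score reflects the point made above: a low score in a particular school setting is easier to interpret when the reasons for methodological choices and the experiences of educators are visible alongside it.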

Another way of creating common grounds for collaboration involves one additional level in a dissemination plan, namely the action level (Harmsworth & Turpin, 2000). Even though awareness and knowledge are essential and should be part of any dissemination strategy plan, it is only in combination with the action level that these strategies will have a greater potential to trigger real change in teaching practices. The action level includes not only the training of educators to equip them with the necessary skills and knowledge to implement new materials or teaching techniques with fidelity, but also the establishment of rapport between researchers and educators. To achieve this, factors such as social validity and acceptability of programs need to be taken into account (Kazdin, 1980; Wolf, 1978). In the educational context, these terms refer to the opinion of teachers, school directors, and parents regarding whether the procedures of a specific intervention program are adequate and justifiable, and whether the goals pursued are relevant to their particular needs as well as to society in general. As the design of an educational intervention involves a compromise between rigid scientific standards and the complex dynamics of real school settings (Kazdin, 2004), it is important to ensure that the issues under study are attuned to the actual problems and questions of daily school practice and do not get lost in the process of trying to balance these two sides (Van der Akker, 1999; Perines, 2018; Institute of Education Sciences, 2018). In this sense, educational researchers implementing interventions in Spanish educational centers are encouraged to take advantage of this opportunity for direct contact with practitioners to establish rapport, for example, by asking them about their current issues of interest, the challenges they face, or simply what their views of research are and what expectations they place on research.

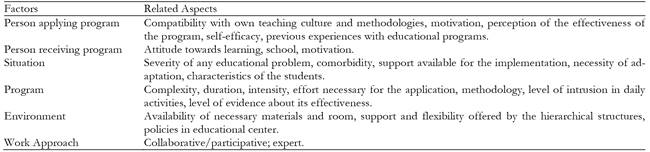

Another important point for educational researchers when trying to gain more acceptance for educational interventions is to identify factors that influence the opinion of practitioners. Studies in this area point to five relevant groups of variables related to: a) the person who applies the intervention, b) the person who receives the intervention, c) program characteristics, d) environment, and e) the type of work approach or relationship established (Eckert & Hintze, 2000; Elliott, 1988; Kamphaus, 2000; Turan, Ostrosky, Halle, & Destefano, 2004). These factors are summarized in Table 2. As the goal of the current article is to increase synergy between research and practice, let us focus on the kind of work approach and relationship built during the implementation of a research project.

According to West and Idol (1987), there are basically two types of work approaches. The collaborative approach is based on the idea of cooperation and joint work to achieve a goal, with opportunities for exchanging knowledge and making decisions together. In contrast, the expert approach has a more authoritarian and prescriptive nature. In this case, it is assumed that the researchers take the role of the expert, and the project would first be planned without interaction with the educational center and then presented for the school director’s consent. There is evidence suggesting that teachers prefer the collaborative over the expert approach (e.g., Babcock & Pryzwansky, 1983; Kutsick, Gutkin, & Witt, 1991). Nevertheless, this preference seems to interact with personal and situational variables (Forman et al., 2009). For example, Graham (1998) investigated the work approach preferences of teachers from an educational center towards an intervention outlined by an external educator for a child diagnosed with anxiety. His observations pointed to a more complex process, in which at certain moments teachers preferred direct support and clear instructions about what to do instead of opportunities to participate in discussions and decisions about actions. Along similar lines, DeForest and Hughes (1992) reported that teachers with lower self-efficacy were less prone to accept a proposed intervention and to invest time and effort to collaborate with colleagues compared to teachers with higher self-efficacy. Consequently, it seems difficult to make straightforward recommendations about the type of relationship between researchers and teachers that should be pursued, as it will greatly depend on the specific individuals involved. Nevertheless, it seems that researchers should at least set out with an attitude of allowing for the possibility of a collaborative approach so as not to forgo the potentially valuable input from experienced and motivated teachers.

One aspect of the relationship between researchers and teachers that will clearly bring positive outcomes is the level of support offered to the teachers during implementation. This is because it has been shown that high levels of support during implementation play a central role in regard to the acceptability of and fidelity to a program (Mautone et al., 2009). This will be particularly relevant in countries where belonging to a certain SES dictates the quality of the educational services and resources available, which is the case in many Spanish-speaking countries. In order to diminish the educational disparities in underprivileged communities, a dissemination strategy that targets these groups is highly recommended. One example of a US-based initiative targeting low SES children is the “instructional support teams” co-organized by the Metropolitan Center for Urban Education (2008) and New York University. Their main objective is to give teachers in public schools the support needed to guarantee that students from low SES backgrounds and ethnic minorities will succeed in the general educational system, thus avoiding their placement in special education programs. Initiatives like this, organized by research groups based at universities, would present another opportunity to bring research closer to practice. Even though it might appear costly at first sight, it is a matter of doing the math to compare the costs of running such projects to the public expenses over many years of maintaining the parallel special education programs. Unfortunately, we are not aware of any similar initiatives in Spain.

Based on the current situation in Spanish-speaking countries as presented to this point, it becomes clear that the development of more effective and transparent dissemination strategies needs to be on the educational research agenda. A first step towards this goal would be to determine possible challenges in the dissemination process. Thus, in the next section, we will try to identify general barriers to the uptake of research findings in the educational environment and the particularities of conducting research in the educational field in Spanish-speaking countries.

The challenges of evidence dissemination

The first barrier to consider is the level of preparedness of teachers and their perception of research evidence. Recent PISA reports confirm the importance of improving Teacher Education programs and providing continued career development opportunities (Barber & Mourshed, 2007) in order to strengthen the capacity of practitioners. Teachers working in public schools are confronted with very diverse and large classrooms, especially in times of increased mobility and considerable socio-economic divide in Spanish-speaking countries. This generates great challenges for teaching and class management. Thus, support and professional development in regard to response-to-intervention models and culture-sensitive educational practices will be of great importance to develop more inclusive educational systems that serve populations with varied home learning environments and ethnic-cultural and linguistic backgrounds (Cheesman & De Pry, 2010; Gay, 2000; National Center for Culturally Responsive Educational Systems, 2005). Moreover, due to the increasing evidence of the benefit of preventive actions targeting the precursors of learning to read, special attention to pre-school and kindergarten teachers is recommended (Foorman, Breier, & Fletcher, 2003). For example, studies show that reading outcomes in the elementary school years are predicted not only by abilities related to knowledge of print (e.g., letter identification), but also by oral language skills (e.g., narrative skills and expressive vocabulary) (Rayner, Foorman, Perfetti, Pesetsky, & Seidenberg, 2001; Scarborough, 1988).

A second barrier is the lack of clarity of research outputs in some areas. At least in literacy, researchers are sometimes confused when well-designed, theory-based, and evidence-driven interventions fail to generate significant measurable effects. One example is the common failure to find transfer effects of vocabulary intervention to standardized tests of reading comprehension, despite the high and significant correlations between these two factors repeatedly reported in the literature (see meta-analysis by Elleman, Lindo, Morphy, & Compton, 2009). Usually the arguments to explain the unsuccessful attempts are related to poor intervention fidelity or implementation problems. Regardless, when intervention studies fail to find large, clear effects, and the reasons for this involve complex explanations, evidence-based recommendations might appear less appealing to educators, especially if they do not have a grounding in the underlying cognitive theories.

The third barrier relates to the context of teachers’ daily work. The educational system and local school culture may hinder the use of evidence-based practices. In a study by Henderson and Dancy (2007), college physics instructors were interviewed in order to understand the reasons for the discrepancy between recommended evidence-based instructional strategies and the strategies they actually applied. In the instructors’ opinion, the gap is mainly due to situational factors that favor “traditional instruction.” Among the cited challenges were the amount of content that is expected to be covered, lack of time, class size, and the lack of role models and department rules that are supportive of innovative methods. In Spain, apart from time constraints, some studies in the area of speech therapy also report that restricted knowledge, lack of skills, and limited access to certain resources are obstacles to applying evidence-based practices (Carballo, Mendoza, Fresneda, & Muñoz, 2008; Fresneda, Muñoz, Mendoza, & Carballo, 2012).

Of note, resource management is a challenge not only for teachers, but also for educational researchers. One problem that researchers face is trying to maximize the impact of their studies and support changes to the system in the face of the resource constraints imposed on research projects. In regard to broader, non-scientific communication, a UK consulting agency has recently analyzed the efforts of university staff in undertaking public engagement for knowledge dissemination (Kelly, McNicoll, & Kelly, 2018). The results point to a huge hidden economic value, which is nevertheless not recognized by governmental agencies when allocating funding. We have no official data of this sort for Spanish-speaking countries, but from our observations in the field of education, many researchers do undertake dissemination activities after the completion of a research project, underscoring the researchers’ personal and professional commitment to the public of interest. However, these actions not only remain hidden, they also find less support from the research community itself due to the higher value placed on scientific communication, especially publishing in high-impact (English-language) journals (e.g., the ‘sexenios’ system in Spain, in which researchers’ productivity is evaluated by the government, with more weight given to higher-impact journals). In this context, the present lack of resources, time, and recognition for non-scientific dissemination initiatives pushes educational researchers in Spanish-speaking countries to focus more on individual and scientific dissemination (in English) than on broader and more difficult-to-reach audiences.

This brings us to the fourth and last barrier to be discussed: the need to interact with the end user (Vanderlinde & Braak, 2010; WHO, 2014). Although the implementation of a research project will open the doors to educational centers, it will not guarantee a successful flow of information, future collaborations, or changes in school practices. In this sense, educational research characterized by small isolated projects, usually carried out by individual doctoral students or small research groups in a highly competitive environment (Burkhardt & Schoenfeld, 2003), will not suit our goals. There is a need to develop two-way avenues in longer-lasting relationships that involve intensive discussion and possibilities of exchange between the relevant partners. A US-based project of this sort, carried out by the Carnegie Foundation for the Advancement of Teaching (2017), has been trying to create a common ground between researchers and educators. Based on the ideas of “improvement science” and “networked improvement communities,” a multi-disciplinary team is formed that works in a user-centered and problem-centered way to develop solutions to improve teaching and learning. This means that in this project the knowledge gathered by, and the opinions of, people working directly in the schools are recognized and highly valued. In addition, the concept involves the development of an infrastructure that will enable knowledge generated by the network community to arrive at the discussion table and be integrated in the decision process. Conferences aimed at a wider audience than just researchers offer another great possibility for interaction, and even for starting initiatives like the one just mentioned. For example, the British Dyslexia Association organizes yearly talks and workshops directed at researchers, teachers, and family members. In this mixed atmosphere, the different groups have the chance to meet, interact, broaden their knowledge, and network beyond their own professional boundaries. In Spain, some similar “mixed meetings” already exist, such as the “Congreso Internacional de Psicología y Educación,” as well as others organized by local dyslexia associations and “Centros de Profesores” (as part of their career development strategy). Nevertheless, we are not aware of initiatives such as the “networked improvement communities” in Spain.

Conclusions

Awareness of the advantages of using evidence-based educational practices is increasing. This means that theory with evidence of its impact and clearly established value to society will attract more interest from practitioners, decision-makers, and private and governmental funding agencies (Flay et al., 2005). Nevertheless, the gap between research and practice remains. Thus, developing a sustainable collaborative system between the research community and the educational centers that enables continuous exchange and reciprocal support in longer-lasting relationships is becoming a priority. Due to the lack of well-documented dissemination practices in Educational Psychology in Spanish-speaking countries, this work attempted to trigger this important discussion by describing some of the systems involved. This should allow the identification of possible barriers to research dissemination and stimulate the generation of ideas on how to overcome the research-practice and school-performance divides.

In this work, we call for the generation of more theory-driven and methodologically sound evidence for educational interventions in Spanish-speaking countries, as it seems illegitimate to recommend evidence-based practices to teachers when so few evidence-based materials are available in Spanish. Furthermore, evaluation standards should be set to enable different educational professionals to better judge the effectiveness of evidence-based educational materials. This evidence checklist should be made available in the form of an interactive platform, which would include additional information about the educational products gathered during their evaluation in research projects and their application in real classroom settings.

Most importantly, we believe that there is a need to reconsider the current process of generating and gathering evidence in the educational centers. In this sense, an educational research culture that values collaboration among groups of researchers and the continuity of the researcher-teacher relationship, through the development of an effective, interactive, and continuous feedback system, would generate important information for the revision of theoretical concepts and for updating intervention programs. To achieve this goal, it seems necessary to make budget allocation for broader and longer-lasting dissemination strategies explicit in research project proposals. It is relevant to highlight that funding agencies are gradually realizing the importance of communicating research activities and results to different and wider audiences. For example, prestigious European-funded research programs, such as Marie Curie and H2020, explicitly require “outreach” and “communication strategies” to be described in the grant application process. Therefore, educational researchers need to be prepared for this demand.

In addition, special attention should be given to teacher training with respect to self-efficacy and a positive mind-set towards research and evidence-based practices. This means that instead of considering teachers as final consumers of research products (Christianakis, 2010) and, thus, working towards offering final recipes of what works best, more effort should be invested in improving Teacher Education programs and in creating opportunities for interested educators to get involved in the research process. This would produce empowered, well-informed decision-makers and better collaboration partners.

In times of economic crisis and political instability in Spanish-speaking countries, it is important to mention that high academic performance is not only a result of high investment in education (Barber & Mourshed, 2007). According to the OECD, Singaporean students are among the best in international comparison studies, such as PISA, despite their country's investment in education being lower than that of twenty-seven of the thirty participating countries. In this case, it might be a matter of reallocating resources and rethinking concepts in the educational research process, especially in respect to collaboration. The possible common grounds for exchange between the groups of interest identified in this work (e.g., interactive platform, instructional support teams, networked improvement communities) should serve as a first step towards generating further discussion among colleagues about the best ways to form a networked collaborative system in Spanish-speaking countries: a sustainable system for continuous information exchange, in which the expertise of all groups is equally valued and from which all parties, and especially the students, can equally profit.