Introduction

The term metacognition, as coined by Flavell (1979), referred to reflection on one's own thoughts, and distinguished metacognitive knowledge, metacognitive experiences, goals or tasks, and actions or strategies. Since then, the term has had several other conceptions related to a wide spectrum of cognitive abilities and a multitude of overlapping concepts: social cognition (Andreou et al., 2018), theory of mind, self-regulation (Inchausti et al., 2019), introspective accuracy (Silberstein & Harvey, 2019), mentalization, cognitive insight, attributional complexity, emotional awareness and alexithymia (Bröcker et al., 2017a; Faustino et al., 2019), attentional biases and metacognitive beliefs (Wells & Capobianco, 2020), and the ability to analyze and modify reasoning and behavior (Moritz et al., 2007).

The importance of analyzing the capacity for identifying emotional states, social cognitions, or being able to predict the behavior of others, for example, has been found in various disorders. A first proposal directed at schizophrenia and depression focused on cognitive insight, that is, the perception of being objective about reality, being able to make use of feedback from others, having a certain perspective on and questioning one's own thoughts and beliefs (Beck et al., 2004). The question was not a matter of clinical insight, but of awareness of one's own distorted beliefs and misinterpretations. Later conceptually varied proposals have addressed metacognitive capacity, mainly in schizophrenia (Inchausti, García-Poveda, et al., 2017; Lysaker et al., 2011, 2013), substance abuse (Inchausti, Ortuño-Sierra, et al., 2017), OCD (Irak & Tosun, 2008) and depression or generalized anxiety disorder (Wells & Carter, 2001).

Several conceptual proposals have arisen depending on whether metacognition is understood as a unitary concept, such as a continuum from simple to more complex levels, or modular, as the interaction of independent functions (Semerari et al., 2003).

The unitary hypothesis has led to such proposals as the Self-Regulative Executive Function model (S-REF) by Wells & Matthews (1996), based on thought content (e.g., metacognitive beliefs on pathological worry) (Cartwright-Hatton & Wells, 1997) and Reflective Function, developed in an intervention by Fonagy et al. (2018). Several tools for evaluating metacognition have been based on unitary conceptions of the construct: the Reflective-Functioning Scale (Fonagy et al., 1998), the Assimilation of Problematic Experiences Scale (Stiles et al., 1992), or the Interpersonal Reactivity Index by Davis (1980), and the Metacognitions questionnaire (MCQ-30) by Wells & Cartwright-Hatton (2004) based on the S-REF model.

The modular hypothesis led to the Metacognitive Multi-Function Model (MMFM), which focuses more on mental functions and operations than content (Semerari et al., 2003). Metacognition is broken down into relatively independent subfunctions, which fits Flavell's original concept well. Independence of the metacognitive subfunctions may be observed, for example, in poor metacognitive capacity of oneself, but good performance with respect to others (Lysaker et al., 2011). There is a hierarchy, then, in which good execution in higher skills occurs along with adequate performance in more basic abilities. Furthermore, a distinction is made between the ability to discriminate concrete cognitions, such as identifying and interpreting emotional states and the behavior of others (social cognitions), and synthesizing abilities such as schemas predicting behavior (Lysaker et al., 2013). Metacognition would be the result of integrating both types of abilities (Bröcker et al., 2017b).

The modular hypothesis has also led to instruments like the Metacognition Assessment Scale (MAS; Semerari et al., 2003), its hierarchized version (MAS-A), ordered from simpler to more complex metacognitive tasks (Lysaker et al., 2005), and the Metacognition Assessment Interview (MAI; Pellecchia et al., 2015; Semerari et al., 2012). All of them evaluate metacognition through the narrative in interviews.

The Italian Metacognition Self-Assessment Scale (MSAS) by Pedone et al. (2017), based on the MMFM, is derived from the abovementioned MAS-A and MAI. The 18-item self-report, which began as a screening test for metacognitive abilities, makes data collection easier than interpreting the commonly used interviews, reduces their emotional charge (Bröcker et al., 2017b), and is more direct, not requiring a previous order to elicit metacognition (Lysaker et al., 2011). Although the MSAS originally subdivided metacognition into five abilities, only four factors were found: 1) Self-Reflectivity, referring to monitoring and integrating one's own mental states; 2) Critical Distance, or the ability to differentiate representations from each other and from reality (differentiation), and the ability to distance oneself from one's own thoughts, recognizing their subjectivity and the possibility that they may be false (decentration); 3) Mastery of coping strategies mediated by metacognitive abilities; and 4) Understanding Other Minds. These four factors cover abilities related to a person's own functioning, following descriptive areas related to the Self, Others and general management or coping (Faustino et al., 2019; Pedone et al., 2017).

Their application to disorders would enable design of interventions focused more on integrating metacognitive abilities than on traditional specific symptoms or thought content. Evaluation of metacognition from a modular conception (such as MSAS) would also enable individualized design of the intervention and suggest the most suitable format (e.g., individual or group). Although previous studies have related metacognitive abilities to different theoretical approaches, they have not contributed to a concrete ability, such as insight or metacognitive capacity, for reflecting on symptomatology (Beck et al., 2004). One of the main uses suggested for the MSAS is as a follow-up during intervention in the metacognitive processes proposed, such as those related to insight or awareness of the illness. This analysis would be a novelty with practical use in research on intervention, and particularly in psychotic disorders, for which the instrument was developed.

The MSAS, originally cross-validated in a general Italian population (Faustino et al., 2019; Pedone et al., 2017), supported the four factors mentioned above and one general factor (metacognition), with adequate fit in the confirmatory analysis. In a recent meta-analysis, Craig et al. (2020) mentioned that the MSAS metacognition self-report had been validated in an older population, whereas most validation studies focus mainly on university students. Faustino et al. (2019), in their Portuguese adaptation of the MSAS, performed an exploratory factor analysis in addition to other validity indices; the results fit the original MSAS structure, with reliabilities of .73 to .84 for the four factors in a general population and a clinical group. Except for this Portuguese version, as far as we know, this instrument has not been validated in any other language, and a Spanish adaptation of the MSAS would provide a screening tool for metacognitive abilities in our context. Such a screening instrument is useful for studying metacognitive processes simply and rapidly. Although this study is directed mainly at nonclinical populations, the instrument can also be applied to general and clinical populations and for selecting candidates for therapy or follow-up after completing intervention (Hausberg et al., 2012).

The general objective of this study was to validate the MSAS test in a Spanish-speaking general population and compare the four-factor structure with that of its authors (Faustino et al., 2019; Pedone et al., 2017). This objective was broken down into the following specific objectives:

Translate and adapt the Metacognition Self-Assessment Scale (MSAS) into Spanish.


Analyze the psychometric characteristics of the MSAS:

Reliability, using internal consistency and temporal stability indicators.

Validity evidence based on the internal structure, using Confirmatory Factor Analysis to test the goodness of fit of the original four-factor model.

Measurement invariance related to sex (male and female) and age (young and older adults).

Study criterion validity indicators, through their concurrent relationship with the Cognitive Insight Scale by Beck et al. (2004).

Explore the validity of known groups based on the sensitivity of the MSAS scales in discriminating between participants from the general population and those who had a psychopathological history, were under psychological treatment, or who had been prescribed psychotropic medication.

Method

Participants

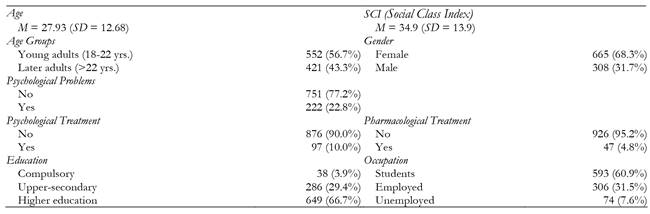

Although 1039 participants were originally contacted by accidental sampling of the general population, after filtering, the final sample comprised 973 participants aged 18 to 86 (M = 27.93; SD = 12.68), of whom 308 were men (31.7%) and 665 were women (68.3%). For analytical purposes, the sample was divided according to the median age (22 years), differentiating between young adulthood (18 to 22 years) and later adulthood (23 years or older), consistent with the classification used by the Massachusetts Institute of Technology (Simpson, 2018). The participants were mostly single (753; 77.4%), followed by married/living together (180; 18.5%), divorced/separated (32; 3.3%) and widowed (8; 0.8%). The weighted mean of the Social Class Index (Hollingshead, 1975) was 34.94 (middle class; SD = 13.9; range: 11 to 81), with a large proportion of students (60.9%) and employees (25.2%). The sample description is given in Table 1 under Results. The inclusion criteria were being at least 18 years of age and a native Spanish speaker. The exclusion criteria were based on positive answers on the Moritz, Favrod, et al. (2013) control scale and/or scoring below five on the EPI (Eysenck & Eysenck, 1990) sincerity scale (n = 68). There were no statistically significant differences in age (t(1037) = -.917, p = .363) between participants discarded and included in the study, but there were differences by sex (χ2 (1, 1039) = 9.759, p = .002): a higher percentage of males was excluded (8.3% vs. 3.7%).
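The exclusion rule described above can be illustrated with a minimal sketch. It assumes that control-scale answers are coded as booleans (True for "yes") and that the sincerity score is a number; the function name and the example participants are hypothetical and are not taken from the study data.

```python
def is_excluded(control_answers, sincerity_score, min_sincerity=5):
    """Return True if a participant would be filtered out.

    control_answers: iterable of booleans, True meaning a "yes" on a control item.
    sincerity_score: score on the sincerity scale.
    """
    # Any positive control answer, or a sincerity score below five, excludes.
    return any(control_answers) or sincerity_score < min_sincerity


# Hypothetical participants: (control-scale answers, sincerity score)
participants = [
    ([False, False, False, False], 7),  # kept
    ([False, True, False, False], 8),   # excluded: positive control answer
    ([False, False, False, False], 4),  # excluded: sincerity below five
]
kept = [p for p in participants if not is_excluded(*p)]
```

The "and/or" wording of the criterion is read here as a logical OR, so failing either check is enough for exclusion.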

Instruments

Basic sociodemographic information record sheet

These records were used to collect the study code, sex, date of birth, marital status, occupation, education, history of psychological problems, and psychological treatment/medication.

Metacognition Self-Assessment Scale (MSAS)

The MSAS (Pedone et al., 2017) is an 18-item self-report measure, which can be administered in 10-15 minutes. It is answered on a five-point Likert scale (1 = never, 5 = always), giving a total score range of 18-90 points, where higher scores indicate higher metacognitive performance. It has four factors. Self-Reflexivity (e.g., 3A “I am aware of what are the thoughts or emotions that lead my actions”), Critical Distance (e.g., 6A “I can clearly perceive and describe my thoughts, emotions and relationships in which I am involved” [differentiation] or 3D “I can describe the thread that binds thoughts and emotions of people I know, even when they differ from one moment to the next” [decentration]), and Mastery (e.g., 1D “I can deal with the problem voluntarily imposing or inhibiting a behavior on myself”) have five items each, while Understanding Other Minds has three (e.g., 4D “When problems are related to the relationship with the other people, I try to solve them on the basis of what I believe to be their mental functioning”). The Cronbach's alphas found by its authors ranged from .72 to .87.
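As an illustration of the scoring range, the following minimal sketch sums 18 responses into a total score. It assumes that the total is a plain sum of raw responses, which is what the stated 18-90 range implies; the function name is hypothetical, and subscale scoring is omitted because the full item-to-factor assignment is not listed here.

```python
def msas_total_score(responses):
    """Sum 18 Likert responses (each 1-5) into a total between 18 and 90."""
    if len(responses) != 18:
        raise ValueError("the MSAS has 18 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    return sum(responses)


lowest = msas_total_score([1] * 18)   # all items answered "never"
highest = msas_total_score([5] * 18)  # all items answered "always"
```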

Beck Cognitive Insight Scale (BCIS)

The BCIS (Beck et al., 2004), validated in Spanish by Gutiérrez-Zotes et al. (2012), is a self-report on cognitive insight or metacognitive capacity for reflecting on symptomatology. It has 15 Likert-type items with four answer choices rated for agreement from 0 to 3. It measures Self-Reflectiveness (R) and Self-Certainty (C) factors, and provides a Cognitive Insight index (CI = R - C). The internal consistency scores were .68 (R) and .60 (C) (Beck et al., 2004), and .59 (R) and .62 (C) (Gutiérrez-Zotes et al., 2012). In this study, the internal consistency indices were α = .638, .694, and .656 for R, C and CI, respectively.
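The composite index can be written out as a short sketch. The function takes the two subscale totals as inputs; the function name and the example totals are hypothetical.

```python
def cognitive_insight_index(self_reflectiveness, self_certainty):
    """Composite index CI = R - C, computed from the two subscale totals."""
    return self_reflectiveness - self_certainty


# Hypothetical subscale totals for one respondent.
ci = cognitive_insight_index(self_reflectiveness=15, self_certainty=6)
```

Because Self-Certainty is subtracted, a respondent who is highly confident in their own judgments obtains a lower index for the same level of Self-Reflectiveness.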

Eysenck Personality Inventory (EPI), Sincerity Subscale

The EPI Sincerity Subscale (Eysenck & Eysenck, 1990) comprises nine yes/no items about daily life, and is a widely used index of social desirability with test-retest reliabilities over .75.

Moritz Control Scale

The answer control scale by Moritz, Favrod, et al. (2013) was used. It consists of four items in yes/no format based on common myths about psychosis, manifestations of delirium, split personality, or very rare but widespread symptoms, e.g., “One time I was abducted by aliens.” This short scale does not include the psychometric data in the original study, but it was designed for Internet-based psychosis studies (Moritz, Van Quaquebeke, et al., 2013). Any positive response suggests that the participant should be excluded.

Procedure

To adapt the MSAS to Spanish, after receiving permission from its authors, it was translated into Spanish by an expert clinical psychologist. A native expert made the back-translation into English. A second expert in both cultures and the researchers revised the resulting adaptation. As an additional reference, the Italian version provided by the authors of the MSAS (unpublished) was analyzed and compared to the Spanish version by an expert in both languages.

Students in higher courses in Psychology were invited to participate in this study, and they in turn, invited family, friends or partner (snowball sampling) following clear instructions, resulting in a heterogeneous sample of the general population. Psychopathological history and whether they were on psychological treatment/psychotropic medication were based on participant answers, but confirmation of the type of pathology was not requested, and was accepted as received as usual in the general population. Participants were ensured confidentiality and gave their informed consent. Test-retest data (one month between measurements) were collected online from the document sent to the participants. Students who participated and recruited four additional participants for the study received academic credit. An alternative procedure for extra credit was available to those who did not participate in the study. All the participants were informed of the time it would take to complete the tests (about 50 to 60 minutes) and that sincerity would be controlled for (there were no lost data). The study was approved by the Andalusian Biomedical Research Ethics Coordinating Committee (2795-N-21).

Data Design and Analysis

Data were collected in a selective test-retest study. First, a descriptive analysis was done of the total score, and normality was tested with skewness, kurtosis, the Kolmogorov-Smirnov test, and Mardia's multivariate normality test.

Score reliability was analyzed for internal consistency with Cronbach's alpha and McDonald's omega, as recommended by Viladrich et al. (2017). Consistency is considered excellent when values exceed .90, good above .80, acceptable over .70, and questionable below that (George & Mallery, 2003). Pearson's test-retest correlation and the two-way mixed, average-score Intraclass Correlation Coefficient (ICC) were used to examine temporal stability. ICC values are defined as fair from .40 to .59, good from .60 to .74, and excellent above .75 (e.g., Cicchetti, 1994).
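For readers unfamiliar with the coefficient, the following minimal sketch computes Cronbach's alpha with the standard formula and applies the interpretation bands quoted above. It is not the SPSS or JASP routine used in the study; the function names and the toy data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: list of respondents, each a list of item scores of equal length."""
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def label_consistency(alpha):
    """Interpretation bands as quoted in the text (George & Mallery, 2003)."""
    if alpha > 0.90:
        return "excellent"
    if alpha > 0.80:
        return "good"
    if alpha > 0.70:
        return "acceptable"
    return "questionable"


# Toy data: two items that rise together across three respondents.
toy = [[1, 2], [2, 3], [3, 4]]
alpha = cronbach_alpha(toy)
```

In the toy data the two items are perfectly correlated with equal variances, so the coefficient reaches its upper bound of 1.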

Evidence of the internal data structure was obtained from the item correlation matrix by Confirmatory Factor Analysis (CFA) using the JASP MPLUS emulation of robust Unweighted Least Squares (ULS) and standardization of latent and observed scales. There is some agreement in considering the chi-square test of significance insufficient for CFA model fit due to its tendency to reject models when the samples are large (e.g., Ropovik, 2015). Therefore, various authors have recommended a set of indices for evaluating model fit, including relative indices such as the Comparative Fit Index (CFI) along with the Bentler-Bonett Non-Normed Fit Index (NNFI) and Normed Fit Index (NFI). A model is considered to be good when these values are equal to or greater than .95 (e.g., Brown, 2015). The Root Mean Square Error of Approximation (RMSEA) is the most prominent absolute fit index, both for its point estimate and the 90% Confidence Interval (CI90). A model is considered adequate when its value is equal to or less than .05 (e.g., Herrero, 2010). Another comparative index is the Expected Cross Validation Index (ECVI), where lower values suggest better model fit (MacCallum & Austin, 2000).
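As an illustration of how the RMSEA point estimate relates to the chi-square statistic, the sketch below uses the common textbook formula with N - 1 in the denominator (some programs use N instead). It does not reproduce the JASP output; the function names and input values are hypothetical.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """RMSEA point estimate from chi-square, degrees of freedom, sample size."""
    # The numerator is floored at zero when chi-square is below its df.
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def meets_cutoffs(cfi, nnfi, nfi, rmsea_value):
    """Cutoffs quoted in the text: relative indices >= .95 and RMSEA <= .05."""
    return min(cfi, nnfi, nfi) >= 0.95 and rmsea_value <= 0.05


example = rmsea(chi2=200.0, df=100, n=101)  # hypothetical inputs
```

Because the sample size appears in the denominator, the same excess of chi-square over its degrees of freedom yields a smaller RMSEA in a larger sample, which is one reason the index is preferred to the chi-square test with large samples.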

Multiple-group CFAs were run, sequentially testing for configural (factor structure), metric (factor loadings), scalar (intercepts), and strict (residual variances) equivalence (Byrne, 2008; Cheung, 2008) to assess measurement invariance related to sex and age, where ΔCFI < .01 relative to the configural model implies that the invariance assumption still holds (Byrne, 2008; Cheung & Rensvold, 2002).
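The ΔCFI decision rule can be sketched as follows, taking the configural model as the reference for the more constrained models. The CFI values in the example are hypothetical and are not the values reported in Table 6; the function name is also an assumption.

```python
def invariance_holds(cfi_by_model, threshold=0.01):
    """Compare each constrained model's CFI against the configural baseline.

    cfi_by_model: dict with the keys 'configural', 'metric', 'scalar', 'strict'.
    Returns a dict mapping each constrained model to True when its drop in
    CFI relative to the configural model is smaller than the threshold.
    """
    baseline = cfi_by_model["configural"]
    return {
        name: (baseline - cfi) < threshold
        for name, cfi in cfi_by_model.items()
        if name != "configural"
    }


# Hypothetical CFI values for one grouping variable.
result = invariance_holds(
    {"configural": 0.960, "metric": 0.958, "scalar": 0.955, "strict": 0.945}
)
```

In this hypothetical case metric and scalar invariance would be retained, while the strict model would exceed the threshold.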

Evidence of relationships with external variables was analyzed using Pearson's correlations between the MSAS factors and the BCIS, a scale related to metacognition. The independent samples t-test was used for analysis of the evidence of discrimination between samples. These validity indicators were interpreted with the Cohen's d effect size, interpreted as negligible (< .10), small (< .30), medium (< .50), or large (≥ .50).
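A minimal sketch of the effect size computation follows. It assumes the pooled-standard-deviation form of Cohen's d for two independent groups and applies the interpretation bands quoted above; the function names and the toy scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def label_effect(d):
    """Interpretation bands as quoted in the text, applied to the absolute value."""
    size = abs(d)
    if size < 0.10:
        return "negligible"
    if size < 0.30:
        return "small"
    if size < 0.50:
        return "medium"
    return "large"


# Toy scores: equal variances and a one-point mean difference.
d = cohens_d([2, 4, 6], [1, 3, 5])
```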

Data analysis was performed with IBM SPSS Statistics (Version 26), JASP (Version 0.14.1; JASP Team, 2020) and WebPower (Zhang et al., 2021).

Results

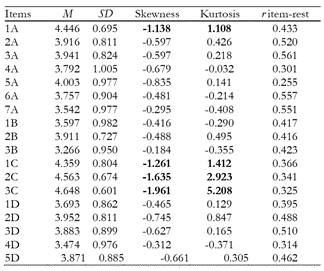

Table 2 shows the descriptive data of the scale items, emphasizing extreme skewness and kurtosis, mainly in Item 1A and the items in Section C. The correlations between the items varied from .255 to .561.

Table 2. Descriptive Statistics of MSAS Items.

Notes: For all the items: N = 973; Range 1-5; Skewness SE = 0.078; Kurtosis SE = 0.156; Extreme values are in bold type.

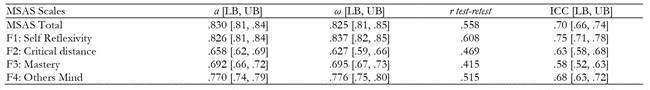

Reliability of the scores

Test-retest reliability (Table 3) with a one-month measurement interval, although statistically significant in all cases, did not reach the .70 criterion, while the ICCs were always over the minimum of .50 and remained moderate. Internal consistency showed a good Cronbach's alpha (> .80) for the MSAS total score and for the Self-Reflexivity subscale, and an acceptable one for the Understanding Other Minds subscale (> .70), but it was inadequate for the Critical Distance and Mastery scales. Very similar results were found for McDonald's omega, confirming the low consistency of the Critical Distance and Mastery subscales.

Internal structure

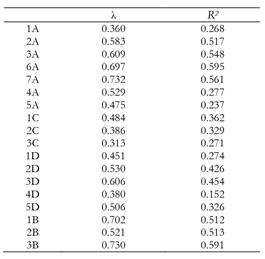

Unweighted Least Squares was used, since the Mardia test for multivariate normality was significant in both stages (p < .001), showing a violation of the normality assumption. The CFA (Table 4) found robust factor loadings above .50 except for items 1A, 5A, 1C, 2C, 3C, 1D, and 4D.

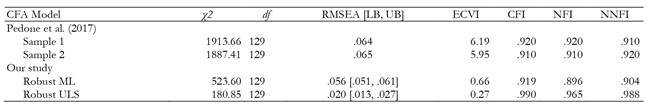

The absolute fit indices are presented in Table 5. The χ2/df ratio was less than 2, the root mean square error of approximation fulfilled the acceptance criterion for fit (RMSEA < .06), and all the relative fit indices were above the acceptance criteria (CFI, NFI and NNFI > .95), demonstrating adequate fit of the model to the sample data. When the same estimation method as in the original study was used (robust ML), the results were very similar to those of Pedone et al. (2017): the RMSEA criterion (< .060) was met, whereas it was not in the Pedone et al. model, but the relative fit indices (CFI, NFI and NNFI) were all below .95. With robust ULS estimation, all the fit indices improved. This estimation method is recommended when normality assumptions are not met and the items are ordinal, as they are in our case. In view of the difficulty of evaluating their adequacy, some authors (e.g., Shi & Maydeu-Olivares, 2020) recommend using comparative indices, such as the SRMR (Standardized Root Mean Square Residual), that are not as affected by the estimation method. We found an SRMR of .046 with ML and .048 with ULS, both of which are within the recommended range of .00 to .08 (Hu & Bentler, 1999). Unfortunately, we do not know the values of this index in the original study by Pedone et al. (2017), but we assume that they would be similar to ours, given the similarity between our other indices and theirs.

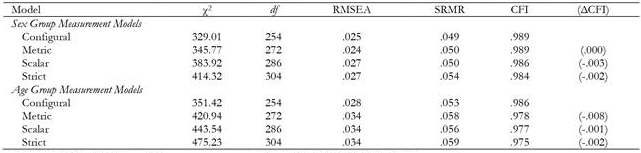

Measurement Invariance

A multigroup analysis sequentially tested configural, metric, scalar, and strict invariance models by simultaneously evaluating the fit for male and female samples on the one hand, and young and older adult samples on the other. Using the fit of the configural model as the reference against which the more constrained equivalence models were compared (Byrne, 2008), all the fit indices indicated good fit of the four-factor Pedone model (Table 6), supporting equivalent solutions for men and women, and for young and older adults.

Table 6. Model Comparisons for Invariance across Sex and Age.

Note. According to Cheung and Rensvold (2002), ΔCFI < 0.01 implies that the invariance assumption still holds.

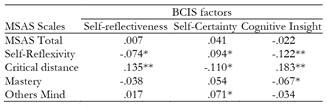

Relation with external variables criterion

Positive correlations were found between the MSAS Self-Reflexivity factor and the BCIS Self-Certainty factor, and between the Critical Distance and Self-Reflexivity factors (Table 7). Furthermore, a weak negative correlation was found between the BCIS Cognitive Insight Index and Self-Reflexivity. There was also a small negative correlation between the Critical Distance and Self-Certainty factors. The MSAS Critical Distance factor is the only one that had significant correlations with the three BCIS components. Many of the correlations were not statistically significant and none of them reached medium values.

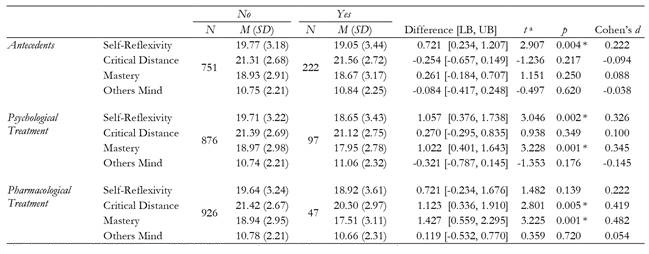

Known-group validity

Comparison of means on the MSAS scales with Student's t-tests (Table 8) showed that the Self-Reflexivity subscale significantly differentiated participants who had a history of psychological problems or were under psychological treatment from those who did not, and the Mastery scale significantly discriminated those who were under psychological treatment or medication from those who were not. Finally, the Critical Distance scale showed significant differences between those who were on medication and those who were not. The Understanding Other Minds scale did not significantly discriminate between participants on any of these three criteria. All significant differences showed small to medium Cohen effect sizes.

Table 8. Discriminant Validity of MSAS Scales according to Antecedents of Psychological Problems, Psychological and Pharmacological Treatments.

a Independent Samples Student’s t tests (df = 971). All comparisons meet the equal variance requirement.

*Significant difference with the Bonferroni correction for multiple comparisons (lowering the α level from 0.05 to 0.0125)
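The corrected significance level in the table note follows from dividing the nominal α by the number of comparisons. The sketch below assumes four comparisons, which is inferred from the reported change from 0.05 to 0.0125 rather than stated explicitly; the function names and p values are hypothetical.

```python
def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance level under the Bonferroni correction."""
    return alpha / n_comparisons


def is_significant(p_value, alpha=0.05, n_comparisons=4):
    """True when the p value falls below the corrected level."""
    return p_value < bonferroni_alpha(alpha, n_comparisons)


corrected = bonferroni_alpha(0.05, 4)
```

Under this rule a hypothetical p value of .02 would be significant at the nominal .05 level but not after correction.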

Discussion

The term metacognition refers to a set of various cognitive processes, from social cognition and theory of mind, to self-regulation processes, attributions, beliefs or complex attentional biases (Andreou et al., 2018; Bröcker et al., 2017a; Faustino et al., 2019; Inchausti et al., 2019; Wells & Capobianco, 2020). The modular hypothesis of metacognition focuses on mental functions and operations in which higher abilities depend on more basic abilities for their integrated functioning (Semerari et al., 2003). This hypothesis proposes factors related to monitoring and integrating one's own mental states (Self-Reflectivity); the ability to differentiate representations and distinguish them from reality (Differentiation); the ability to distance oneself from one's own thoughts, or decentration (Critical Distance); Mastery of coping strategies; and Understanding Other Minds. This study focused on a Spanish adaptation and validation of the Metacognition Self-Assessment Scale (Pedone et al., 2017) from this perspective. Self-assessment of metacognitive functions is a novelty in psychological intervention, and therefore, the instrument will be useful for screening and follow-up of these specialized processes. Before its use and validation with clinical samples, this study focused on self-evaluation of metacognition in the general population from a modular conception. The original MSAS authors used a convenience sample of the general population, although afterwards the final selection of participants was randomized. The Portuguese validation (Faustino et al., 2019) also used convenience sampling. Therefore, the participant selection procedures are comparable across these three validations.

In the descriptive analysis of the MSAS scales, the means tended to be considerably higher than in the original version. The main difference between the two studies seems to be the sample used. The original team chose an older general population, while this study selected a mainly university sample, and age is one of the most influential variables in the differences between metacognition self-reports (Craig et al., 2020).

In the MSAS score reliability study, the internal consistency data were similar or even better than in the original or the Portuguese (Faustino et al., 2019) studies. Even so, certain variations were found that could reflect the modular nature of metacognition (Lysaker et al., 2011), leading to intrasubject differences between items on simple and complex abilities. The test-retest reliability of the scores was adequate, similar to the Portuguese version.

A confirmatory factor analysis was done for validation instead of exploring the original structure, as there are already sufficient indicators in the literature, from both the original MSAS and Portuguese versions. Evidence of internal structure was similar to the original version of the MSAS as well as the Portuguese version with fit indices within expected criteria. However, Faustino et al. (2019) used exploratory factor analysis for validation. Our results for the absolute indices were more favorable (Chi square, RMSEA and ECVI) than found by Pedone et al. (2017), and somewhat lower in the relative indexes (CFI, NFI and NNFI).

In this study, some items saturated below .50 and had poor communalities during testing. Scores in Mastery showed lower saturation than the rest of the factors and poor internal consistency. Scores on the Self-Reflexivity factor had the most explained variance, as it did in the original study, although the other factors had higher explained variance in this study. It is possible that the higher education of the present sample may have made the use of metacognitive abilities more complicated, thereby making the factor structure more complicated as well (Craig et al., 2020). The correlations between factors were higher than in the original study, and in both cases Self-Reflexivity was noteworthy.

The Understanding Other Minds factor, as in the Portuguese version, showed the lowest correlations with the rest. This is consistent with the differences found between the self-reflexive metacognitive skills and the others (Lysaker et al., 2011). Differences between the clinical and general populations have been found with the MSAS (Faustino et al., 2019) and between Binge Eating Disorder patients with and without obesity (Aloi et al., 2020) in some factors, but no differences in Understanding Other Minds. These results are in line with the one-concept (metacognition) MMFM, in which the factors would correlate without excessive overlap of the subcomponents.

The results of the multigroup confirmatory factor analysis showed that the same factorial solutions were invariant across sex (male versus female) and age (younger versus older adult), providing evidence of configural, metric, scalar, and strict invariance. This means that the behavior of these sex and age groups can be assumed to be equivalent in Pedone's four-factor model.

Regarding evidence of its relationship to the external variables criterion, the correlations between the BCIS (Beck et al., 2004) and MSAS scores do not seem very strong, despite the closeness of the Cognitive Insight and Metacognition concepts (Bröcker et al., 2017b). The Critical Distance factor suggests a slight connection with the BCIS factors (and BCIS composite index), as well as cognitive insight with Self-reflexivity. This is coherent with the theoretical framework of the BCIS, since the definition of cognitive insight fits the concept of Critical Distance (Gutiérrez-Zotes et al., 2012, p. 3), which is why the BCIS scale was used. A negative correlation was also found between Critical Distance and Self-Certainty. Greater capacity for distancing thought means less confidence in its absolute veracity. One possible explanation is that values were not very consistent in either the original (Beck et al., 2004) or the Spanish validation of this instrument (Gutiérrez-Zotes et al., 2012). Its designers interpreted this as being due to the small number of items.

The validation of the Portuguese version of the MSAS did not show strong relationships either, although they were significant, between the MSAS and other instruments, such as the Metacognitions Questionnaire (MCQ; Wells & Cartwright-Hatton, 2004) and the Interpersonal Reactivity Index (Davis, 1980). The modular conception of metacognition is so specific that the main relationships between the scores on the instruments may be observed more with specific factors than with the overall mean (Craig et al., 2020), in addition to the abovementioned patient/non-patient condition in the specific case of the BCIS.

In clinical samples, a relationship has recently been observed between higher levels of metacognitive ability and clinical insight, depression and intellectual capacity (Wright et al., 2021). This relationship may have been masked in this study, as it dealt only with the general population and did not control for any particular variable. Another circumstance to be kept in mind is that the correlations between online metacognition tools and personal interviews are usually very poor (Craig et al., 2020).

Participants with a psychopathology, those who were currently under psychological treatment, and those who had been prescribed psychotropic medication were compared as an approach to evidence of discriminant validity. In all positive cases (self-informed), without considering diagnosis or type of psychotropic medication, the results were statistically significant, in that those with a psychopathological history or under psychological treatment had lower scores in Self-Reflexivity. Results were also observed in the Mastery factor for treatments, with a medium effect size in the case of psychotropic medication, and in Critical distance for psychotropic medication (medium effect size).

This study had a series of limitations that should be taken into consideration. In the first place, the sample was recruited by accidental sampling, in which a large part of the participants were university students, while other populations were not equally represented. However, the sample was very large, and a control system was applied to answers given their online application. In the second place, self-evaluation capacity may have been evaluated more than metacognition (Hausberg et al., 2012). If metacognition is understood as reflection of thought, the same ability that is being evaluated is necessary to answer the scale. This must be kept in mind as a limitation of the evidence of validity and usefulness of the results, and it would be more conclusive to test the instrument along with other evaluations of these metacognitive processes. In brief, although the use of self-reports for evaluating metacognition has been questioned (Craig et al., 2020), it could suggest the importance of following a different strategy during evaluation: when capacities are minimal, the self-report could be less useful, while if some capacities are intact, and precisely from the modular perspective, the different factors could highlight what factors or components have been retained and which have been strengthened, and further, verify the development of those factors with a follow-up.

Although the adaptation was adequate, the possible influence of cultural nuances in the results should be considered, as well as the influence of education or IQ (Birulés et al., 2020). Some of these may have influenced the reliability of some factors, and this should be analyzed in later studies. It would be very valuable for future research to study the relationship between socio-educational level and metacognitive performance, and also to apply the MSAS in clinical samples to be able to monitor the evaluation of these processes.

Finally, this study was designed for application to a general population, and therefore, participants with a psychological problem, under psychological treatment or medication may not have been within the validity criteria. This would partly explain the low correlations between the MSAS and BCIS scales. Therefore, the evidence of internal structure should be corroborated with a clinical population with different diagnoses, evolution and treatments.

In conclusion, the Spanish adaptation of the MSAS showed sufficient indications of validity to be used in the general population in Spanish-language contexts, and for evaluating metacognition and its subcomponents. It is a short instrument, easily filled out, and suitable for monitoring changes and evolution during intervention, and is worth testing in future studies.