
Educación Médica

Print version ISSN 1575-1813

Educ. méd. vol.7 no.3 jul./sep. 2004

assessment and evaluation

Associate Professor

Keywords: Clinical Performance Assessment, Rubric,

Authors: Kwon, Hyungkyu; Lee, Giljae; Lee, Eunjung

Institution: Kyungsung University (Kwon, Lee Giljae) KAIST (Lee, Eunjung)

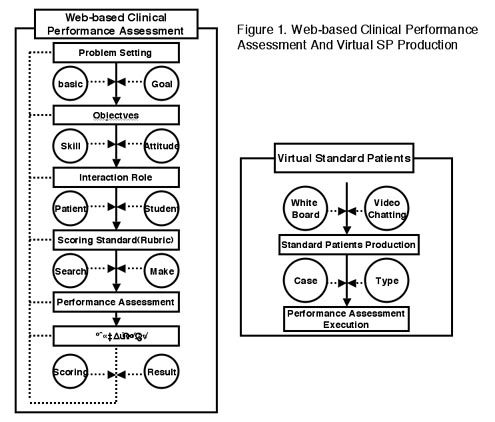

Summary: Web-based Clinical Performance Assessment Model Development Kwon, HyungKyu Lee, KilJaeLee, Lee EunJung. The clinical performance assessment using standardized patients is emphasized for measuring and evaluating clinical practice and performance capabilities of students objectively. However, it lacks acceptable standardized criteria for performance assessment and has the problems of validity, reliability, and fairness due to the differences of various evaluators and evaluation institutions. This research utilizes the rubric, the evaluation criterion for clarifying the level of outcomes in the process of performance assessment. Through the rubric, instructors can decide the performance standard for student's performance based on the data from learning outcomes and can obtain the guidelines for what to evaluate and how to score. And learners can get not only the role of self monitoring for study but also the motivation to achieve the goal. Web-based clinical performance assessment model clarifies objectives(skills, attitudes) for prepared problems and clarifies interaction roles of patients and students. The clarified interaction roles are practiced applying the modes of performance assessment for the evaluation criterion and evaluation method. The automatic/manual scoring is done based on the rubric. Also, the tool for the production and use of virtual standard patient is supported on the web environment. Rubrics for the produced standard patients and clinical performance assessment are accumulated in database and can be used in various synchronous/asynchronous clinical performance assessment. Learners can experience many clinical skills under various conditions and circumstances through clinical performance assessment.
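Rubric-based scoring can be sketched as a weighted lookup. Each criterion has performance levels worth a number of points, and an evaluator (or an automatic rule) picks one level per criterion. The score is then the weighted share of the maximum. The criteria, weights, level names and the function `rubric_score` below are hypothetical. The abstract does not describe the model's actual rubric structure or scoring formula.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One row of a hypothetical analytic rubric."""
    name: str
    weight: float            # relative importance of this criterion (assumed)
    levels: dict[str, int]   # performance-level descriptor -> points awarded


def rubric_score(rubric: list[Criterion], ratings: dict[str, str]) -> float:
    """Weighted percentage score, given the level chosen for each criterion.

    `ratings` maps each criterion name to a level descriptor; the same lookup
    works whether the level was selected manually or by an automatic rule.
    """
    earned = 0.0
    possible = 0.0
    for criterion in rubric:
        earned += criterion.weight * criterion.levels[ratings[criterion.name]]
        possible += criterion.weight * max(criterion.levels.values())
    return 100.0 * earned / possible


# Hypothetical two-criterion rubric for a standardized-patient encounter.
rubric = [
    Criterion("history taking", 2.0, {"absent": 0, "partial": 1, "complete": 2}),
    Criterion("communication", 1.0, {"poor": 0, "adequate": 1, "good": 2}),
]
print(rubric_score(rubric, {"history taking": "complete", "communication": "adequate"}))
```

Here the candidate earns (2×2 + 1×1) of a possible (2×2 + 1×2) weighted points, which is 5/6 of the maximum, or about 83.3%.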

Developing and Validating an Objective Structured Clinical Examination Station to Assess Evidence-Based Medicine Skills

Keywords: Evidence-based medicine

Authors: Gruppen, LD; Frohna, JG; Mangrulkar, RS; Fliegel, JE

Institution: University of Michigan

Summary: Objectives: Numerous medical education organizations have identified skills in Evidence-Based Medicine (EBM) as required competencies for students and residents. Some tools exist for assessing EBM knowledge, but few assess competence in EBM performance. We developed a computer-based objective structured clinical examination (OSCE) station to assess students' EBM skills and to evaluate the effects of curricular changes.

Methods: The web-based case requires students to read a clinical scenario and then 1) ASK a specific clinical question using the Population/Intervention/Comparison/Outcome (PICO) framework, 2) generate appropriate terms for a SEARCH of the literature, and 3) SELECT and justify the most relevant of three provided abstracts to answer the clinical question.

Scores are computed for each of the three sections and overall.

Results: Two cohorts of third-year medical students were compared. The 2002 cohort had a minimal EBM curriculum whereas the 2003 cohort had an expanded, longitudinal EBM curriculum. Our assessment documented statistically and pragmatically significant effects.

| Item | Class of 2002 (N=140) | Class of 2003 (N=157) | Effect size |
|---|---|---|---|
| ASK | 22.7 | 26.0ᵃ | 0.59 |
| SEARCH | 13.7 | 15.3ᵃ | 0.52 |
| SELECT Abstract | 22.3 | 23.4 | 0.10 |
| Total Score | 58.7 | 64.8ᵃ | 0.46 |
| % passing all three parts | 29% | 53%ᵃ | 0.48 |

ᵃ p<0.01

Conclusions: Using this validated methodology, we were able to document a significant change in performance in two of three skills on the EBM station. We attribute this improvement to the changes made in our curriculum. This EBM assessment tool has also been used for first year residents and is being evaluated currently in a multi-institutional validation study.
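The abstract reports effect sizes without naming the measure. A common choice for comparing two cohorts is Cohen's d: the difference in means divided by the pooled standard deviation. The sketch below assumes that measure. The abstract gives no standard deviations, so the second function only shows what pooled SD a reported d would imply. Both function names are illustrative.

```python
import math
from statistics import mean, variance


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Standardized mean difference (b minus a) divided by the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (
        n_a + n_b - 2
    )
    return (mean(group_b) - mean(group_a)) / math.sqrt(pooled_var)


def implied_pooled_sd(mean_a: float, mean_b: float, d: float) -> float:
    """Back out the pooled SD implied by two means and a reported Cohen's d."""
    return (mean_b - mean_a) / d


# ASK row: 22.7 vs 26.0 with d = 0.59, *if* the reported effect size is Cohen's d.
print(round(implied_pooled_sd(22.7, 26.0, 0.59), 1))
```

Under that assumption, the ASK row (a 3.3-point difference with d = 0.59) would imply a pooled SD of about 5.6 points. The pass-rate row compares proportions rather than means, so a different index, such as Cohen's h, may have been used for it.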

Assessment of academic staff evaluation program

Keywords: assessment, faculty member, program of evaluation

Authors: Rahimi, B.; Zarghami, N.

Institution: Educational Development Center, Oromiyeh University of Medical Sciences

Summary: Background: The teaching capability of academic staff is significantly related to their awareness of the educational process and the evaluation program. Academic staff need to be aware of their own teaching capability and able to continuously improve the quality of their practice.

Aim: To determine an evaluation program for academic staff.

Summary of work: The subjects of this analytical descriptive study were 70 of the 150 academic staff of Urmia University of Medical Sciences, namely those who responded to the questionnaire. The researchers first prepared a questionnaire with closed- and open-ended questions about the evaluation process. They piloted it to improve its reliability and validity, then distributed and collected it.

Summary of results: Of the responding academic staff, 64% were male and 36% female. 35.65% reported no knowledge of an existing evaluation process during teaching. 44.33% reported a lack of commitment to implementing an evaluation process. 47.19% reported a lack of commitment by the authorities and disadvantages of evaluation. 63.5% of academic staff agreed to be evaluated at the end of courses, and 70% agreed to take part in educational workshops as a feedback system.

Conclusion: It is speculated that evaluation could improve teaching skills.

Explicit transferable skills teaching: does this affect student attitudes or performance in the first year at Medical School?

Keywords: Transferable skills, attitudes, performance

Authors: Whittle, S. R. & Murdoch-Eaton, D. G.

Institution: University of Leeds

Summary: Recent changes in UK school curricula have introduced optional Key Skills units, leading to qualifications in Communication, IT and Use of Number. These are designed to teach transferable skills in the context of students' A level courses. Approximately 20% of the undergraduate intake at Leeds University Medical School possess some of these qualifications. This study was designed to detect differences between students with and without explicit skills qualifications. On arrival, students completed a questionnaire on a range of 31 transferable skills. It asked how often they had practised each skill in the previous 2 years and how confident they felt about their abilities in it. Studies are underway to determine any differences in performance between the two groups in course components with clear transferable-skill objectives. Questionnaires were completed by 478 students (99 with Key Skills qualifications, 279 without). Students with Key Skills qualifications felt that they had had more opportunities to practise information handling (p=0.01) and IT skills (p=0.02). They also felt more confident in these skills (information handling p=0.04, IT skills p<0.001). There was weaker evidence that they rated their technical/numeracy skills more highly (p=0.06). Students who have received specific skills teaching show greater confidence in some skills. There also appears to be a positive relationship between confidence and opportunities to practise these skills. However, initial results from an essay-writing module suggest that students with Key Skills qualifications do not perform better, although differences may emerge later. Should medical schools encourage students to achieve these qualifications?

The Use of Video to Evaluate Clinical Skills in Paediatrics

Keywords: video, OSCE, assessment, paediatrics

Authors: Round, J.

Institution: St. George's Hospital Medical School

Summary: Background: Objectively testing examination skills in paediatrics raises unique problems. General paediatric cases (as seen by GPs or non-specialists) are difficult to use because signs change rapidly and disappear. Children quickly become tired, uninterested or non-compliant, so the usefulness of a particular station changes during the exam. Lastly, children need feeds and naps, which do not fit an exam schedule. Video has been used in the examination of psychiatric patients and of communication skills. To increase the reliability and face validity of paediatric examination stations, we developed video stations showing children with visible signs. This abstract details their content and usefulness.

Methods: Video stations were used in a large (n=186) undergraduate clinical OSCE; the results are given below. Each station consisted of 60-90 seconds of edited footage, accompanied by an instruction sheet and written questions. The footage showed children with acute problems (bronchiolitis, croup) or undergoing developmental assessment. Performance on the video station was compared with overall OSCE performance. Student opinions were obtained at interview.

Results: Students scored a mean of 13.2/20 (SD 2.1) on the video station (OSCE mean 14.2, SD 2.8). Performance on the video station correlated with the overall OSCE result (r=0.32), compared with a mean correlation of r=0.38 for the stations as a whole. Students felt that the station was fair, although many admitted to a temporary shock at the new assessment method.

Discussion: This video station compares well with other forms of examination assessment in paediatrics. Its quality may improve as students become more familiar with this type of station.

QFD and continuing medical education

Keywords: QFD matrix, continuing medical education

Authors: Ruiz de Adana Perez R, Agrait Garcia P, Carrasco Gonzalez I, Duro Martinez JC, Rodriguez Vallejo JM, Millan Nuñez Cortes J

Institution: Agencia Laín Entralgo

Summary: Quality function deployment (QFD) is a way to listen to customers and understand exactly what they expect. It then uses a deductive system to find the best way to satisfy customer needs with the available resources. QFD is a process design methodology for guaranteeing that the voice of the customer is heard during the planning, design and implementation of a product or service. Listening to, understanding, acting on and translating what the customer tells us is the philosophical heart of QFD.

Objectives: To implement a continuing medical education planning matrix at the Laín Entralgo Agency based on the quality function deployment model. To analyze the QFD matrix in order to identify and prioritize opportunities for improvement in the continuing medical education process.

Methods and participants: A working group of six experts in planning continuing medical education built a QFD matrix. They identified and analyzed the following segments:
- customer requirements (What?);
- characteristics of the process activities (How?);
- the relationship matrix between the "whats" and the "hows";
- competitive evaluation;
- objectives of the process activities (How much?);
- compliance evaluation of the process characteristics; and
- the technical and relative importance of each process activity.

Results: We present the QFD matrix of the continuing medical education process, built from the student requirements. Analysis of the matrix identifies opportunities for improvement in the following activities of the process: identification of organizational needs, identification of professional expectations (needs), preparation of the continuing medical education plan, design of educational courses, teacher selection, scheduling, and course accreditation.
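The core prioritization step of a QFD matrix can be sketched as follows. This is a minimal illustration of the standard "house of quality" computation, not the authors' matrix. The requirements, activities, importance weights and the 9/3/1 relationship scale are all hypothetical assumptions. Each activity's absolute technical importance is the sum, over requirements, of importance weight × relationship strength. Relative importance rescales these sums to 100%.

```python
# Hypothetical "whats": customer requirements with importance weights (1-5).
REQUIREMENTS = {"relevant topics": 5, "convenient schedule": 3, "accredited courses": 4}
# Hypothetical "hows": continuing-education process activities.
ACTIVITIES = ["needs identification", "course design", "scheduling", "accreditation"]
# Relationship matrix on the common 9/3/1 scale (strong/medium/weak, 0 = none).
RELATIONSHIPS = {
    "relevant topics":     [9, 9, 0, 1],
    "convenient schedule": [1, 0, 9, 0],
    "accredited courses":  [0, 3, 0, 9],
}


def technical_importance(requirements, activities, relationships):
    """Return {activity: (absolute importance, relative importance in %)}.

    Absolute importance of an activity is the sum over all requirements of
    requirement weight x relationship strength; relative importance
    normalizes these sums so they add up to 100%.
    """
    absolute = [
        sum(weight * relationships[req][j] for req, weight in requirements.items())
        for j in range(len(activities))
    ]
    total = sum(absolute)
    return {act: (score, 100.0 * score / total) for act, score in zip(activities, absolute)}


# Rank activities from most to least important to find improvement priorities.
ranked = sorted(
    technical_importance(REQUIREMENTS, ACTIVITIES, RELATIONSHIPS).items(),
    key=lambda item: item[1][0],
    reverse=True,
)
for activity, (absolute, relative) in ranked:
    print(f"{activity:22s} {absolute:4d} {relative:5.1f}%")
```

With these made-up numbers, "course design" ranks first (5×9 + 4×3 = 57). In a real matrix the ranking reflects the working group's weights and relationship judgments.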

Outcome of quality assessment of a cardiology residency as a result of joint brainwork of graduates and their present medical chiefs

Keywords: quality, program evaluation, postgraduate

Authors: Alves de Lima, A., Terecelan, A., Nau, G., Botto, F., Trivi, M., Thierer, J., Belardi, J.

Institution: Instituto Cardiovascular de Buenos Aires

Summary: The experience residents acquire during residency programs (RP) does not always ensure success in professional practice.

Objectives:

a. to find out how graduates (G) perceive the degree of training they acquired during the residency period

b. to correlate the opinions of graduates and their present medical chiefs (PMC)

c. to determine whether graduates and PMC perceive a re-adaptation period (RAP) after the end of the RP.

Method: The study was carried out at a university hospital in Buenos Aires in 2003. All G who graduated between 1998 and 2001 were included. Each G identified their PMC, defined as the doctor responsible for the G for >60% of his or her weekly working hours during the study period. Data were obtained through an 8-question survey. Qualitative and quantitative analyses were carried out, and the Wilcoxon test was used for the quantitative analysis.

Results: 15 G (100%) and 13 PMC were included. G showed great satisfaction with the training received during the RP, especially in in-patient care areas. PMC judged the G as highly competent, particularly in in-patient care, counseling skills and overall clinical competence. Regarding the RAP, 13 G and 8 PMC considered that it exists. They estimated its length at 385 (±6) vs. 344 (±5) days (p=NS).

Conclusion: Graduates expressed high satisfaction with their preparation, and medical chiefs with the graduates' performance. Most participants considered that there is a re-adaptation period after residency. These data provide evidence of the effectiveness of a program aimed at preparing doctors for medical practice.

Facilitating PPD using a learning portfolio: experience in a new UK medical school

Keywords: professionalism, reflection, portfolio, undergraduate medical education, assessment

Authors: Roberts, JH.

Institution: Phase 1 Medicine, University of Durham, Stockton campus, University boulevard, Thornaby, Stockton-on-Tees, TS17 6BH

Summary: Purpose: To describe the process of using a reflective Learning Portfolio to assess second year medical students' personal and professional development (PPD) in a new UK medical school.

Methods: PPD at Durham covers ethics, communication skills, evidence-based medicine, self-care and clinical contexts of care. As part of their formative assessment, students kept a learning portfolio for eighteen months, exploring their development in these areas. Five prompts supported the portfolio: initial motivation for medicine, early impressions of medicine, learning needs and achievements, 'critical incidents', and links between PPD and the wider curriculum. A PPD tutor assessed each portfolio and allocated a provisional mark according to the evidence of reflection throughout it. This mark was confirmed after a 30-minute interview between the assessing tutor and the student. The interview gave tutors an opportunity to provide substantial, individual feedback. Tutors received training to guide them in marking and in conducting the interview.

Results: Tutor and student inexperience combined to produce anxiety about the assignment and some reluctance to seek help. Students largely used the portfolio as a cathartic exercise, which raised issues of confidentiality.

Conclusion: There is a fine balance between encouraging students to determine the content of their own portfolio and the need for clear assessment criteria. Tutors' enthusiasm and preparation for the activity are also pivotal to its success. In response to student and tutor feedback, we have shortened the assignment and provided more support for tutors and students.

The evaluation of a medical curriculum: using the methods of programme evaluation to align the planned with the practised curriculum.

Keywords: curriculum evaluation, quality assurance, medical curriculum, programme evaluation

Authors: Wasserman, E.

Institution: University of Stellenbosch, Republic of South Africa

Summary: Background: The current focus on the quality assurance of higher education in general and medical education in particular creates a need for a practical but methodologically sound approach to curriculum evaluation. This presentation describes an approach to curriculum evaluation in medical education based on programme evaluation methods used in the social sciences.

Aims & objectives: The aim of the presentation is to explain how the evaluation of a curriculum can be undertaken on the basis of the methodology of social scientific programme evaluation. The curriculum of the medical programme offered at the Faculty of Health Science of the University of Stellenbosch since 1999 is used as a case study to illustrate this approach.

Methods: Clarificatory evaluation is used to assess the planning of a curriculum (the planned curriculum). A Logic Model is constructed as a product of this clarificatory evaluation.

Results: Aspects of the Logic Model will be presented to illustrate this approach. The model is the product of the clarificatory evaluation of the medical programme offered at the Faculty of Health Science of the University of Stellenbosch.

Discussion and conclusions: Curriculum evaluation is an important component of the process of quality assurance. Aligning the planned and the practised curriculum is one approach to the quality assurance of a curriculum, and it can be applied to any of the four types of academic review described by Trow. The approach described here is consistent with the definition of quality as fitness for purpose.

Assessment of educational program quality in Tehran University of medical sciences and health services, according to the referendum from the graduates

Keywords: assessment, education, graduate

Authors: Farzianpour, F.

Institution: School of Public Health, Tehran University of Medical Sciences, and Educational Development Center

Summary: Introduction: Educational program quality assessment at the university level aims to determine:

1- The degree and extent to which the university fulfills its foreseen objectives; and

2- The strengths and weaknesses of these assigned objectives.

Educational program quality assessment is one of the most significant duties of universities of medical sciences. Medical graduates need occupational capacity, ability and efficiency to offer the best health and treatment services and to provide individual and social health. These qualities depend mostly on the fulfillment of the above-mentioned objectives. Poorly designed or poorly implemented educational programs impose harmful cultural, social and economic effects on people and on the graduates. They also damage the university's credibility and management. The general objective of this study is to improve educational program quality and to promote education at the university level.

The specific objectives were to determine:

1- The total average scores of the graduates.

2- The distribution of age and gender.

3- Satisfaction.

4- Strengths and weaknesses.

5- Finally, the educational problems of the graduates.

The survey was analytic-descriptive, and the study population comprised about 178 graduates of the medical faculty. All data were analyzed with SPSS (versions 9 and 10). Of the graduates, 61.2% are male and 38.8% female. The graduates' average score is 15.75, with a standard deviation of 1.23 and a range of 12.66 to 18.55. About 78.7% of the graduates expressed satisfaction with their faculty. The most important strength identified by the respondents was the training period (52.2%). The most important weakness was the physio-pathological period (78.7%). The authors conclude that the graduates' average satisfaction at the university level is 66.11. They also conclude that there has been a significant improvement in educational program quality at the medical university.

The relationship between group productivity, tutor performance and effectiveness of PBL

Keywords: Problem-based learning, tutoring

Authors: Dolmans, D., Riksen, D. & Wolfhagen, I.

Institution: University of Maastricht, Dept. of Educational Development & Research, PO Box 616, 6200 MD Maastricht

Summary: Tutor performance and tutorial group productivity correlate highly with each other. Nevertheless, for some tutorial groups there is a discrepancy between the two variables. The study tested two hypotheses: that a high-performing tutor can compensate for a low-productivity group, and that a highly productive tutorial group can compensate for a relatively low-performing tutor. Students rated tutor performance, tutorial group productivity and the instructiveness of the PBL unit (1-10). In total, 287 tutors were involved. They were categorized as having a relatively low, average or high score on tutor performance, and the same was done for the group productivity score. For each combination, the average instructiveness score was computed. The average instructiveness score was higher when the productivity score was higher, and also higher when the tutor score was higher. However, the average instructiveness score did not differ significantly across levels of tutor performance, whereas it did differ significantly across levels of group productivity. The authors conclude that a highly productive group can to a considerable extent compensate for a low-performing tutor. A high-performing tutor, by contrast, can only partly compensate for a relatively low-productivity tutorial group. These findings are in line with earlier studies showing that tutorial group productivity and tutor functioning interact in a complex manner. The implication is that faculty should put more effort into improving group productivity, e.g. by evaluating tutorial group functioning on a regular basis.
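The categorize-and-average analysis described above can be sketched as follows. The abstract does not say how the low/average/high categories were formed. This sketch assumes a tertile split of each score using `statistics.quantiles`. The function names and the toy ratings are hypothetical, not the study's data. The code returns the mean instructiveness rating for every tutor-level × group-level cell that occurs in the data.

```python
from collections import defaultdict
from statistics import mean, quantiles


def level(value: float, cuts: list[float]) -> str:
    """Map a score to 'low', 'average' or 'high' using two cut points."""
    low_cut, high_cut = cuts
    if value < low_cut:
        return "low"
    if value > high_cut:
        return "high"
    return "average"


def instructiveness_by_cell(records):
    """records: (tutor score, group productivity, instructiveness), one per group.

    Tutor and group scores are each split at their own tertiles (an assumed
    cut-off rule), and the mean instructiveness is returned for every
    (tutor level, group level) combination observed.
    """
    tutor_cuts = quantiles([r[0] for r in records], n=3)
    group_cuts = quantiles([r[1] for r in records], n=3)
    cells = defaultdict(list)
    for tutor, group, instructiveness in records:
        cells[(level(tutor, tutor_cuts), level(group, group_cuts))].append(instructiveness)
    return {cell: mean(values) for cell, values in cells.items()}


# Hypothetical ratings on a 1-10 scale, one tuple per tutorial group.
toy = [
    (6.1, 7.8, 7.2), (7.9, 6.0, 6.4), (8.4, 8.6, 8.1),
    (6.5, 6.2, 5.9), (7.2, 7.5, 7.0), (8.8, 7.1, 7.3),
    (6.9, 8.9, 7.9), (7.6, 6.8, 6.6), (8.1, 8.2, 7.8),
]
for cell, avg in sorted(instructiveness_by_cell(toy).items()):
    print(cell, round(avg, 2))
```

Comparing the cell means along each axis shows which factor moves instructiveness more. A test of whether those differences are significant, such as an ANOVA, would be a separate step.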

Improving Clinical Competence in Health Issues in a Third Year Pediatric Clerkship

Keywords: Health Issues; Pediatric Clerkship; OSCE

Authors: Bonet, N.; Márquez, M.

Institution: University of Puerto Rico, School of Medicine

Summary: Background: The Objective Structured Clinical Examination (OSCE) is used in medical schools to evaluate clinical skills. At the Department of Pediatrics, School of Medicine of the University of Puerto Rico, students' development of clinical competence in health issues has been a major concern. These issues include growth and development, health maintenance, disease prevention and patient education. The concern is supported by students' performance on USMLE Steps 1 and 2 and the NBME pediatric subject test.

Methods: To improve students' clinical skills, the faculty implemented the following interventions:

Students must follow the Guidelines for Health Supervision III (American Academy of Pediatrics) for interventions in the ambulatory setting and in assigned case presentations.

Students are required to present a lecture on the topic of growth and development.

An immunization lecture was added to didactic activities.

Faculty were asked to strengthen their teaching of health promotion, health maintenance and disease prevention during clerkship rotations.

To assess students' skills after these interventions, in 2002 the faculty restructured the pediatric OSCE to include one station devoted exclusively to health maintenance and disease prevention.

Results: Outcome data for 2002 and 2003 indicate improved student performance on USMLE Step 2 in preventive medicine and health maintenance. OSCE mean scores show superior student performance on the health maintenance station (overall mean 85.68%).

Conclusions: There is evidence that selected interventions and OSCE stations to teach and evaluate clinical skills are effective.

The Effect of Educational Stressors on the General Health of the Medical Residents

Keywords: educational stressor, general health, medical resident

Authors: Khajehmougahi, N.

Institution: Ahwaz University of Medical Sciences

Summary: Introduction: In today's age of information and technology, troublesome regulations and traditional procedures of medical instruction may cause serious stress. They may also threaten the General Health (GH) of medical students.

Aim: To determine the effect of current procedures of medical instruction on the general health of residents studying at Ahwaz University of Medical Sciences.

Method: The study was cross-sectional. Subjects were 114 residents from various specialties who agreed to participate. The instruments were the Educational Stressors Questionnaire, comprising 45 four-choice items, and the General Health Questionnaire. After the questionnaires were completed, the results were analyzed with Pearson correlation coefficients using SPSS.

Results: The residents reported the following educational stressors: lack of an organized curriculum, troublesome educational regulations, deficient educational equipment and inadequate clinical instruction. 37.6% of the subjects appeared to have GH problems. A significant positive correlation (p<0.01) was observed between educational stressors and each of the following: GH, somatic problems, anxiety and social dysfunction.

Conclusion: Educational stressors appear to be a risk factor for students' GH. This may lead to reduced interest, academic decline and failure to master diagnostic and treatment procedures. The findings suggest that basic changes are needed in current methods of medical instruction.

Temperament, character, and academic achievement in medical students

Keywords: Temperament, Character, Academic Achievement

Authors: LEE, YM; HAM, BJ; LEE, KA; AHN, DS; KIM, MK; CHOI, IK; LEE, MS

Institution: College of Medicine, Korea University; College of Medicine, Hallym University

Summary: Objective: This study investigates the relationships between TCI dimensions and the academic achievements of medical students.

Method: Our sample consisted of 119 first-year medical students at Korea University Medical School during the 2003–04 academic year. The Temperament and Character Inventory (TCI) was administered to all participants during one class in the third quarter of the first academic year of medical studies. First-year grade-point averages (GPAs) were also obtained. We examined the relationships between individual TCI dimensions and GPA using correlation coefficients.

Results: Our results suggest that the NS (novelty seeking), P (persistence) and SD (self-directedness) dimensions are associated with academic achievement in medical students. Medical students scoring high on NS and low on P and SD were significantly less likely to pass examinations.

Conclusion: Dimensions of the personality play a major role in the academic achievements of medical students. Personality assessment may be a useful tool in counseling and guiding medical students.

High-stakes undergraduate OSCEs: what do you do for students who require supplementary examinations?

Keywords: OSCE, supplementary examination, practicality

Authors: Worley, P. and Prideaux, D.

Institution: Flinders University

Summary: Increasingly, medical schools are using large-scale OSCEs to examine students at key progression points in their undergraduate courses. The reliability and validity of this method of testing are extremely important. Society demands high quality standards, and students may involve lawyers to overcome perceived unfairness in assessments. Large-scale OSCEs require a large commitment from a wide range of clinicians and support staff in both the University and the associated clinical services. This commitment may be given once a year. But what happens when a student is eligible for a medical/compassionate or academic supplementary examination? This matters especially when the examination contributes to a ranking process that determines future career options. And if students who sat a medical supplementary then qualify for an academic supplementary examination, can you mount a third OSCE?

This paper will examine this important assessment challenge from both educational and practical perspectives, based on the experience at the Flinders University School of Medicine. We will present a range of solutions and invite debate on others' experiences in meeting this challenge.

Using portfolios to develop and assess student autonomy and reflective practice

Keywords: portfolio assessment, reflective practice

Authors: Toohey, SM, Hughes CS, Kumar RK, O'Sullivan AJ, McNeil HP

Institution: University of New South Wales

Summary: The Faculty of Medicine at the University of New South Wales implemented a new undergraduate program in 2004, which focuses on achievement of a set of eight graduate capabilities. The program emphasizes producing doctors who have a well-integrated knowledge base, can evaluate their own performance and can set their own learning agendas. Students have substantial freedom to pursue topics that interest them through project or clinical work. The flexibility of the program, and its focus on developing student responsibility and reflective practice, called for a different approach to assessment. The assessment scheme includes written and clinical exams, individual assignments and group projects. As part of it, students present a portfolio of their work at three points in the six-year program. Students must pass each portfolio assessment to progress to the next phase of the program or to graduate. This paper focuses on the distinctive design features of the UNSW portfolio. These include its use as a tool to help students take responsibility for planning and managing their own learning. All aspects of the assessment system are marked against the graduate capabilities, which enables students to present a profile of their performance in each capability area. The portfolio includes:
- selected assignment and project work;
- the full range of teacher grades and comments given for each capability;
- peer feedback on teamwork; and
- the student's own self-assessment and reflection.

Clinical Skills Assessment at Medical Schools in Catalonia (Spain) in the year 2003

Keywords: Assessment, clinical skills, undergraduate, OSCE

Authors: Viñeta M, Kronfly E, Gràcia L, Majó J, Prat J, Castro A, Bosch JA, Urrutia A, Gimeno JL, Blay C, Pujol R, Martínez JM.

Institution: Institut d'Estudi de la Salut

Summary: Since 1994, the Institute of Health Studies and the Catalan medical schools have jointly conducted several projects on clinical skills assessment using OSCEs. In 2003, seven Catalan medical schools used an Objective Structured Clinical Examination (OSCE) to assess the clinical competences of final-year medical students. Two instruments were designed to assess medical competences. The first was a multiple-station examination, with 14 cases distributed over 20 stations. The second was a written test of 150 MCQs, 20 of them with associated pictures. At the end of the exam, candidates completed a questionnaire giving their opinion of it. The OSCE showed high internal consistency, with Cronbach's alpha = 0.86 for the multiple-station examination and 0.83 for the written test. The global mean score was 61.82% (SD 6.7). 422 medical students completed the OSCE. Their mean scores for each specific competence assessed were:
- history taking 64.8% (SD 8.4)
- physical examination 51.4% (SD 11)
- communication skills 61.4% (SD 6.2)
- knowledge 58.1% (SD 10.4)
- diagnosis and problem-solving 59.8% (SD 8.9)
- technical skills 73.9% (SD 12.4)
- community health 64.5% (SD 13)
- colleague relationship 48.6% (SD 9.9)
- research 62% (SD 22.5)
- ethical skills 62.4% (SD 17.1)

Examinees rated the organization, contents and simulations highly (mean score above 8 on a 10-point Likert scale). The OSCE-based methodology has proved to be a feasible, valid, reliable and acceptable tool for evaluating final-year medical students in our context.
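The internal-consistency figures reported above (alpha = 0.86 and 0.83) use Cronbach's formula, α = k/(k−1) × (1 − Σ item variances / variance of total scores), where k is the number of items or stations. The minimal sketch below uses a small hypothetical score matrix, not the study's data. The function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a candidates x items (e.g. stations) score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances are used throughout; the n - 1 factor cancels in the ratio.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores: 4 candidates x 3 stations.
toy = [[6, 7, 5], [8, 8, 7], [4, 5, 5], [9, 9, 8]]
print(round(cronbach_alpha(toy), 2))
```

Alpha rises when candidates who do well on one station also tend to do well on the others. In that case the total-score variance is large relative to the summed station variances.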

Is it possible to conduct high-stake oral examinations in a reliable and valid way for small numbers of candidates with limited resources?

Keywords: Oral examination, structured oral examination, high-stake examination, limited resources, reliability, validity, MCQ, feasibility

Authors: Westkämper R¹, Hofer R¹, Weber M², Aeschlimann A³, Beyeler C⁴

Institution: ¹Department of Medical Education, University of Bern; ²Stadtspital Triemli, Zürich; ³RehaClinic, Zurzach; ⁴Department of Rheumatology and Clinical Immunology/Allergology, University of Bern, Switzerland

Summary: Background: Medical societies face the challenge of ensuring high quality certifying examinations with optimal utility (reliability, validity, educational impact, acceptability, costs).

Aims: To assess reliability and to consider aspects of validity of a structured oral examination (SOE) in a small medical society.

Methods: Thirteen candidates took the certifying examination, which was based on a blueprint of the Swiss postgraduate training program in rheumatology. A multiple-choice question (MCQ) test was followed by an SOE in which three teams of two examiners each tested three cases over two hours, against previously agreed criteria. In addition, communication skills (CS) were assessed on a rating scale (9 items, Likert scale 1 to 4). Data were analysed with SPSS.

Results: On average, each case was solved by 92% of the candidates (range 77-100%). Correlations between the competence demonstrated in one case and the sum of the results in the other 8 cases ranged from −0.14 to 0.97, indicating a wide range of discrimination power. Nevertheless, overall reliability was high (Cronbach's α = 0.88). Significant correlations were found between SOE and CS (r = 0.88, p < 0.001) and between SOE and MCQ (r = 0.58, p = 0.038), but not between CS and MCQ (r = 0.46, p = 0.110).
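The two statistics reported above, the correlation of one case with the sum of the other cases (a corrected item-total, or item-rest, correlation) and Cronbach's alpha, can be illustrated with a short sketch. It assumes a hypothetical score matrix with one row per candidate and one column per case; the function names are illustrative and do not reproduce the authors' SPSS procedure.

```python
from math import nan, sqrt
from statistics import mean, pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a candidates-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals).
    Population variances are used throughout; the ratio is the same if
    sample variances are used consistently instead.
    """
    k = len(scores[0])
    items = list(zip(*scores))                      # one tuple of scores per item
    item_var_sum = sum(pvariance(item) for item in items)
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def pearson(x, y):
    """Pearson correlation; returns nan if either variable is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return nan                                  # e.g. a case solved by every candidate
    return cov / (sx * sy)


def item_rest_correlations(scores):
    """Correlate each item with the sum of all *other* items."""
    result = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        rest = [sum(row) - row[j] for row in scores]
        result.append(pearson(item, rest))
    return result


# Hypothetical data: 4 candidates x 3 cases (1 = solved, 0 = not solved).
demo = [[1, 1, 1], [1, 1, 0], [0, 1, 0], [0, 0, 0]]
print(round(cronbach_alpha(demo), 3))               # 0.75
print([round(r, 3) for r in item_rest_correlations(demo)])
```

Excluding the case itself from the total avoids inflating its correlation, which is why a weakly discriminating case can show a low or even negative value while the overall alpha stays high. A case solved by every candidate has no variance, so its correlation is undefined (returned here as `nan`).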

Conclusions: Our SOE assessed medical competencies that seem more closely related to CS than to the factual knowledge tested by MCQs, and it yielded high reliability. Our design and the examiners' efforts contributed to high validity. Overall, the examination achieved satisfactory quality with acceptable utility.

Using real patients in clinical examinations: A questionnaire study

Keywords: Patients; Paediatric; Clinical Exams

Authors: Williams, S.; Lissauer, T.

Institution: Royal College of Paediatrics and Child Health

Summary: A number of publications detail the experience of examiners and candidates during clinical exams. Little, however, has documented the experience of patients, despite specific concerns about the use of real patients in such exams. The aim of this research was to investigate the experience of parents and children who participate in the MRCPCH Part 2 Clinical and Oral examination, and to open up debate on the ethics of using real patients in clinical exams. Questionnaires were sent to all centres hosting the MRCPCH clinical examinations in June 2003 and February 2004 to capture both quantitative and qualitative data. Overall, the results suggest that the majority of children and parents found taking part in the clinical examination a positive experience. Multiple regression analysis identified administrative variables (such as the length of time involved and the conditions at the centre), rather than consultative variables (such as interactions with candidates and examiners), as the major factors in a negative experience. Although this type of research is relatively new, the results of the present survey suggest that, far from being traumatising or abusive, taking part in the exam was an enjoyable experience for the vast majority of children. They further suggest that careful attention to the timing and structure of the exam could help to eliminate the potential for a negative experience.

Variation on a theme: the use of standardized health professionals (SHP) in an objective structured clinical examination (OSCE) in neonatal-perinatal medicine

Keywords: OSCE, Standardized Health Professional, Neonatal

Authors: Brian Simmons, Ann Jefferies, Deborah Clark, Jodi McIlroy, Diana Tabak and Program Directors of the Neonatal-Perinatal Medicine Programs of Canada (2002-03)

Institution: Departments of Paediatrics, University of Toronto, Toronto, and University of Calgary, Calgary; Wilson Centre for Research in Education, University of Toronto, Toronto, ON, Canada.

Summary: Background: Standardized patients (SPs) are traditionally used in the OSCE to portray patients or parents. We developed an OSCE for subspecialty trainees in Neonatal-Perinatal Medicine that included SHP roles.

Objective: To compare the reliability of SHP and SP stations.

Design/methods: Two OSCEs, conducted in 2002 and 2003, consisted of 14 SP stations, 8 SHP stations and 1 post-encounter probe. SHPs included respiratory therapists, nurses, physicians and a medical student. Examiners completed station-specific checklists, global ratings to assess the CanMEDS roles (medical expert, communicator, collaborator, manager, professional, scholar, health advocate) and an overall global rating. SPs and SHPs completed communication global ratings. Alpha coefficients projected to a ten-station OSCE were calculated using the Spearman-Brown prophecy formula.
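The Spearman-Brown prophecy formula projects the reliability ρ of a test to a test n times as long: ρ* = nρ / (1 + (n − 1)ρ), where n is the ratio of target length to current length (for example 10/14 when projecting the 14 SP stations to ten stations, or 10/8 for the 8 SHP stations). The sketch below assumes the stations are parallel (interchangeable), the formula's standard assumption; the function name and the alpha value in the usage line are hypothetical, not results from this study.

```python
def spearman_brown(reliability, current_length, target_length):
    """Project reliability from current_length stations to target_length stations.

    Assumes added or removed stations are parallel to the existing ones.
    """
    if not 0 < reliability < 1:
        raise ValueError("reliability must lie strictly between 0 and 1")
    n = target_length / current_length      # lengthening (n > 1) or shortening (n < 1) factor
    return n * reliability / (1 + (n - 1) * reliability)


# Hypothetical example: an 8-station component with alpha = 0.60,
# projected to the common ten-station reference length.
print(round(spearman_brown(0.60, 8, 10), 3))   # 0.652
```

Projecting every station set to the same nominal length is what makes alphas from components of different sizes (14 SP versus 8 SHP stations) comparable.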

Results: 54 trainees participated. As shown in the table, alpha coefficients were greater than 0.70. There were no significant differences in reliability between SP and SHP stations (p > 0.05). Reliability was consistently higher with global rating scores.

Conclusions: SHPs may be used in OSCE stations that require medical knowledge and expertise, and could be used in high-stakes exams. A formal training program should be considered.

General physicians' views about communication skills and patient education in Shiraz, Iran

Keywords: communication skills, patient education

Authors: Najafipour, F.; Najafipour, A.; Nasimi, B.

Institution: Valfajr Health Center

Summary: Nowadays, physicians' clinical competence is usually judged on the basis of their communication with patients.

Effective communication between physician and patient is one of the most important steps in improving the level of health and prevention in society. Physicians' use of effective communication skills leads to greater patient involvement in the treatment process. This study was carried out to assess general physicians' views on communication skills and on the role of patient education in the treatment process.

Materials and methods: This was a descriptive, cross-sectional study. Data were gathered using a validated questionnaire containing closed questions on physicians' communication skills and educational behavior towards patients. The questionnaire was distributed among 100 general physicians who participated in a continuing medical education (CME) program.

Results: 85% of the general physicians stated that effective communication is very important in the treatment process, and 90% stated that educating patients leads to better cooperation between physician and patient in following up the treatment plan. Only 40% of the general physicians stated that they spent adequate time on patient education. Detailed results will be presented at the conference.

The Impact of the Eighty-Hour Work Week on the House Staff at a Large University-Affiliated Community-Based Teaching Hospital

Keywords: Resident Working Conditions

Authors: Best, K., Weiss, P., Koller, C., Hess, L.W.

Institution: Lehigh Valley Hospital, Department of Obstetrics and Gynecology

Summary: Objective: To determine how the recently mandated eighty-hour work week restriction affects the psychosocial well-being and clinical experience of ob/gyn, surgical, and internal medicine residents at Pennsylvania's largest community-based teaching hospital.

Methods: A questionnaire of ten items, each scored on a five-point Likert scale, was distributed to upper-year residents in the departments of ob/gyn, surgery, and internal medicine. It addressed residents' perceptions of the psychosocial and clinical impact of the mandated eighty-hour work week, as well as their program's level of compliance. To determine the impact on clinical experience, resident participation in sentinel cases and/or procedures before and after the mandated hours was evaluated.

Results: Final results are pending; however, preliminary data suggest that the ACGME work restrictions have had a positive impact on resident stress/fatigue and home life without compromising the quality of either patient care or patient safety. A small but statistically non-significant impact on surgical and/or procedural experience was noted.

Conclusions: The transition to the eighty-hour work week prompted numerous concerns from house staff and faculty. Thus far, our data suggest that there is no negative impact on the quality of patient care. The data also show a commitment to compliance with the mandated work restrictions despite these concerns.


Keywords: Teaching Scholarship, Faculty Recognition

Authors: Wolpaw, D., Wolpaw, T.

Institution: Case School of Medicine (Case Western Reserve University School of Medicine)

Summary: Traditional approaches to recognizing contributions to medical education depend largely on learners, are subject to popularity and exposure bias, and reach only a small percentage of our teachers. With the goal of a process that would be inclusive, broadly applicable, transparent, and academically rigorous, we set out to address the challenge of faculty recognition for education in three steps: 1) track faculty effort in medical education through an electronic summary; 2) ask faculty to describe a recent educational effort in a 1-2 page "Best Contribution" narrative; 3) subject these narratives to an academically rigorous peer review process that serves as the basis for recognition awards. The program is designed to evaluate scholarship and quality in the various products of educational effort, rather than to take on the complex and ultimately subjective challenge of fairly evaluating the quality of the teachers themselves. The impact of this program is expected to be seen in four ways: 1) enhancing the profile of education and educators; 2) opening up the classroom for better communication about new and/or successful ideas; 3) creating a straightforward template for teaching recognition that can easily be translated across institutions; and 4) establishing a broad-based peer review network for educational ideas and products. Program evaluation includes tracking submissions, peer-review scores, and subsequent publications, as well as surveying the attitudes of applicants, non-applicants, and members of the promotions and tenure committee to assess impact and changes in institutional culture.

Starting Work - Ready or not? Views of commencing medical interns on the skills developed during their undergraduate program

Keywords: curriculum evaluation, undergraduate medicine, graduate skills

Authors: Lindley, J.; Liddell, M.

Institution: Monash University

Summary: Decisions about the quality of medical education rely, in part, on the performance of new graduates in their roles as beginning doctors. The success of a course in preparing medical graduates depends on their being equipped with the necessary knowledge, skills, attitudes and professional behaviours. Because the practice of medicine requires the application of knowledge and skills in a clinical setting embedded within a social context, graduates must be capable managers of health care across a complex range of situations. To evaluate graduate outcomes, the Faculty of Medicine at Monash collected data from two consecutive cohorts of graduates during their first year as medical practitioners in the hospital system. The second cohort had undertaken a final-year program that was significantly revised from the one undertaken by the first cohort. The project gathered graduates' views on how well their undergraduate course had prepared them for the demands of the medical workplace. Responses were sought on a range of vocational skills comprising clinical tasks, procedural techniques and professional relationships. Analysis of the survey data revealed some differences between the cohorts for clinical tasks, practical skills and professional relationships, with graduates from the second cohort perceiving themselves to be slightly better prepared than their counterparts in the previous cohort. The analysis also identified specific areas for curriculum review.

Influence of the APLS and PALS courses on self-efficacy in paediatric resuscitation

Keywords: APLS, PALS, self-efficacy, paediatric, resuscitation

Authors: Turner, N.M. (Paediatric Anaesthesiologist); Dierselhuis, M.P. (final-year medical student); Draaisma, J.Th.M. (Paediatrician); ten Cate, Th.J. (Professor in Medical Education)

Institution: Wilhelmina Children's Hospital and Faculty of Medicine, University Medical Centre, Utrecht, and St Radboud Medical Centre, Nijmegen, The Netherlands

Summary: Introduction: Most life support courses recognise that performance during resuscitation depends partly on attitudinal factors¹. The current study was designed to assess the effect of attending either the Advanced Paediatric Life Support (APLS) or the Pediatric Advanced Life Support (PALS) course on learners' self-efficacy in six psychomotor skills. Global self-efficacy in paediatric resuscitation was also measured.

Methods: All candidates attending the courses were sent an anonymous questionnaire before the course and three and six months later. They were asked: 1) to rate their self-confidence in respect of the six skills and globally using a 100 mm visual analogue scale; 2) to estimate the frequency of performance of the skills; 3) to nominate two direct colleagues with a similar level of experience who did not intend to follow either of the courses.

Results: Preliminary results suggest that attending the courses does lead to increased self-efficacy, both globally and in respect of defibrillation, insertion of an intraosseous device and umbilical vein catheterisation. Before the course, candidates appear to have less self-confidence about intubation and defibrillation than colleagues who choose not to follow the course. See graph.

Discussion: Although this study uses a new method of measuring self-efficacy, and although the relationship between self-efficacy and performance is variable², we cautiously conclude that the APLS and PALS courses seem to have a positive affective effect on candidates, which might be associated with improved performance in paediatric resuscitation.

References:

1. Carley S, Driscoll P. Trauma education. Resuscitation. 2001;48:47-56.
2. Morgan PJ, Cleave-Hogg D. Comparison between medical students' experience, confidence and competence. Medical Education. 2002;36:534-539.

Towards the promotion of quality in Medical Education at the Faculty of Medicine of the University of Porto (FMUP): Connecting the Evaluation Process with the Proposal of an Innovative Curriculum of the FMUP

Keywords: Evaluation, Curriculum

Authors: Tavares, M.A.F., Bastos, A., Sousa-Pinto, A.

Institution: Faculty of Medicine, University of Porto, and School of Higher Education, Polytechnic Institute of Viana do Castelo

Summary: Since 1998, the medical course of the FMUP has been evaluated under several institutional initiatives, all within the scope of quality programs in higher education. As part of these programs, CNAVES (National Council for Higher Education Evaluation) provided the guidelines for a new evaluation process of the medical course during the 2002-2003 academic year. The response to this request triggered an institution-wide process at FMUP, conducted as a developmental evaluation. The results obtained for resources, administration, education and research made it possible to draw up a developmental strategic view of FMUP. Evaluation of the curriculum identified a set of strengths and weaknesses that reinforced the urgent need to reform curriculum content and the integration of subjects: merging the basic sciences with a clinical view from the beginning of the medical course, enhancing the clinical component and introducing optional modules. As part of the development of a quality program, the FMUP Curriculum Committee began designing the new curriculum in the same academic year. The basic structure of the emerging proposal is a core curriculum with optional study modules, providing vertical integration within a system-based organization and horizontal integration within a theme/subject organization. This model will overcome the weaknesses identified in the different evaluation processes of the course, while supporting and enhancing the strengths of the institution. The present work will describe the developmental evaluation process established at FMUP and the central guidelines that will form the foundation of the new curriculum (supported by FMUP).

Students' perceptions of learner-centered, small-group seminars on medical interviewing

Keywords: learner-centered method, medical interview, undergraduate education, video-tape review

Authors: Saiki, T.; Mukohara, K.; Abe, K.; Ban, N.

Institution: Nagoya University Hospital

Summary: Background: Experts in medical education recommend learner-centered instructional methods. We utilized such an experiential, interactive method for a two-day, small group seminar on medical interview and communication skills for students at the Nagoya University School of Medicine. It was part of a 1-week clerkship rotation at the Department of General Medicine.

Purpose: To describe the perceptions of medical students of the learner-centered, interactive, small group seminar for medical interview and communication skills.

Methods: A 10-item questionnaire was administered to a total of 101 students who participated in the seminar during the academic year from April 2003 to March 2004. The questionnaire items related to the process of a learner-centered educational method and included a global assessment of satisfaction with the seminar. Each item was rated on a 4-point scale labeled unsatisfied, somewhat unsatisfied, somewhat satisfied, and satisfied. The proportion of students who were satisfied was calculated for each item.

Results: Seventy-six percent of students were satisfied with the seminar overall. Among the other 9 items, engaging all students in discussion was rated highest (80% satisfied). The items concerning structuring the seminar in a logical sequence and managing time well were rated lowest (39% and 42% satisfied, respectively).

Conclusion: The learner-centered seminar on medical interviewing was well received by students, especially for its interactive methods. Items that reflect more teacher-centeredness, such as structuring the seminar in a logical sequence and managing time well, received lower satisfaction ratings.

Formal education in the early years of postgraduate training: has the pendulum swung too far?

Keywords: formal, informal, experiential, work-based, supervision

Authors: Agius, S J.; Willis, S; Mcardle, P; O'Neill, P

Institution: University of Manchester

Summary:

Background: The relationship between hospital consultants and doctors in training is set to experience yet further transformation with a Government-instigated modernisation process in postgraduate medical education.

Method: The University of Manchester has conducted a qualitative study of the culture of medical education in the SHO grade, based on interviews with 60 clinicians and educational leaders. These were recorded, transcribed and subjected to content analysis. For this study, the data were coded to determine perceptions of formal and informal education.

Results: Within hospital-based communities of practice in medical education, the centrality of the relationship between consultant and doctor in training remains undiminished. The educational experience of a doctor in training depends largely upon the consultant(s) to which (s)he is assigned. There is a common perception that too much emphasis is being placed on formal education, to the detriment of work-based experiential learning.

Discussion: There is a perception that the early years of postgraduate medical training have altered as a result of external variables (reduced hours, shift systems). There is a consequent sense of loss at the reduction in contact between trainer and trainee, compounded by a belief that education is increasingly dislocated from the work-place through the use of formal classroom-based techniques. If the Government's new model of training is to work, then education should be located firmly in the work-place, within a formalised structure that makes learning explicit and foregrounds the importance of supervision and feedback. This will assist in retaining consultant commitment to the educative role, reducing the sense of conflict between service and training, whilst providing an effective means for the doctor in training to harness the requisite knowledge, skills and attitudes as an itinerant learner within a coherent structure.

Which factors are associated with the evaluation of a post-graduate course in public health?

Keywords: evaluation, public health, post-graduate course

Authors: Revuelta Muñoz, E.; Farreny Blasi, M; Godoy Garcia, P

Institution: Institut Català de la Salut

Summary: Introduction: Evaluating postgraduate courses is essential for improving their quality and adapting them to the needs of students. The objective of this study was to analyse whether student characteristics influence students' evaluation of postgraduate courses.

Methods: The study population was 70 students of the "Diplomado en Sanidad", a postgraduate course in Public Health held in Lleida (Spain) from 2001 to 2003. The course was organised in 8 modules: "Introduction to Public Health", "Statistics", "Transmitted Diseases", "Protocols in Chronic Diseases", "Health Protection" (HP), "Epidemiology", "EpiInfo" and "Research Methodology" (RM). The first 4 modules were theoretical and the other 4 had a practical approach. The independent study variables were student profession, gender and age. The dependent variable was the global evaluation of each module, obtained from a self-administered questionnaire with the question "Does this course generally meet your needs?", scored from 1 ("total disagreement") to 5 ("total agreement"). Each variable was characterized by its mean and standard error. The relationship between the dependent and independent variables was studied using ANOVA, with significance set at p < 0.05.
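The analysis described above (ratings summarised as mean and standard error, groups compared by one-way ANOVA) can be sketched with the Python standard library. The group labels and ratings below are hypothetical, and the abstract does not say which software was used. The sketch computes only the F statistic and its degrees of freedom; obtaining the p-value requires the F distribution, which the standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def mean_and_se(ratings):
    """Mean and standard error of the mean (sample SD / sqrt(n))."""
    return mean(ratings), stdev(ratings) / sqrt(len(ratings))


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(values)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of ratings around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 1-5 ratings of one module, grouped by (unnamed) student profession.
by_profession = {
    "profession_a": [4, 4, 5, 3, 4],
    "profession_b": [3, 4, 4, 3, 5],
    "profession_c": [4, 3, 3, 4, 4],
}
for name, ratings in by_profession.items():
    m, se = mean_and_se(ratings)
    print(f"{name}: mean {m:.2f}, SE {se:.2f}")
print(one_way_anova_f(list(by_profession.values())))
```

With SciPy installed, `scipy.stats.f_oneway(*groups)` returns the same F statistic together with its p-value, which would then be compared against the 0.05 threshold.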

Results: Students' ratings of the modules ranged from 3.2 for Statistics to 4.2 for RM, with significant differences (p < 0.001). Epidemiology, EpiInfo and HP were also rated significantly well. We did not detect any significant differences by age or gender.

Conclusions: Modules with a more practical approach receive the best evaluations and the greatest acceptance, regardless of student profile. We should therefore adopt a more practical approach in our lectures.

Managing change in postgraduate medical education: what the consultant saw

Keywords: organisational change, educators' role

Authors: Agius, S J.; Willis, S.; Mcardle, P.; O'Neill, P A.

Institution: University of Manchester

Summary:

Background: The structure and content of postgraduate medical training in the UK are undergoing a major modernisation process. This will have a significant impact on the role of hospital consultants with educative responsibilities.

Methods: The University of Manchester has conducted a qualitative study of the culture of medical education in the SHO grade. The study includes an exploration of hospital consultants' perceptions of the modernisation process, and its impact on their role. Interviews were conducted with 28 consultants with varying education-related duties. These were recorded, transcribed and subjected to content analysis.

Results: There is widespread uncertainty about the nature of the changes to postgraduate medical education, particularly among front-line clinical educators with no additional education-management role. Even those with such roles (e.g. Medical Directors, Clinical and College Tutors) display considerable anxiety and confusion about the modernisation process. There is a strong sense that educational supervisors should have dedicated time to plan and deliver training, supported by appropriate and sustained training for their educational role.

Discussion: Hospital consultants are concerned about the impact of modernisation in postgraduate medical education on their own role. This is understandable given the many pressures on their time, although much of their uncertainty results from limited awareness of the changes combined with communication deficiencies from Government downwards. Development of the regional and local infrastructure that supports medical education is required. The majority of consultants are committed to the education of doctors in training, but greater recognition and support of their role is necessary if goodwill is to be maintained.

Does portfolio contribute to the development of reflective skills?

Keywords: portfolio, assessment, self-evaluation

Authors: Driessen, E.

Institution: Maastricht University

Summary: Questions about the utility of a portfolio as a method for developing and assessing reflective skills are frequently raised in the literature, yet few studies report answers to these questions. The purpose of this presentation is to give more insight into the practical use of a reflective portfolio in undergraduate medical education. In our research, we were specifically interested in the conditions that promote the development of reflective skills. We interviewed teachers about their experiences with coaching and assessing students in keeping a portfolio, focusing on the teachers' perceptions of portfolios and reflection. We used grounded theory methodology to explore teacher perceptions in an open and broad way. All mentors in our study agreed that the process of compiling and discussing a portfolio contributes to the development of reflective ability. The thinking activities a student undertakes while compiling a portfolio are essential to this effect. Factors that are decisive for the successful use of a portfolio are mentoring, portfolio structure, the nature of student experiences, assessment, and the benefit perceived by the student.

Standardized patients in a Catalan medical school: a way to learn competencies

Keywords: Standardized patient, undergraduate, competencies

Authors: Descarrega-Queralt, Ramon; Vidal, Francesc; Castro, Antoni; Solà, Rosa; Olivares, Marta; Oliva, Xavier; Ubía, Sandra; Nogués, Susana; Escoda, Rosa; González-Ramírez, Juan

Institution: Facultat de Medicina i Ciències de la Salut - Reus. Universitat Rovira i Virgili de Tarragona

Summary: In 2001, the Faculty of Medicine of Universitat Rovira i Virgili started a project on learning competencies. The participants were students in the final years of the medical degree, and cases with standardized patients were the formative instrument. The competence components analysed were history taking, physical examination and communication skills. An opinion questionnaire was completed by 50 participants; it evaluated 18 different areas, using a Likert scale, relating to logistics, organization, content and learning impact. The results showed that the project is feasible and well accepted, and that it is a good method for improving the learning process of medical students.

A survey of cheating on tests among Catholic University of Chile medical students

Keywords: cheating

Authors: Wright, A.; Trivino, X., MD; Sirhan, X., MD; Moreno, R., MD

Institution: Pontificia Universidad Católica de Chile, Escuela de Medicina

Summary: Cheating is unethical behavior. In medical schools it is a recurrent problem, with a reported frequency close to 60 percent. To investigate cheating on tests, an anonymous questionnaire was distributed among 97 fourth-year medical students. Students were asked whether they had seen other students cheat and about their attitudes to cheating on ethical, behavioral and legal grounds. They were also questioned on the reasons for, consequences of, and deterrents to cheating. Of the students, 86% reported that they had seen other students cheating. Ninety percent considered cheating unethical, 77% reprehensible, and 43% unlawful. The main reasons for cheating were to obtain better grades (21%), insecurity about the correct answer (16%), and lack of study (13%). Eighty-six percent reported negative consequences related to cheating, 91% considered it detrimental to the student who cheats, and 63% felt cheating to be harmful to peers. Slightly more than half of the students stated that cheating is not related to inappropriate behavior in patient care. The expected increase in grades was mentioned as a positive consequence (60%), especially when applying for residency. The main deterrents proposed were improved test quality (35%), more effective monitoring (28%), and application of institutional regulations. Interestingly, a high percentage of students agreed in their responses and attitudes to cheating. It is remarkable that students perceive test cheating as unethical and as having negative consequences. This provides a basis for developing a nurturing culture in medicine that enhances honesty, integrity and professionalism.

Practising Doctors Can Accept Review

Keywords: peer review, acceptance

Authors: Kaigas, T.

Institution: Cambridge Hospital

Summary: Acceptance of peer review by doctors in a Canadian community hospital was assessed using a post-review survey. In this program, practising doctors were systematically reviewed in the hospital using a multimodal review process. They then filled out a survey regarding their impression and degree of satisfaction with the review. High acceptance was demonstrated with 92% seeing the review as positive overall. Possible reasons for this are discussed and proposals presented to gain acceptance, even with sceptical groups of doctors.

The feasibility, reliability, and construct validity of a program director's (supervisor's) evaluation form for medical school graduates

Keywords: outcomes assessment

Authors: Steven J. Durning, Louis N Pangaro, Linda Lawrence, John McManigle and Donna Waechter

Institution: Uniformed Services University, Bethesda, Maryland 20814, USA

Summary: Purpose: We determined the feasibility, reliability and construct validity of a supervisor's survey for graduates of our institution.

Methods: We prospectively sought feedback from Program Directors on our graduates during their first postgraduate year. Surveys were sent out once yearly, with up to 2 additional mailings. For this study, we reviewed all completed Program Director Evaluation Form surveys from 1993-2002. Interns are rated on a 1-5 scale for each of 18 items. Mean scores per item were calculated. Feasibility was estimated by the survey response rate. Internal consistency was determined by calculating Cronbach's alpha and by exploratory factor analysis with varimax rotation. Assuming that our graduates would show a spectrum of proficiency when compared with graduates from other schools, construct validity was determined by analyzing the range of scores, including the percentage of scores below the acceptable level (2 or 1; see the table below).

Results: 1297 surveys (81% of graduates) were returned. Cronbach's alpha was 0.93. Mean scores ranged from 3.81 to 4.2 across items, with a median score of 4.0 for all questions (standard deviations ranged from 0.76 to 0.84).

| Performance (rating) | % of graduates |
|---|---|
| Outstanding (5) | 31.5% |
| Superior (4) | 36.4% |
| Average (3) | 25.2% |
| Needs Improvement (2) | 3.5% |
| Not Satisfactory (1) | 0.1% |
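The Methods define the below-acceptable share of ratings (a rating of 2 or 1) as part of the construct-validity analysis. That share follows directly from the table above:

```latex
P_{\text{below acceptable}} = P_{\text{Needs Improvement (2)}} + P_{\text{Not Satisfactory (1)}} = 3.5\% + 0.1\% = 3.6\%
```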

Factor analysis found that the survey items collapsed into 2 domains, professionalism and knowledge, accounting for 69% of the variance.

Conclusions: Our survey was feasible and had high internal consistency. Factor analysis revealed two complementary domains (knowledge and professionalism), supporting content validity. Analysis of the range of scores supports the form's construct validity.

The survey of general physicians' views about the quality of compiled and continuing education programs

Keywords: Quality, Continuing Education, GP

Authors: Marashi, T.; Shakoorniya, A.; Heidari Soorshjani, S.

Institution: Faculty of Health, Ahvaz Medical Sciences University.

Summary: Continuing education is now accepted worldwide as a necessity. Identifying instructional needs and setting priorities for continuing education programs make it possible to achieve the desired quality. The present study was carried out to determine the views of general practitioners (GPs) who had participated in compiled continuing education programs on program quality, in terms of content, relevance to occupational needs, and ability to stimulate interest in further study. This was a descriptive-analytical study; the sample comprised 451 GPs who participated in continuing education programs in 2002. Data were gathered through a questionnaire. The results for the study's four specific objectives were as follows: 51% of participants rated the program's success in presenting new scientific subjects as very good; 63% considered the program content proportional to their occupational needs; and 61% considered the program effective in stimulating interest in personal study. The fourth specific objective was to identify the most important motivations for participating, which were scored as follows: reviewing information, 2.70; seeking solutions to professional problems, 2.63; exchanging information and experience, 2.84; and gaining points, 3.19. These programs were completely successful, but further improvement in instructional quality is recommended through presenting new scientific subjects, using a variety of methods to deliver the programs, ensuring that content matches GPs' occupational needs, and encouraging reasoning by posing important questions.

Comparison of the effectiveness of two educational methods on academic advisors' knowledge, attitude, and practice

Keywords: Academic Advisor, Knowledge, Attitude, Practice, Medical Students, Educational Workshop

Authors: Hazavehei, S. Department of Health Promotion and Education, School of Health, Isfahan University of Medical Sciences, Isfahan, Iran; Hazavehei@hlth.mui.ac.ir

Institution: Isfahan University of Medical Sciences

Summary: The purpose of this study was to investigate the effect of two educational methods (a workshop, and provision of educational material) on the knowledge, attitude, and practice of academic advisors (AAs) at Hamadan University of Medical Sciences. In this study, AAs took part in a pre-test (before the intervention) and in the experimental program. The AAs in the experimental program were randomly divided into two groups. Group 1 (N=43) attended a one-day workshop (educational method one), and Group 2 (N=44) received only educational material (educational method two). Data on knowledge, attitude, and practice were collected with valid and reliable questionnaires before the educational program and one academic semester after it. The results indicated significant differences (p<0.001) in knowledge of important educational policies and regulations related to academic guidance and student counseling between the pre-test group (M=10.77, SD=4.2), Group 1 (M=14.77), and Group 2 (M=11.54, SD=2.76). These differences existed only between Group 1 and both Group 2 and the pre-test group. There was a significant difference (p<0.05) in attitude between Group 1 (M=61.79, SD=5.78) and the pre-test group (M=57.20, SD=11.6). This study showed that an educational workshop program based on role playing, group discussion, group work, and interaction can influence behavior and attitude, resulting in improved skills and abilities. These findings may be useful in developing educational programs for university AAs.

Assessment of the intra-service rotations in anaesthesiology and reanimation: change in methodology

Keywords: Assessment in anaesthesiology, improving trainee's rotation, trainee's evaluation.

Authors: Rincon, R.

Institution: Hospital Germans Trias i Pujol

Summary:

Authors: Rincón R, Hinojosa M, Llasera R, Escudero A, Moret E, García Guasch R. Hospital Germans Trias i Pujol, Badalona, Barcelona (Spain)

Introduction: To improve the supervision of trainee rotations, the anaesthetist in charge of each area completes an evaluation form. This change in methodology should improve the trainee's individual performance.

Objectives: To improve the final result and achieve the stated objectives more successfully by identifying strengths and the weaknesses that need to be addressed.

Material and methods: Once the consultant has defined the objectives for their area, the evaluation form is completed at the halfway point and at the end of the rotation, independently by both the consultant and the resident. The two forms are then compared to establish the points to be improved and to track the learner's progress. The evaluation includes seven aptitude criteria and five attitude criteria, both assessed qualitatively on a descriptive, non-numerical scale.

Results:

-All trainees agreed to be evaluated, and all consultants agreed to carry out the evaluations.

-In 75% of cases, the trainee was unaware of the detected errors; once informed, trainees corrected 50% of them. Errors in clinical theory are easier to correct than practical errors, since the latter depend on the trainee's learning curve for the specific technique.

-This system improves the quality of observation, the setting of objectives and evaluation.

-Academic activity was reactivated in most areas.

Conclusion:

-The evaluation form is useful in the detection of problems.

-It improves the quality of training if both evaluations are done during each period.

-Consultants' interest in residents' training has been reawakened.

-The extra work involved in this evaluation process requires allocating six hours a week to the tutor.

Rheumatology Review Course on Personal Learning Projects as a Method of Continuing Professional Development

Keywords: Personal Learning Projects; Continuing Professional Development

Authors: Bell, M., Sibbald, G.

Institution: Sunnybrook and Women's College Health Sciences Centre

Summary: Purpose: To determine whether rheumatologists adopt and adhere to personal learning projects (PLPs) as a method of continuing professional development (CPD) and maintenance of certification after being introduced to the concept of PLPs and their use during a review workshop.

Methods: Rheumatologists attending a 2-day continuing education workshop took part in a 30-minute interactive lecture outlining the concept of learning portfolios and how to use a PLP as a method of continuing education. Attending rheumatologists completed pre- and post-workshop evaluation questionnaires, followed by a 3-month follow-up questionnaire.

Results: Twenty-five rheumatologists, with similar numbers of men and women and a mean of 16 years in practice, completed the pre-workshop, post-workshop, and 3-month follow-up questionnaires. Average awareness of CPD methods was 7.8 after the workshop, with a slight increase at 3-month follow-up. For 2002, the mean number of PLPs reported was 5.8, with a median of zero (range 0-120), whereas post-workshop and 3-month results showed an individual increase in PLPs in 2003. Time constraints remained the main barrier to personal involvement in CPD, and paper diaries remained the preferred method of recording PLPs.

Conclusion: Rheumatologists' awareness and application of PLPs increased, and the increase was sustained at 3 months. The benefits and ease of PLPs as a method of CPD need reinforcement to improve adoption and adherence.

Patient Satisfaction In An Ambulatory Rheumatology Clinic

Keywords: Patient Satisfaction; Rheumatology Clinic

Authors: Bell, M., Bedard, P.

Institution: Sunnybrook and Women's College Health Sciences Centre

Summary: Purpose: To determine patient satisfaction with care in the Division of Rheumatology at Sunnybrook & Women's College HSC across six domains: provision of information, empathy with the patient, attitude towards the patient, access to and continuity with the caregiver, technical quality and competence, and general satisfaction.

Methods: Patients with a diagnosis of chronic arthritis who had been seen in the clinic on at least three previous occasions were asked to complete the Leeds Patient Satisfaction Questionnaire (LPSQ) after registering for their appointment. The LPSQ is a 45-item survey scored on a 1-5 Likert scale (a score below 3 indicates dissatisfaction and a score above 3 indicates satisfaction) that measures satisfaction with care across the six domains described above. The attending rheumatologist and other clinic medical staff were not told which patients had completed the questionnaire. All questionnaires were scored according to the Leeds Satisfaction Questionnaire guidelines and checked by two independent investigators to minimize arithmetical errors. Descriptive statistics were calculated.
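The satisfaction thresholds described for the LPSQ can be illustrated with a short Python sketch that averages one respondent's 1-5 ratings and assigns a satisfaction band. The function name, the simple averaging of raw item scores, and the "neutral" label for a mean of exactly 3 are illustrative assumptions. The sketch does not implement the official Leeds scoring guidelines or the normalization behind the Overall Satisfaction score.

```python
from statistics import mean


def classify_satisfaction(item_scores):
    """Return the mean 1-5 Likert rating and its satisfaction band.

    Hypothetical helper: uses only the thresholds stated in the abstract
    (below 3 = dissatisfied, above 3 = satisfied). It is not the published
    LPSQ scoring procedure.
    """
    if not item_scores:
        raise ValueError("no item scores supplied")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("item scores must lie on the 1-5 scale")

    avg = mean(item_scores)
    if avg < 3:
        band = "dissatisfied"
    elif avg > 3:
        band = "satisfied"
    else:
        band = "neutral"  # assumption: the abstract does not define a score of exactly 3
    return avg, band


print(classify_satisfaction([4, 5, 4, 3]))
```

The same helper could be applied to each domain by passing only that domain's items. The item-to-domain mapping, however, is not given in this abstract.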


Results: Eighty-seven patients completed the questionnaire. A mean normalized Overall Satisfaction score, combining satisfaction rates across all subgroups, was calculated, together with overall mean scores for each subgroup: giving of information, empathy with the patient, technical quality and competence, attitude towards the patient, access to the service and continuity of care, and general satisfaction.

Conclusions: Patients appear to be very satisfied with the care they receive. Areas for future improvement include patient education about clinic services, waiting times, and access to urgent consultation when needed.

Determination of the Effect of a Teaching Skills Workshop on Interns' Evaluations of their Residents-as-Teachers

Keywords: Teaching skills; Clinical teaching; Educational spiral; Needs assessment

Authors: Ajami, A.(M.D.), Soltani Arabshahi, S.K. (M.D.), Siabani, S. (M.D.)

Institution: Iran Medical University, Deputy of Education, Medical Educational & Developmental Center

Summary: Introduction: Residents play an important role in teaching medical students and spend many contact hours with them. Developing teaching skills, becoming familiar with innovative teaching styles, knowing how to increase educational efficacy, and providing an educational spiral are therefore necessary components of residency programs.

Objective: To determine the effect of a teaching skills workshop on the teaching role of residents.

Materials & methods: This was a quasi-experimental study. A self-administered questionnaire was distributed among interns on the pediatrics and internal medicine wards of 2 universities. Randomly selected residents then participated in an 8-hour workshop. Two to three months after the workshop, the interns completed the questionnaire again.

Results: There was a significant difference between the mean group ratings for all teaching-skill characteristics at both universities. Overall teaching skills increased at Iran University, and at Kermanshah University 5 categories of teaching skills increased, the exceptions being "Giving feedback" and "Professional characteristics". Residents' overall teaching effectiveness increased after the workshop.

Conclusion: The increase in skill scores after the workshop shows that training programs and teaching skills courses for residents should be delivered as a formal part of residency programs. A needs assessment should be carried out to develop such a course.

The dual roles of the global rating scale on a 30 station Objective Structured Clinical Examination for chiropractic radiologists: reward and punishment, plus standard setting

Keywords: OSCE, borderline method, global rating scale

Authors: Lawson, D.; DeVries, R.

Institution: Lawson: University of Calgary; DeVries: Northwestern Health Sciences University